Creators: Maryia Markhvida, Dror Margalit, Peter Zhang

Voice: Zeynep Elif Ergin

If we are lucky, we are born into the loving arms of our parents and for many years they guide us through life and help us understand the events around us. But at some point, sooner or later, life takes a turn and we are all eventually thrown into the chaos of this world. In these moments things often stop making sense and we have a hard time navigating the day-to-day. Eventually though, most of us adapt and figure out some way to go on.

Sound, in a way, is a chaotic disturbance of the air, but somehow we learn to make sense of it and even to use our voices and the things around us to reproduce its strange frequencies. We start recognizing patterns in the randomness and eventually derive deep meaning from them.

This work is an interactive sound journey that uses the evolution of randomly generated frequencies to reflect the human experience of uncertainty and chaos. It tells Zeynep Elif Ergin’s story through a composition of computer-generated sounds, her voice, and interactive visuals.

Inspiration and Process

After our first Hypercinema lecture, I became very intrigued by the composition of sound as a signal built from a superposition of sine waves. This is something I vaguely knew about from my engineering background (I worked a lot with earthquake wave signals during my PhD) but never got to actually play with, let alone create. I was also curious to hear what different sounds could be produced if I used different probability distributions (uniform, normal, lognormal) to generate the number of waves, their frequencies, and their amplitudes.

Once we formed a group with Dror and Pete, we started talking about what uncertainty and randomness meant to each of us. We discussed how, when people face moments of high uncertainty, they are first thrown into absolute chaos and then slowly tend to embrace the uncertainty and shape the chaos into something that feels more familiar. We eventually arrived at a question: What would one's journey through uncertain times sound like using randomly generated sounds?

All of us wanted this piece of work to be grounded in and driven by real human experience. In the words of Haley Shaw on creating soundscapes:

‘…even when going for goosebumps, the intended feeling should emerge from the story, not the design’

The final piece is presented in an interactive web interface, which allows one to listen to Zeynep Elif Ergin’s story through a progression of randomly generated noises. The listener can experience the story without its main subject (inspired by the removal of the main character in Janet Cardiff’s work) or overlay her voice on the computer-generated soundscape. The visuals also evolve gradually as the story unfolds.

The first question was how one could randomly generate sound starting from scratch, i.e. a blank Python Jupyter Notebook. After a quick conversation with my father about the physics of sound waves and superposition, I had an idea of what to do.

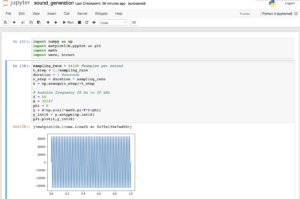

I started by generating a simple sine wave (formula below) and converting it into a .wav file.

$$ y = A \sin(2\pi f t + \phi) $$

This is the equation of a sine wave at time $t$ with amplitude ($A$), frequency ($f$), and phase angle ($\phi$), all of which were eventually randomized according to different probability distributions. Below is a sample of my first Python code and the first sounds I generated using a single frequency:

- Frequency 100 Hz:

- Frequency 10 kHz (WARNING: NOT PLEASANT, play at your own risk):
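For anyone who wants to try this at home, here is a minimal sketch of the same idea using only Python's standard library (`math`, `struct`, `wave`). It is not the original notebook code: the 44.1 kHz sample rate, the 16-bit mono format, the default amplitude of 0.5, and the function names `sine_wave` and `write_wav` are my own assumptions for illustration.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second (assumed CD-quality rate)


def sine_wave(freq_hz, duration_s, amplitude=0.5, phase=0.0, sample_rate=SAMPLE_RATE):
    """Sample y = A sin(2*pi*f*t + phi) at evenly spaced times t = n / sample_rate."""
    n_samples = int(duration_s * sample_rate)
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * (n / sample_rate) + phase)
        for n in range(n_samples)
    ]


def write_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Write float samples in [-1, 1] as a mono, 16-bit PCM .wav file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)  # mono
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(sample_rate)
        # Clip to [-1, 1], scale to the int16 range, pack as little-endian shorts.
        frames = b"".join(
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
        )
        wav.writeframes(frames)
```

For example, `write_wav("tone_100hz.wav", sine_wave(100, 2.0))` writes a two-second 100 Hz tone; passing `10_000` instead of `100` gives the 10 kHz version (same warning applies).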

Then I played around and generated many different sounds. Here are some things I tried:


- Broke the duration down into many phases of varying speed, each with a different sound;

- Created “heartbeats” with low frequencies;

- Superimposed between 2 and 100 waves of varying frequency, amplitude, and phase angle to create multi-dimensional sound;

- Tried uniform and normal distributions to randomly generate frequencies and sounds;

- Generated random sounds from musical note frequencies spanning 5 octaves;

- Generated random arpeggios;

- Limited the notes to the C major scale to produce random arpeggios (which creates a happier tone).
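The list above mixes two ideas: summing many random sine waves, and picking notes from a musical scale. The sketch below illustrates both under stated assumptions; it is not the notebook we actually used. A superposition of $N$ waves is the sum $y(t) = \sum_{i=1}^{N} A_i \sin(2\pi f_i t + \phi_i)$, and note frequencies follow twelve-tone equal temperament, $f = 440 \cdot 2^{n/12}$ Hz for a note $n$ semitones away from A4. The frequency ranges, distribution parameters, sample rate, and helper names (`tone`, `draw_frequency`, `random_superposition`, `random_c_major_arpeggio`, `render_arpeggio`) are hypothetical, and "arpeggio" is taken here to mean a random C major triad played one note at a time, lowest first.

```python
import math
import random

SAMPLE_RATE = 44100  # samples per second (assumed)
C_MAJOR = [0, 2, 4, 5, 7, 9, 11]  # semitones of C D E F G A B above C


def tone(freq_hz, duration_s, amplitude=1.0, phase=0.0, sample_rate=SAMPLE_RATE):
    """Samples of y = A sin(2*pi*f*t + phi)."""
    n = int(duration_s * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate + phase) for i in range(n)]


def draw_frequency(rng, dist):
    """One random frequency in Hz; the parameters are illustrative guesses."""
    if dist == "uniform":
        return rng.uniform(50.0, 2000.0)
    if dist == "normal":
        return max(20.0, rng.gauss(440.0, 150.0))  # clamp so it stays audible
    if dist == "lognormal":
        return rng.lognormvariate(math.log(440.0), 0.5)  # median of 440 Hz
    raise ValueError(f"unknown distribution: {dist!r}")


def random_superposition(duration_s, rng, dist="uniform"):
    """Sum 2-100 sine waves with random frequency, amplitude and phase angle."""
    n_waves = rng.randint(2, 100)  # inclusive bounds
    waves = [
        (draw_frequency(rng, dist), rng.random(), rng.uniform(0.0, 2 * math.pi))
        for _ in range(n_waves)
    ]
    total_amp = sum(a for _, a, _ in waves) or 1.0  # dividing by this keeps |y| <= 1
    parts = [tone(f, duration_s, a, p) for f, a, p in waves]
    return [sum(column) / total_amp for column in zip(*parts)]


def note_frequency(semitones_from_a4):
    """Equal temperament: each semitone multiplies f by 2**(1/12); A4 = 440 Hz."""
    return 440.0 * 2 ** (semitones_from_a4 / 12)


def random_c_major_arpeggio(rng, octaves=range(2, 7)):
    """Frequencies of a random diatonic C major triad, lowest note first.

    Chord roots come from five octaves (C2 to B6), an assumed range.
    """
    octave = rng.choice(list(octaves))
    root = rng.randrange(7)  # scale degree of the chord root
    freqs = []
    for step in (0, 2, 4):  # stack thirds: root, third, fifth
        idx = root + step
        semis_from_c4 = 12 * (octave - 4 + idx // 7) + C_MAJOR[idx % 7]
        freqs.append(note_frequency(semis_from_c4 - 9))  # C4 is 9 semitones below A4
    return freqs


def render_arpeggio(freqs, note_s=0.25):
    """Play the notes one after another as a single list of samples."""
    samples = []
    for f in freqs:
        samples.extend(tone(f, note_s, amplitude=0.5))
    return samples
```

Dividing by the sum of the amplitudes keeps the mix within [-1, 1], so it will not clip when converted to 16-bit audio, and passing a seeded `random.Random` makes a particular "random" sound reproducible.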

Here is an example of one of these generated sounds:

In the meantime, Dror and Peter recorded the interview with Zeynep Elif Ergin as well as additional ambient sounds around NYC, and used Adobe Audition to compose the final piece with the randomly generated sounds.

The last step was to create the interactive interface with p5.js [link to code], which gives the listener the option of playing only the ‘chaos track’ or overlaying the voice when the mouse is inside the center square. As the track plays, the uncertainty and chaos slowly resolve, both sonically and visually… but they are never quite gone.