Role:

Since I was the most experienced with Unity, I took responsibility for writing the scripts and handling the other technical work in Unity.

Technical Description & Issues:

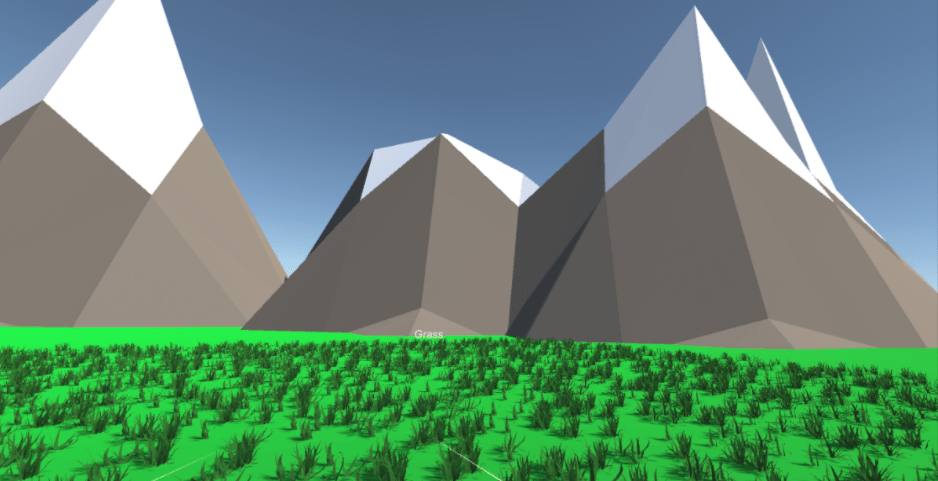

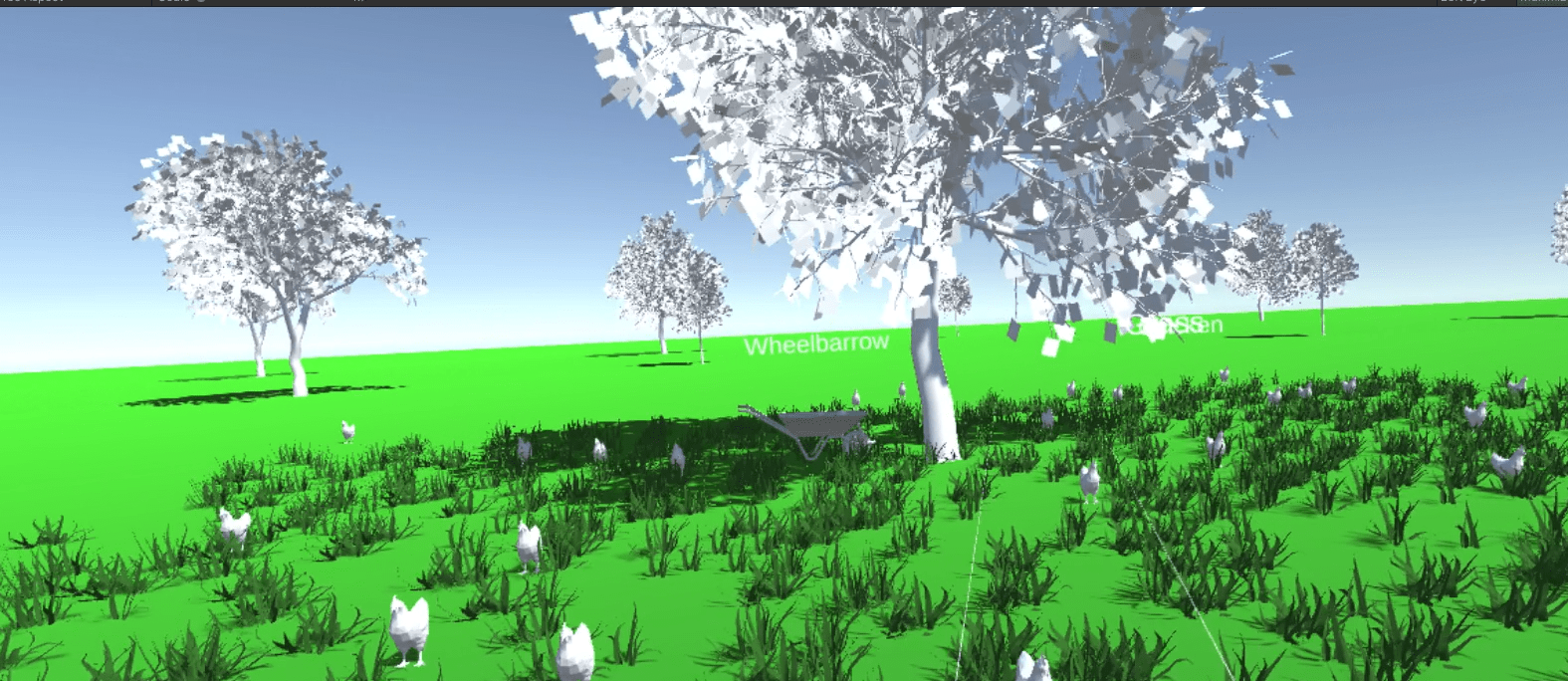

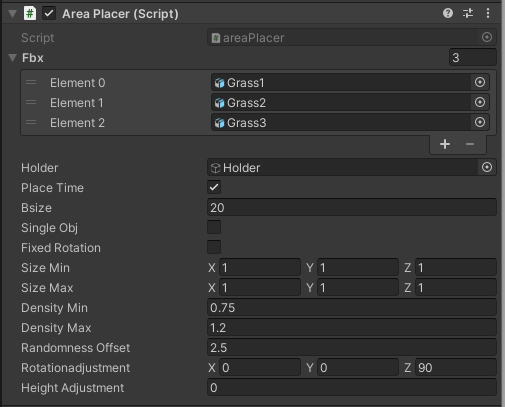

Going in, I knew that the project should follow the general principles of object-oriented programming: first a general classification, then minor modifiers that change the parameters of each object.

The script above is designed to handle every type of object we could want to put in. The list fbx contains all variations of a model; in this case, 3 different models of grass could spawn when issued the command “create grass.” The holder is for organization: every instantiated object becomes a child of an empty object in the prefab with that name, so when I had to update rotation, size, placement, etc., all I had to do was clear all the children of the object “holder.”

The other options are fairly straightforward. One thing I noticed about how some of the models interacted with Unity was that they would spawn in rotated for no obvious reason, even though their axes were technically correct outside of Unity. For this I added two extra parameters: rotation adjustment (which also randomly rotates objects on the y-axis so placement seems more natural) and height adjustment, in case an object came in floating or too deep underground depending on its pivot.
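As a rough illustration of the parameters described above, here is a minimal sketch of such a spawner. It is not the actual script from the screenshots: the class name `VariantSpawner`, the methods `SpawnAt` and `ClearHolder`, and the field names `rotationAdjustment` and `heightAdjustment` are assumptions; only `fbx` and `holder` are names taken from the text.

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical sketch of a variant spawner; only "fbx" and "holder" are names from the write-up.
public class VariantSpawner : MonoBehaviour
{
    public List<GameObject> fbx = new List<GameObject>(); // all variations of one model
    public Transform holder;                               // empty child that parents every spawned object
    public Vector3 rotationAdjustment;                     // corrects models that import rotated (assumed name)
    public float heightAdjustment;                         // corrects floating or sunken pivots (assumed name)

    // Spawns one random variation at the given ground position.
    public GameObject SpawnAt(Vector3 groundPosition)
    {
        if (fbx.Count == 0 || holder == null) return null;

        // Integer overload of Random.Range: the upper bound is exclusive.
        GameObject prefab = fbx[Random.Range(0, fbx.Count)];

        // Apply the fixed correction first, then a random spin around the world y-axis.
        Quaternion rotation = Quaternion.Euler(0f, Random.Range(0f, 360f), 0f)
                              * Quaternion.Euler(rotationAdjustment);
        Vector3 position = groundPosition + Vector3.up * heightAdjustment;

        return Instantiate(prefab, position, rotation, holder);
    }

    // Removes everything that was spawned so the set can be rebuilt with new parameters.
    public void ClearHolder()
    {
        for (int i = holder.childCount - 1; i >= 0; i--)
        {
            Destroy(holder.GetChild(i).gameObject);
        }
    }
}
```

Parenting every instance to `holder` is what makes the reset cheap: the children can be destroyed in one loop without tracking them in a separate list.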

When converting to the XR Interaction Toolkit, it took me several days to really figure out the new input system. Most online tutorials explicitly say to use action-based rigs, but that approach seemed very unclear to me, and since I knew I was working with an Oculus Quest 2, I figured I could go with device-based instead. This meant, however, that I needed to declare the devices and look them up myself within each script.

    private InputDevice headset;
    private InputDevice lContr;
    private InputDevice rContr;
    private bool devFound;
    private CharacterController gc;
    private Vector3 cEA = new Vector3(0,0,0);

    void Start()
    {
        gc = gameObject.GetComponent<CharacterController>();

        // Look up the headset and both controllers once at startup.
        List<InputDevice> hds = new List<InputDevice>();
        List<InputDevice> lcs = new List<InputDevice>();
        List<InputDevice> rcs = new List<InputDevice>();
        InputDeviceCharacteristics hsc = InputDeviceCharacteristics.HeadMounted;
        InputDeviceCharacteristics lcc = InputDeviceCharacteristics.Left;
        InputDeviceCharacteristics rcc = InputDeviceCharacteristics.Right;
        InputDevices.GetDevicesWithCharacteristics(hsc, hds);
        InputDevices.GetDevicesWithCharacteristics(lcc, lcs);
        InputDevices.GetDevicesWithCharacteristics(rcc, rcs);

        if (hds.Count > 0 && lcs.Count > 0 && rcs.Count > 0)
        {
            headset = hds[0];
            lContr = lcs[0];
            rContr = rcs[0];
            devFound = true;
        }
        else
        {
            Debug.LogError("No Headset or Controller(s) Detected");
        }
    }

    void Update()
    {
        if (devFound == false)
        {
            // Repeat the lookup every frame until all three devices are found.
            List<InputDevice> hds = new List<InputDevice>();
            List<InputDevice> lcs = new List<InputDevice>();
            List<InputDevice> rcs = new List<InputDevice>();
            InputDeviceCharacteristics hsc = InputDeviceCharacteristics.HeadMounted;
            InputDeviceCharacteristics lcc = InputDeviceCharacteristics.Left;
            InputDeviceCharacteristics rcc = InputDeviceCharacteristics.Right;
            InputDevices.GetDevicesWithCharacteristics(hsc, hds);
            InputDevices.GetDevicesWithCharacteristics(lcc, lcs);
            InputDevices.GetDevicesWithCharacteristics(rcc, rcs);

            if (hds.Count > 0 && lcs.Count > 0 && rcs.Count > 0)
            {
                headset = hds[0];
                lContr = lcs[0];
                rContr = rcs[0];
                devFound = true;
            }
            else
            {
                Debug.LogError("No Headset or Controller(s) Detected");
            }
        }
        else
        {
            // Read both thumbsticks once the devices are available.
            lContr.TryGetFeatureValue(CommonUsages.primary2DAxis, out Vector2 ljs);
            rContr.TryGetFeatureValue(CommonUsages.primary2DAxis, out Vector2 rjs);

At first, the if statement was hds.Count > 0 || lcs.Count > 0 || rcs.Count > 0, and it ran only in the Start() function. I fairly quickly realized the problem with this: if the headset wasn’t on before starting the program, it would never be registered until the program was restarted. The simplest fix was to also run the check in Update() until the bool “devFound” was set, and to require that all 3 devices (headset, right controller, left controller) were detected rather than just one. Once the devices are found, Update() uses TryGetFeatureValue() to actually read the controller input.
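Since the same lookup has to run in both Start() and Update(), one possible tidy-up is to move it into a single helper. The sketch below is only a suggestion under that assumption; the class name `DeviceFinder` and the helpers `TryGetFirst` and `TryFindDevices` are hypothetical, while the device fields and the `InputDevices` calls match the excerpt above.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR;

// Hypothetical refactor: one lookup helper shared by Start() and Update().
public class DeviceFinder : MonoBehaviour
{
    private InputDevice headset;
    private InputDevice lContr;
    private InputDevice rContr;
    private bool devFound;

    // Reused scratch list so the lookup does not allocate every frame.
    private readonly List<InputDevice> found = new List<InputDevice>();

    // Returns the first device matching the given characteristics, if any.
    private bool TryGetFirst(InputDeviceCharacteristics characteristics, out InputDevice device)
    {
        found.Clear();
        InputDevices.GetDevicesWithCharacteristics(characteristics, found);
        device = found.Count > 0 ? found[0] : default;
        return found.Count > 0;
    }

    // Succeeds only when the headset AND both controllers are present.
    private bool TryFindDevices()
    {
        if (TryGetFirst(InputDeviceCharacteristics.HeadMounted, out InputDevice h) &&
            TryGetFirst(InputDeviceCharacteristics.Left, out InputDevice l) &&
            TryGetFirst(InputDeviceCharacteristics.Right, out InputDevice r))
        {
            headset = h;
            lContr = l;
            rContr = r;
            return true;
        }
        return false;
    }

    void Start()
    {
        devFound = TryFindDevices();
    }

    void Update()
    {
        if (!devFound)
        {
            // Keep retrying until everything has been switched on.
            devFound = TryFindDevices();
            return;
        }

        lContr.TryGetFeatureValue(CommonUsages.primary2DAxis, out Vector2 ljs);
        rContr.TryGetFeatureValue(CommonUsages.primary2DAxis, out Vector2 rjs);
        // ljs and rjs now hold the thumbstick positions for this frame.
    }
}
```

The behaviour is the same as before: nothing is read until all three devices exist. The difference is that the "all three" condition lives in one place.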

Since I was still more used to traditional input methods, I didn’t know how to use custom raycasts for the controllers; it seemed to me that the default ray interaction scripts in the package were only good for picking things up and the like. I came up with a rather cheeky workaround, as I remembered that we could use prefabs as display models for the hands. I could attach a script to each of the prefabs, and they would follow the controllers as intended while I worked in more familiar territory inside the script. There were therefore 2 raycasts coming from the hands: one that detects only the “control nodes” around each object, and one that ignores only control nodes. This came after I realized that with a single raycast, any object you tried to grab would gradually drift towards the user, since the raycast kept detecting the top of the “control node” as the new position for the object instead of an absolute position on the map.
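A minimal sketch of the two-raycast idea, assuming the control nodes sit on their own physics layer. The layer name "ControlNode", the class name `HandRays`, and the properties `HoveredNode` and `PlacementPoint` are hypothetical and not taken from the project.

```csharp
using UnityEngine;

// Hypothetical script attached to a hand prefab; it casts two rays along the hand's forward axis.
public class HandRays : MonoBehaviour
{
    public float maxDistance = 50f;

    // Control node currently pointed at, or null if none.
    public Transform HoveredNode { get; private set; }

    // Point on the map (anything that is not a control node) currently pointed at, or null if none.
    public Vector3? PlacementPoint { get; private set; }

    private int nodeMask;

    void Awake()
    {
        // Assumes a layer called "ControlNode" exists in the project settings.
        nodeMask = LayerMask.GetMask("ControlNode");
    }

    void Update()
    {
        Ray ray = new Ray(transform.position, transform.forward);

        // Ray 1: sees ONLY control nodes, so it answers "which object is being grabbed?"
        HoveredNode = Physics.Raycast(ray, out RaycastHit nodeHit, maxDistance, nodeMask)
            ? nodeHit.transform
            : null;

        // Ray 2: sees everything EXCEPT control nodes, so it answers "where on the map should it go?"
        PlacementPoint = Physics.Raycast(ray, out RaycastHit mapHit, maxDistance, ~nodeMask)
            ? mapHit.point
            : (Vector3?)null;
    }
}
```

Because the placement ray never hits the node itself, the target position stays on the map surface rather than creeping up the front of the node towards the user.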

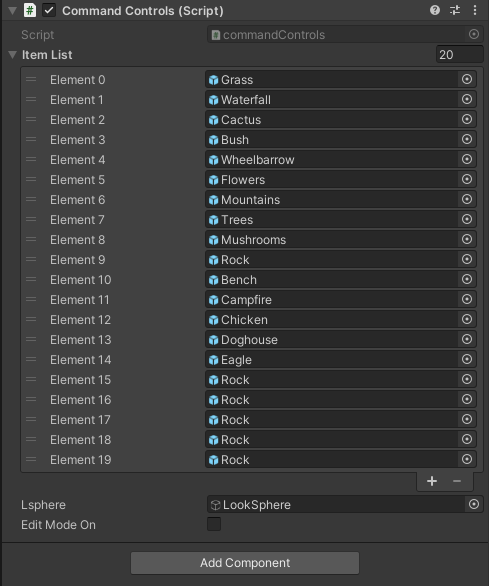

The script for voice commands and editing could not follow the idea of “flexibility” the way areaPlacer.cs (which controls each individual object’s parameters) does, because each word needed to perform its own separate function.

When I started to work on the “edit mode,” I also realized another flaw in the plan: the control scheme. Because of the limited number of buttons on the controllers, I wasn’t sure how to move forward with resizing and the like, and voice commands were not viable since the voice recognition has no universal way to capture numbers. In other words, I’d have to limit it to specific numbers such as 1-10; otherwise I’d be copy-pasting numbers and commands indefinitely to accommodate float values. In the end I discovered that the controllers track velocity as well as position, with values ranging from -1 to 1, so I set a deadzone of 0.1, and then forwards/backwards/left/right/up/down motion could be used to control these parameters.
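The deadzone logic can be sketched in plain C#, independent of Unity. The names `AxisDeadzone`, `Apply`, and `Resize` are hypothetical, as are the `speed` parameter and the minimum size of 0.01; in Unity, the axis value would come from one component of the controller velocity (for example, the value read through `CommonUsages.deviceVelocity`).

```csharp
using System;

// Hypothetical helper: filters small controller movements and maps the rest to a size change.
public static class AxisDeadzone
{
    // Returns 0 while |value| is inside the deadzone, otherwise the value unchanged.
    public static float Apply(float value, float deadzone = 0.1f)
    {
        return Math.Abs(value) < deadzone ? 0f : value;
    }

    // Grows or shrinks a size by the filtered axis value; never goes below a small minimum.
    public static float Resize(float currentSize, float axisValue, float speed, float deltaTime)
    {
        float filtered = Apply(axisValue);
        return Math.Max(0.01f, currentSize + filtered * speed * deltaTime);
    }
}
```

With a deadzone of 0.1, small hand tremors leave the object untouched, and only a deliberate push along an axis changes the parameter.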

Improvements:

The number one thing that requires fixing is the edit mode, since it was much more rushed than the ability to create objects. I realized that there was a problem with overlapping objects: whichever collider stuck out further would end up being the one edited, similar to the problem that was solved with dual raycasts on the controllers. This could be rectified by no longer editing whatever the raycast currently hits and instead marking the first object hit when the triggers are pulled, ensuring that only that initial object is controlled until the triggers are released.

Otherwise, adding more models would’ve been nice, but it ended up being more time-consuming than expected, especially since they were all taken from online sources, with different file formats and different orientations. Some also had extra parts that didn’t make sense, such as backgrounds or additional environmental pieces; these were unnecessary because building the environment is the point of the project itself, so we had to use Blender/Maya to edit those parts out of the models.

The colliders acting as the “edit nodes” were also somewhat flawed, since I used bounds to simply create a collider around the entirety of a prefab, even if it spawned multiple objects such as grass in a field. This is a problem because of how Unity registers hits: if you are inside the collider, you can no longer interact with the object. The way I originally designed it was with a small ball in the center, but for larger objects the ball would be obscured from view. The easiest fix would simply be to switch the project to URP, where we could use camera stacking to make sure that the nodes are always rendered above everything else.

I’d also be interested in being able to create seasonal effects, such as snowfall, rain, and so on. I assume I could make them follow logic similar to the object prefab script, wherein there is a matrix grid of raycasts so that each individual object can adjust its height independently, but with a particle system instead.
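The edit-mode fix proposed above (lock onto the first object hit while the triggers are held) could be sketched as a small state holder. This is a plain C# illustration rather than project code; the class name `SelectionLock` and its members are hypothetical, and in Unity the type argument would typically be `Transform` or `GameObject`.

```csharp
// Hypothetical helper: remembers the first object hit while the trigger is held.
public sealed class SelectionLock<T> where T : class
{
    // The object currently being edited, or null if nothing is locked.
    public T Locked { get; private set; }

    // Call once per frame with the trigger state and whatever the raycast hits this frame (or null).
    public T Update(bool triggerHeld, T currentHit)
    {
        if (!triggerHeld)
        {
            Locked = null;        // releasing the trigger drops the lock
        }
        else if (Locked == null)
        {
            Locked = currentHit;  // first hit while the trigger is held becomes the locked object
        }
        // While a lock exists, later hits on other objects are ignored.
        return Locked;
    }
}
```

Edits would then be applied to the returned object instead of the raw raycast hit, so an overlapping collider that drifts in front of the ray mid-edit cannot steal the selection.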