Pixels-Reality

Abstract

We live in an era in which the digital (virtual) world intersects with the real one. AR/VR technologies and smartphones let us create and interact with virtual objects anchored in our real surroundings. For example, we can see virtual Pokemon in the Pokemon Go game, we can use Microsoft HoloLens to interact with a virtual object in the real world, and VR can even take us to an entirely new world that is completely different from where we really are. This prompted me to think critically about virtual creatures and the real world: what if we virtualized people in the real world? Are we virtual creatures too, like the Pokemon in Pokemon Go?

Concept

Everything on a screen is displayed as a collection of pixels, so the idea of my project is simple: pixelate the human in a video/image. In a video or image, we too are just a set of pixels. To show the pixel style, I need to manipulate the pixels that make up the body by enlarging them into visible blocks.

Development

Model

DenseDepth is a machine learning model that estimates depth from an image or video frame. Using it, I get a depth image that I can operate on. The image encodes depth as brightness.

As you can see, my head is a little darker than the surroundings: the RGB values of my body pixels are smaller than those of the background pixels. This difference lets me pixelate my body while keeping the original background.

Combine with Processing

After getting the depth video from the DenseDepth model, I bring the model result into Processing. I started from the sample code provided with the Processing libraries.

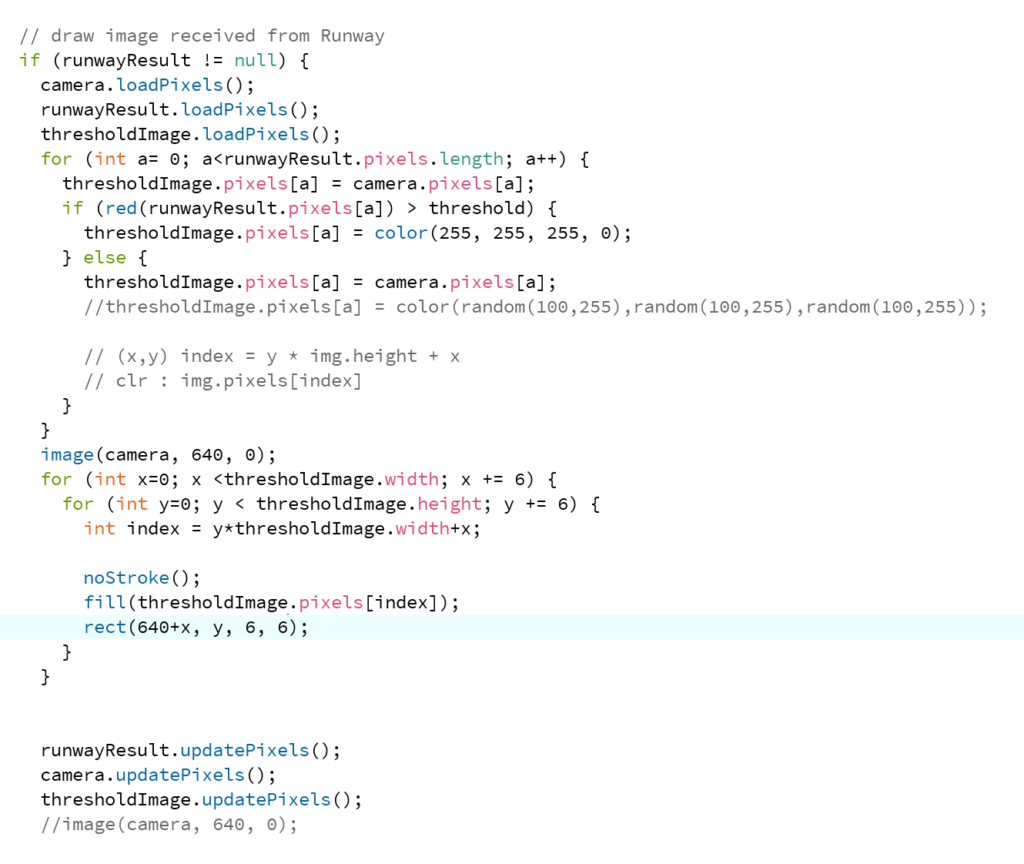

This is the key code block of my project, and I wrote it by hand. The basic idea is to keep the original background and pixelate only the body. To achieve this, I split the work into four steps:

- Copy background image from camera

- Create a new image, draw the body contour, and make the rest of the image transparent so that the background image copied in step 1 shows through

- Pixelate body contour

- Draw background and pixelated image

Copy background image from camera

This is the easiest step of my project. A single call, image(camera,640,0), draws the camera frame at position (640, 0).

Create a new image, draw the body contour, and make the rest of the image transparent so that the background image copied in step 1 shows through

This is a little tricky, and it is where I use the depth image I got from the model. First, I create a new image, which I call the threshold image. Then I go through each pixel of the depth image: if its brightness is larger than a threshold value (103 in my testing), the pixel belongs to the background, so I set the corresponding pixel of the threshold image to transparent. Otherwise, if the brightness is smaller than the threshold value, the pixel belongs to the body and I draw it.

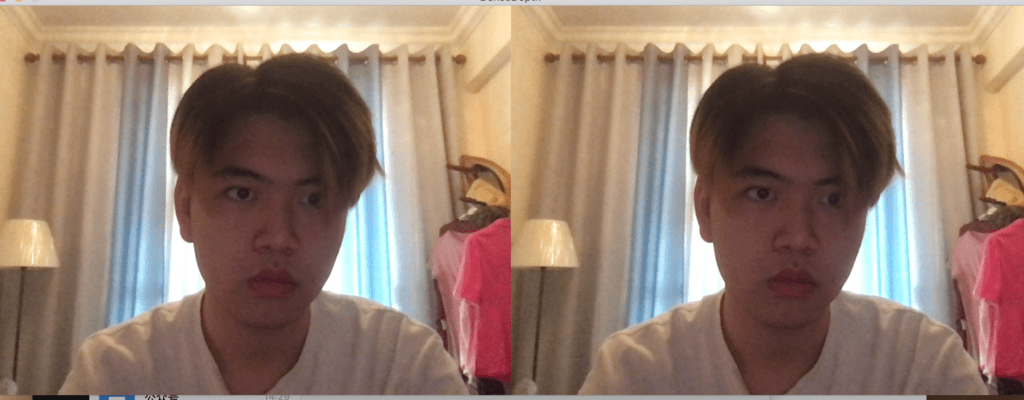

Here is what I got. For testing purposes, I set the RGB values of the body contour pixels to random values.
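The thresholding step described above can be sketched roughly as follows. This is plain Java rather than a Processing sketch, working on packed ARGB integers like the ones in Processing's pixels[] array. The class and method names (DepthMask, maskBody) are hypothetical, and two details are assumptions not stated in the post: body pixels are copied from the camera frame, and a brightness exactly equal to the threshold counts as body.

```java
// Hypothetical helper, not the author's code: builds the "threshold image"
// from a depth frame and a camera frame of the same size (packed ARGB ints).
final class DepthMask {
    // Fully transparent pixel (alpha = 0).
    static final int TRANSPARENT = 0x00FFFFFF;

    // Brightness as the largest of the R, G, B channels (0..255).
    // For a grayscale depth image all three channels are equal anyway.
    static int brightness(int argb) {
        int r = (argb >> 16) & 0xFF;
        int g = (argb >> 8) & 0xFF;
        int b = argb & 0xFF;
        return Math.max(r, Math.max(g, b));
    }

    // Bright depth pixels (background) become transparent; the rest (body)
    // keep the camera color, made fully opaque. Assumption: brightness equal
    // to the threshold is treated as body.
    static int[] maskBody(int[] depth, int[] camera, int threshold) {
        if (depth.length != camera.length) {
            throw new IllegalArgumentException("frames must have the same size");
        }
        int[] out = new int[depth.length];
        for (int i = 0; i < depth.length; i++) {
            boolean background = brightness(depth[i]) > threshold;
            out[i] = background ? TRANSPARENT : (camera[i] | 0xFF000000);
        }
        return out;
    }
}
```

With the threshold from my testing, a call would look like DepthMask.maskBody(depthPixels, cameraPixels, 103), where both arrays are hypothetical names for the two frames.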

Pixelate body contour

To pixelate the body contour, I did a pixel manipulation:

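    noStroke();  // no outlines around the blocks
    // Sample the threshold image every 6 pixels and draw a 6x6 block in that pixel's color.
    for (int x = 0; x < thresholdImage.width; x += 6) {
      for (int y = 0; y < thresholdImage.height; y += 6) {
        int index = y * thresholdImage.width + x;
        fill(thresholdImage.pixels[index]);
        rect(640 + x, y, 6, 6);  // shifted right by 640, like the camera image
      }
    }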

This code block tells the computer to step through the threshold image 6 pixels at a time in both directions; at each step, it draws a 6×6 rectangle filled with the color of the current pixel. After the pixel manipulation, I got:

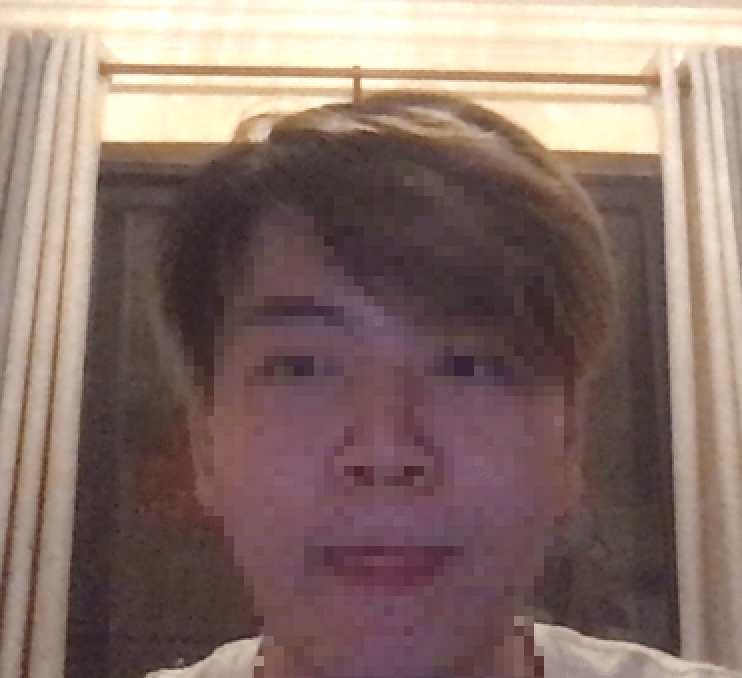

This is the final version of the threshold image: the body contour is pixelated and the background is transparent. The final step is to draw the background image and the threshold image.
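The same pixelation can also be done directly on a pixel array instead of drawing rectangles. The following is a minimal sketch in plain Java, assuming a row-major array of packed ARGB integers; the class name Pixelate and the block parameter are hypothetical. As in the loop above, each block takes the color of its top-left pixel, and blocks at the right and bottom edges are clipped to the image.

```java
// Hypothetical helper, not the author's code: block pixelation on a
// row-major pixel array of size width * height.
final class Pixelate {
    static int[] pixelate(int[] src, int width, int height, int block) {
        if (block < 1) {
            throw new IllegalArgumentException("block must be >= 1");
        }
        if (src.length != width * height) {
            throw new IllegalArgumentException("array size must be width * height");
        }
        int[] out = new int[src.length];
        for (int by = 0; by < height; by += block) {
            for (int bx = 0; bx < width; bx += block) {
                // Color of the block's top-left pixel, like pixels[index] above.
                int color = src[by * width + bx];
                int yEnd = Math.min(by + block, height);
                int xEnd = Math.min(bx + block, width);
                // Fill the whole (possibly clipped) block with that color.
                for (int y = by; y < yEnd; y++) {
                    for (int x = bx; x < xEnd; x++) {
                        out[y * width + x] = color;
                    }
                }
            }
        }
        return out;
    }
}
```

Calling Pixelate.pixelate(pixels, width, height, 6) corresponds to the 6×6 rectangles used in my project; a transparent top-left pixel yields a fully transparent block.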

Draw background and pixelated image

This is the final step, and it is also quite easy. First, I draw the background image so that it sits at the bottom. Second, I draw the threshold image on top of the background image so that it is not covered. Since the threshold image is transparent everywhere outside the body, the background image drawn first shows through. The final result is:

Even though it is blurry, we can tell that the head is pixelated.
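The draw order described in this step (background first, threshold image on top) amounts to "source-over" compositing. Processing does this for me when I call image() twice, so the following plain-Java sketch is only an illustration of what happens per pixel, assuming packed ARGB integers and an opaque background. The class and method names (Composite, drawOver) are hypothetical.

```java
// Hypothetical illustration, not the author's code: composite an overlay
// with per-pixel alpha on top of an opaque background.
final class Composite {
    // Blend one 0..255 channel: alpha 255 keeps src, alpha 0 keeps dst.
    static int blend(int src, int dst, int alpha) {
        return (src * alpha + dst * (255 - alpha) + 127) / 255;
    }

    // Source-over for a single pixel; the result is fully opaque.
    static int over(int top, int bottom) {
        int a = (top >>> 24) & 0xFF;
        int r = blend((top >> 16) & 0xFF, (bottom >> 16) & 0xFF, a);
        int g = blend((top >> 8) & 0xFF, (bottom >> 8) & 0xFF, a);
        int b = blend(top & 0xFF, bottom & 0xFF, a);
        return 0xFF000000 | (r << 16) | (g << 8) | b;
    }

    // Draw the overlay over the background, pixel by pixel.
    static int[] drawOver(int[] background, int[] overlay) {
        if (background.length != overlay.length) {
            throw new IllegalArgumentException("images must have the same size");
        }
        int[] out = new int[background.length];
        for (int i = 0; i < out.length; i++) {
            out[i] = over(overlay[i], background[i]);
        }
        return out;
    }
}
```

Where the overlay is fully transparent, the background pixel survives unchanged; where it is opaque (the pixelated body), the overlay pixel replaces it.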

Conclusion & Future Development

This is a simple experiment with the DenseDepth model and pixel manipulation. The idea is simple, but the process was a struggle. I learned a lot from it, and I thank Aven for his patient help! In terms of quality, there is one thing I think is worth refining: if the rectangle size is too large, the person in front of the camera ends up looking like a criminal suspect whose face has been obscured. It is funny, but it is not what I set out to achieve. So in the future, it would be a good idea to add interaction: the audience could control the size of the rectangles themselves and decide their own experience.
