Computer Vision art project: Two-Stream Convolutional Networks for Dynamic Texture Synthesis

This week I did a case study on “Two-Stream Convolutional Networks for Dynamic Texture Synthesis”.

Link: https://ryersonvisionlab.github.io/two-stream-projpage/

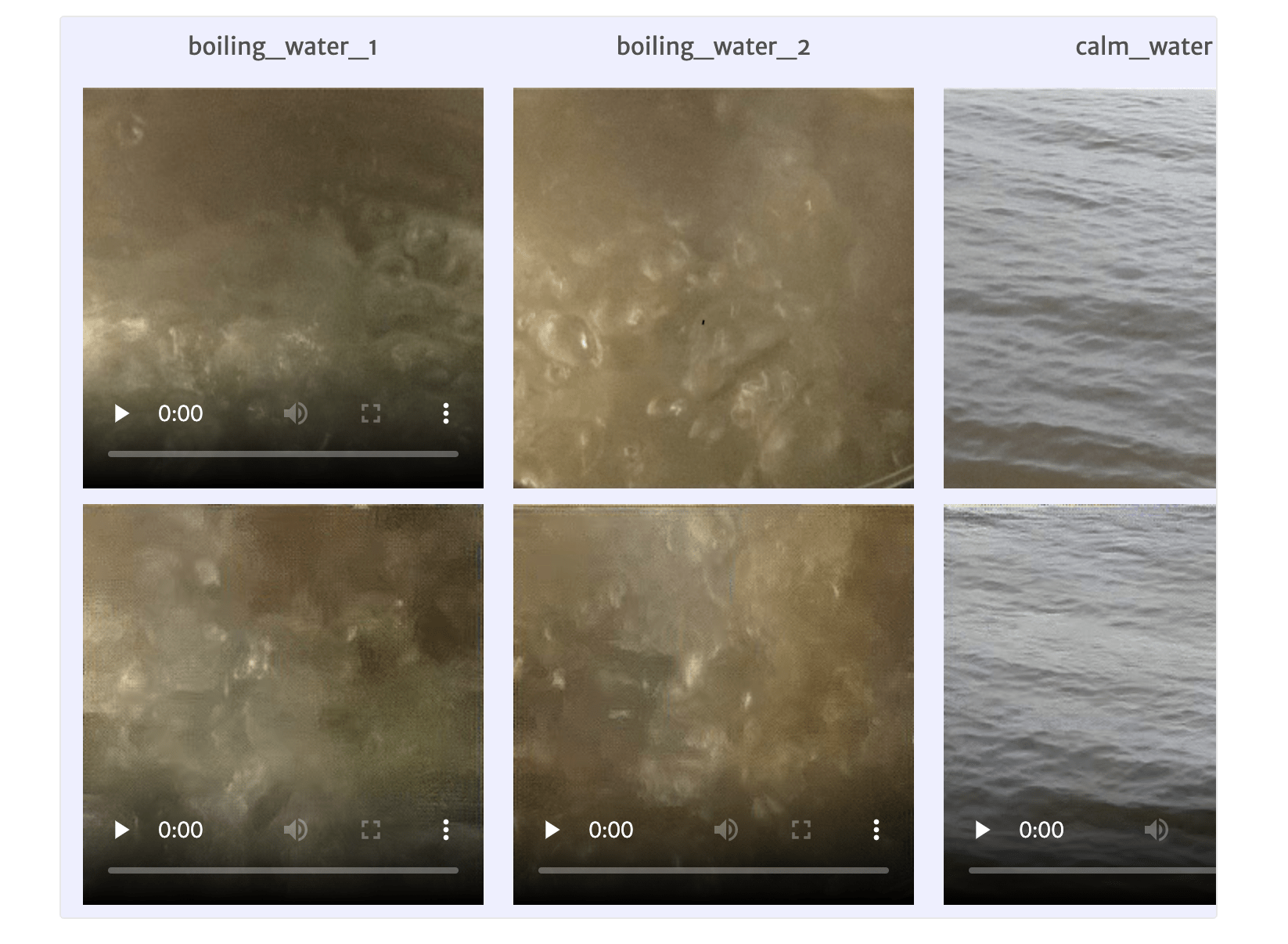

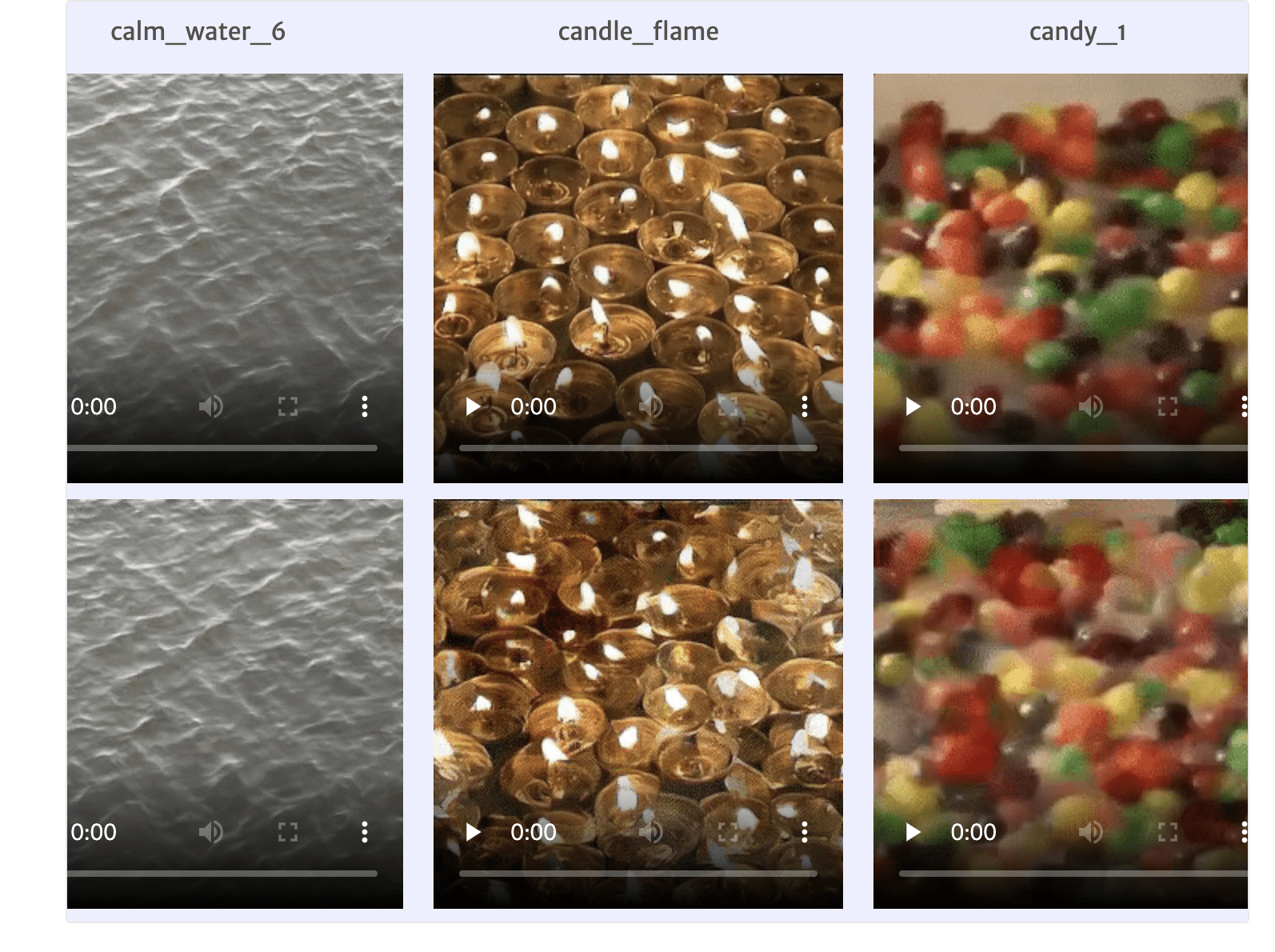

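This project uses two pre-trained convolutional networks (ConvNets) that target two independent tasks: object recognition and optical flow prediction. Given an input dynamic texture, statistics of the filter responses from the object recognition ConvNet encapsulate the appearance of each frame separately, while statistics from the optical flow ConvNet capture how the texture moves. To generate a new texture, the model starts from a randomly initialized sequence and optimizes it until its statistics in both streams match those of the input, building longer results out of several sub-sequences. A synthesized sequence typically has 12 frames. This is the basic process of generating a dynamic texture.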
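The statistics-matching idea can be sketched in Python with NumPy. This is a minimal sketch under loud assumptions, not the authors' code: the two pre-trained ConvNets are replaced by hypothetical fixed random 1x1 filter banks (`appearance_features`, `dynamics_features`), the "statistics" are Gram matrices of the filter responses averaged over the clip (the usual choice in neural texture synthesis), and the optimization loop itself is left out. It only shows how a candidate clip is scored against a target texture in both streams.

```python
import numpy as np

def gram(features):
    """Normalized Gram matrix of a (channels, H, W) feature map: channel co-activation statistics."""
    c, h, w = features.shape
    f = features.reshape(c, h * w)
    return f @ f.T / (h * w)

# Hypothetical stand-ins for the two pre-trained ConvNets: fixed random 1x1 filter banks + ReLU.
_rng = np.random.default_rng(0)
APPEARANCE_FILTERS = _rng.standard_normal((8, 3))  # one RGB frame -> 8 feature channels
DYNAMICS_FILTERS = _rng.standard_normal((8, 6))    # two stacked RGB frames -> 8 feature channels

def appearance_features(frame):
    """frame: (3, H, W). Stand-in for the object-recognition stream (looks at one frame)."""
    return np.maximum(np.einsum("kc,chw->khw", APPEARANCE_FILTERS, frame), 0.0)

def dynamics_features(frame_a, frame_b):
    """Two consecutive frames -> features. Stand-in for the optical-flow stream (looks at motion)."""
    pair = np.concatenate([frame_a, frame_b], axis=0)  # (6, H, W)
    return np.maximum(np.einsum("kc,chw->khw", DYNAMICS_FILTERS, pair), 0.0)

def texture_statistics(video):
    """video: (T, 3, H, W). Appearance and dynamics Gram matrices averaged over the clip."""
    app = np.mean([gram(appearance_features(f)) for f in video], axis=0)
    dyn = np.mean([gram(dynamics_features(video[t], video[t + 1]))
                   for t in range(len(video) - 1)], axis=0)
    return app, dyn

def two_stream_loss(video, target_stats):
    """Distance of every frame (appearance) and every frame pair (dynamics) from the target statistics."""
    target_app, target_dyn = target_stats
    app_loss = np.mean([np.sum((gram(appearance_features(f)) - target_app) ** 2) for f in video])
    dyn_loss = np.mean([np.sum((gram(dynamics_features(video[t], video[t + 1])) - target_dyn) ** 2)
                        for t in range(len(video) - 1)])
    return app_loss + dyn_loss

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    target = rng.random((12, 3, 16, 16))     # stand-in for a 12-frame input texture
    stats = texture_statistics(target)
    candidate = rng.random((12, 3, 16, 16))  # randomly initialized clip to be optimized
    print(two_stream_loss(candidate, stats))
```

In a real implementation the loss would be computed in an autodiff framework and the pixels of `candidate` updated by gradient descent until the loss is small; NumPy is used here only to keep the scoring step readable.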

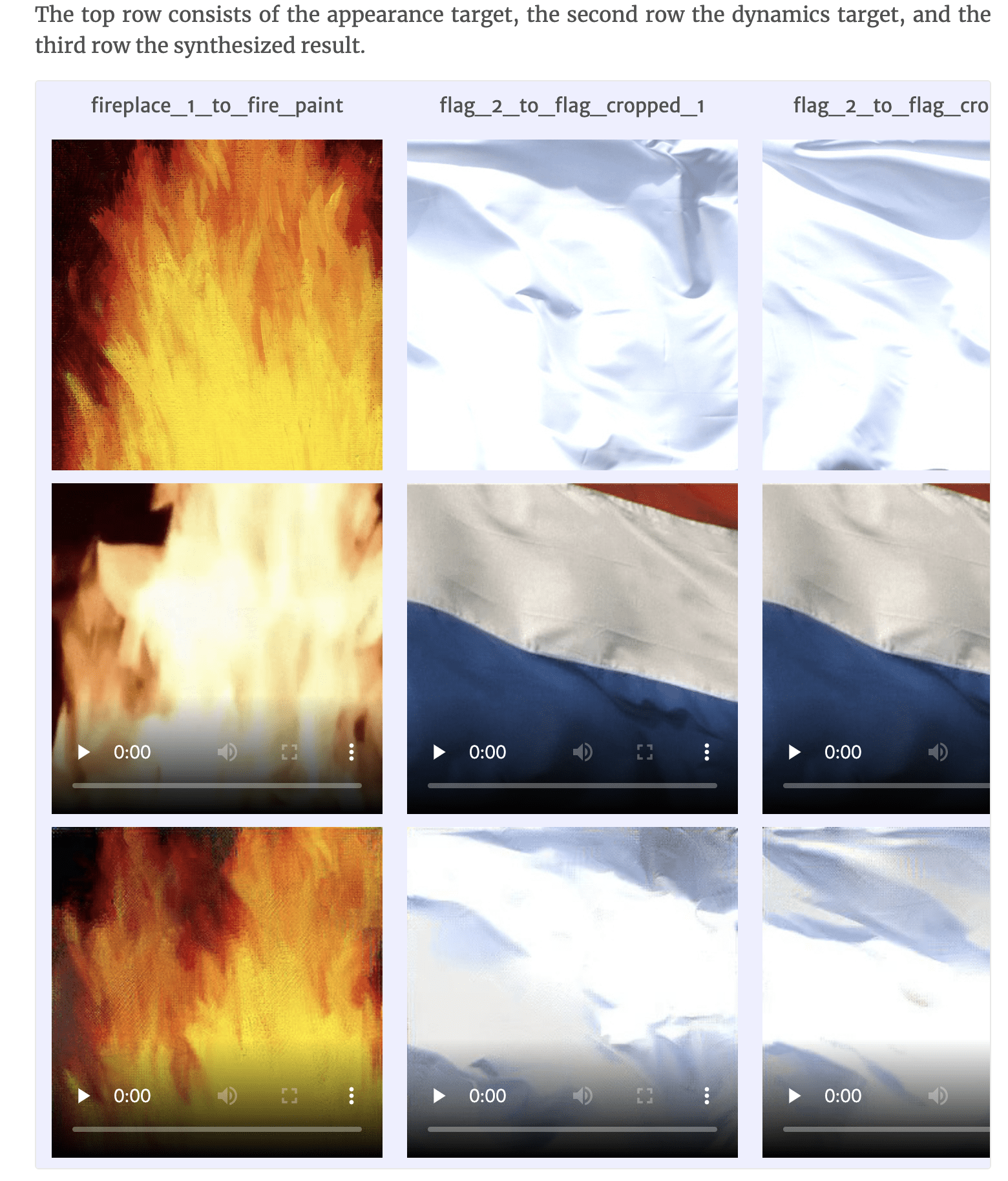

As this example shows, the painting-style water flow is the result of recognizing and synthesizing the real water-flow texture shown in the top middle.

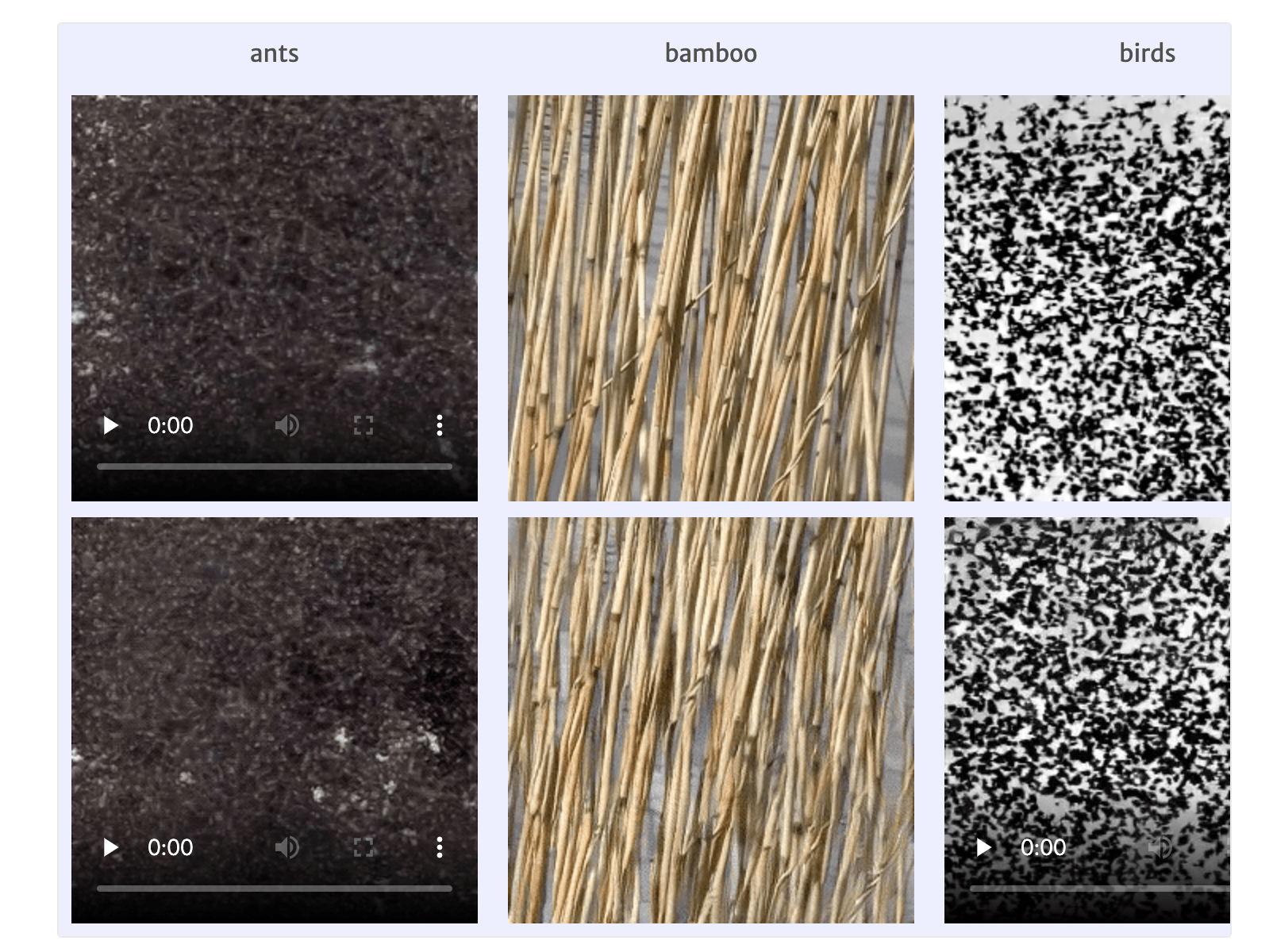

Data source: The authors applied their dynamic texture synthesis process to a wide range of textures, selected from the DynTex database as well as others collected in the wild. In total, they provide nearly 60 synthesized results.

To keep the synthesized dynamic texture from simply reproducing the original, each sub-sequence is initialized and then optimized. To keep consecutive sub-sequences consistent with each other, the first frame of a sub-sequence is the last frame of the preceding sub-sequence. As a result, the synthesized texture is visibly different from the original, yet it plays back smoothly in an endless loop.
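The chaining rule can be sketched as follows. This is a hedged sketch: `optimize` is a hypothetical callback standing in for the two-stream optimization of one sub-sequence, and re-pinning the shared first frame after optimization is an assumption about how that frame is kept consistent, not something taken from the authors' code.

```python
import numpy as np

def synthesize_in_subsequences(num_subsequences, optimize, frames_per_sub=12,
                               frame_shape=(3, 16, 16), seed=0):
    """Build a long clip from fixed-length sub-sequences that share boundary frames.

    `optimize(sub)` is a hypothetical callback that receives a (frames, C, H, W)
    array and returns the optimized sub-sequence.
    """
    rng = np.random.default_rng(seed)
    subsequences = []
    for _ in range(num_subsequences):
        sub = rng.random((frames_per_sub, *frame_shape))  # random initialization
        if subsequences:
            sub[0] = subsequences[-1][-1]  # start from the preceding sub-sequence's last frame
        sub = optimize(sub)
        if subsequences:
            sub[0] = subsequences[-1][-1]  # re-pin the shared frame in case the optimizer changed it
        subsequences.append(sub)
    # Drop the duplicated shared frame of every later sub-sequence when concatenating.
    video = np.concatenate([subsequences[0]] + [s[1:] for s in subsequences[1:]], axis=0)
    return subsequences, video

if __name__ == "__main__":
    subs, video = synthesize_in_subsequences(3, optimize=lambda s: s)  # no-op optimizer
    print(video.shape)
```

Because consecutive sub-sequences share a frame, k sub-sequences of 12 frames yield 12 + 11(k - 1) frames, and there is no jump at the seams.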

In addition, the model can transfer the dynamics of one texture onto another. As the project page says, “The underlying assumption of our model is that the appearance and dynamics of a dynamic texture can be factorized. As such, it should allow for the transfer of the dynamics of one texture onto the appearance of another”.
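The factorization in the quote is what makes transfer possible: the objective can take its appearance target from one clip and its dynamics target from another. Below is a minimal sketch assuming Gram-matrix statistics; `appearance_net` and `dynamics_net` are hypothetical callables standing in for the two pre-trained ConvNets, and `dynamics_weight` is an illustrative knob, not a parameter from the paper.

```python
import numpy as np

def gram(features):
    """Normalized Gram matrix of a (channels, ...) feature map."""
    f = features.reshape(features.shape[0], -1)
    return f @ f.T / f.shape[1]

def frame_pairs(video):
    """Consecutive (frame_t, frame_t+1) pairs of a (T, C, H, W) clip."""
    return zip(video[:-1], video[1:])

def transfer_loss(video, appearance_source, dynamics_source,
                  appearance_net, dynamics_net, dynamics_weight=1.0):
    """Score `video` against the appearance of one clip and the dynamics of another."""
    # The two target statistics come from two *different* textures.
    app_target = np.mean([gram(appearance_net(f)) for f in appearance_source], axis=0)
    dyn_target = np.mean([gram(dynamics_net(a, b)) for a, b in frame_pairs(dynamics_source)], axis=0)
    # Appearance is matched frame by frame, dynamics pair by pair.
    app_loss = np.mean([np.sum((gram(appearance_net(f)) - app_target) ** 2) for f in video])
    dyn_loss = np.mean([np.sum((gram(dynamics_net(a, b)) - dyn_target) ** 2)
                        for a, b in frame_pairs(video)])
    return app_loss + dynamics_weight * dyn_loss

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    painting = np.stack([rng.random((3, 8, 8))] * 5)  # static clip: one image repeated
    water = rng.random((5, 3, 8, 8))                  # stand-in for a moving texture
    identity = lambda frame: frame                    # hypothetical appearance "network"
    temporal_diff = lambda a, b: b - a                # hypothetical dynamics "network"
    print(transfer_loss(painting, painting, water, identity, temporal_diff))
```

A static painting matches its own appearance perfectly but has no motion, so it scores poorly on the dynamics borrowed from the water clip; optimizing the painting clip against this loss would push it to move like water while keeping its look.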

General feeling:

This project is a little different from the previous CV cases I studied. First, it does not rely on a camera; instead, its input is a texture (images or videos). It is a brilliant project: we can use this model to make something static (like a painting) dynamic by analyzing textures found in nature.
