– PROJECT GUIDELINE –

- Unconventional Interaction with KNN Classification: Create a unique, unconventional, artistic and/or practical interaction by developing a classification process with the KNN algorithm.

- Read the instructions in the script carefully and train the computer based on your idea by pressing the keys “0”, “1”, and “2”.

- Different scene(s) or animation(s) can be triggered and/or manipulated by the “resultLabel” variable from the KNN classifier.

- Requirements

- Utilize at least one ML model.

- It is recommended to use only one model considering the performance.

- Having user interaction is mandatory.

- Optimize the performance (i.e., attempt to keep the frame rate above 29 fps if possible).

– MY SKETCH –

– REFERENCE –

- KNN Classifier on ml5.js from Example Codes | Uses of Machine Learning Models

– DEVELOPMENT –

Greatly inspired by Prof. Moon’s in-class example, I decided to use machine learning-based techniques to realize user interaction. In particular, KNN classification from the ml5 library seemed to serve the purpose well.

Because the KNN algorithm classifies input into classes defined by the user, each class can trigger a different kind of interaction. Since I intended the lighting ball to be the users’ main avatar, the interaction design gradually took shape around detecting physical gestures to control that avatar.
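To make the classification step concrete, here is a minimal sketch of the KNN idea in plain JavaScript. It is only an illustration: the function name `knnClassify`, the two-number feature vectors, and the Euclidean distance are my own assumptions, and the ml5 classifier works on webcam features and may measure similarity differently.

```javascript
// Illustrative only: a tiny k-nearest-neighbours vote, not the ml5 implementation.
// `examples` is a non-empty array of { features: number[], label: string }.
function knnClassify(examples, query, k = 3) {
  // Distance from the query to every stored example (Euclidean, by assumption).
  const nearest = examples
    .map(({ features, label }) => ({
      label,
      dist: Math.hypot(...features.map((v, i) => v - query[i])),
    }))
    .sort((a, b) => a.dist - b.dist)
    .slice(0, k);

  // Count the labels of the k closest examples.
  const votes = {};
  for (const { label } of nearest) {
    votes[label] = (votes[label] || 0) + 1;
  }

  // Return the label with the most votes.
  return Object.keys(votes).reduce((best, label) =>
    votes[label] > votes[best] ? label : best
  );
}

// Hypothetical training data: two gestures described by two made-up features.
const demoExamples = [
  { features: [0.0, 0.0], label: "still" },
  { features: [0.1, 0.0], label: "still" },
  { features: [0.0, 0.1], label: "still" },
  { features: [1.0, 1.0], label: "up" },
  { features: [1.1, 1.0], label: "up" },
  { features: [1.0, 1.1], label: "up" },
];
console.log(knnClassify(demoExamples, [0.05, 0.05]));
```

The more examples each class has, the more stable the vote becomes, which is why each gesture is recorded many times during training.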

Therefore, after careful consideration and with Prof. Moon’s sample code as a reference, I decided to apply KNN classification to the webcam feed, so that users’ body movements would be captured and classified. I planned to move the avatar mainly through head movement, because I wanted to avoid the usual hand interaction with devices such as a mouse or keyboard. Moreover, head movement seemed more intuitive and convenient: users can move in front of the camera while still watching the avatar on screen.

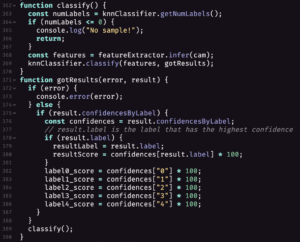

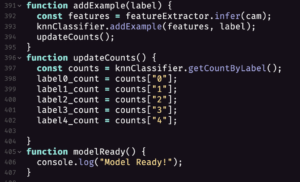

As the code above shows, I set five classes in total, each representing a different head movement: head up to move forward, head down to move backward, head to the left to move left, head to the right to move right, and head held still as the default. With this in place, users can control the avatar by moving their heads or stop it by staying still, much like “WASD” control on a keyboard but without touching anything by hand.
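The mapping from the classifier’s `resultLabel` to avatar movement can be sketched as a small lookup table. This is a sketch under assumptions rather than the code from the screenshots: the label names (`up`, `down`, `left`, `right`, `still`), the `stepAvatar` helper, and the `x`/`z` position fields are hypothetical, and the real sketch may use labels such as “0” to “4”.

```javascript
// Hypothetical label names; the original sketch may use "0".."4" instead.
const MOVES = {
  up:    { forward:  1, right:  0 }, // head up    -> move forward
  down:  { forward: -1, right:  0 }, // head down  -> move backward
  left:  { forward:  0, right: -1 }, // head left  -> move left
  right: { forward:  0, right:  1 }, // head right -> move right
  still: { forward:  0, right:  0 }, // default    -> stay in place
};

// Return the avatar's next position for one frame.
// Unknown labels fall back to "still" so a misread never moves the avatar.
function stepAvatar(position, resultLabel, speed = 1) {
  const move = MOVES[resultLabel] || MOVES.still;
  return {
    x: position.x + move.right * speed,
    z: position.z + move.forward * speed,
  };
}

// Example: one frame of "head up" at speed 2.
console.log(stepAvatar({ x: 0, z: 0 }, "up", 2));
```

Keeping the mapping in one table means a sixth gesture only needs one more entry.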

Although the movement worked, two problems remained:

- Every time I open the project, I need to train the classes again before I can interact with the avatar. Once the design is final, the trained classification could be saved to a JSON file and loaded automatically.

- Because head movement covers only a limited range compared with the 360 degrees of movement available in a virtual space, I might need to polish the design of the interaction system.
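For the first problem, a possible approach is sketched below. It assumes the ml5.js 0.x `KNNClassifier`, which provides `getNumLabels()`, `save()`, and `load()`; the helper names `saveClasses` and `loadClasses` and the file name `headKNN` are hypothetical, and I have not yet tested this in the project.

```javascript
// Hypothetical dataset name; ml5 0.x is expected to save it as "headKNN.json".
const DATASET_NAME = "headKNN";

// Download the current examples as a JSON file.
// Returns false when nothing has been trained yet, so an empty file is never saved.
function saveClasses(knnClassifier, name = DATASET_NAME) {
  if (knnClassifier.getNumLabels() === 0) {
    return false;
  }
  knnClassifier.save(name);
  return true;
}

// Restore previously saved examples so the sketch can classify right away.
// `onReady` is called by the classifier once loading has finished.
function loadClasses(knnClassifier, onReady, path = `./${DATASET_NAME}.json`) {
  knnClassifier.load(path, onReady);
}
```

In a p5.js sketch, `loadClasses` could be called once in `setup()` and `saveClasses` bound to a key press after training, with the downloaded JSON file placed next to the sketch.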