Project Name: Revealing

Video Demo:

Code Link:

https://drive.google.com/file/d/1zA6KRWj8FQQsm8KUQ7lpCYfGOBFPxctH/view?usp=sharing

Development Doc:

https://docs.google.com/document/d/1cx7WbF6syAdNrv-9CLh0WKJVK6rxhX3cA5MZjvfWTNg/edit#

Concept Development

Initially inspired by The Scream and Blow, I was thinking about [control], [information], and [distortion]. The three words remind me of the turbulence of the contemporary era: everyone is struggling in the turbulence caused by the information explosion, and a so-called “modern person” cannot live without a phone or laptop for even one day. However, it is important that we still keep a part of ourselves awake. Therefore, I wanted to create something that helps people find their calm inner self. Some of my classmates questioned this, since they were not sure how I would express the “inner self”. At that moment the only thing I had decided was to use voice input to control the visual effect, so the concept was still very much undecided.

I had long felt there was something contradictory in my project. When I discussed it with Professor Moon, he mentioned that it is important not only to keep yourself steady in the turbulence, but also to make your voice heard. You have to reveal yourself to avoid being flooded, which is another way of saying that you have to be yourself inside the information explosion. The technical part of my project echoes this concept as well.

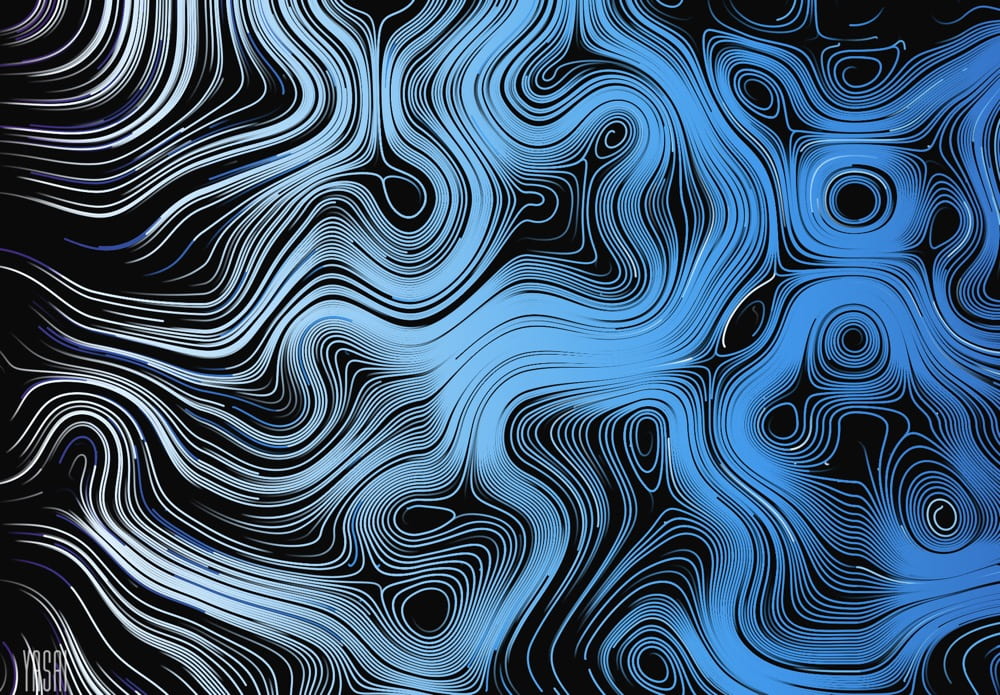

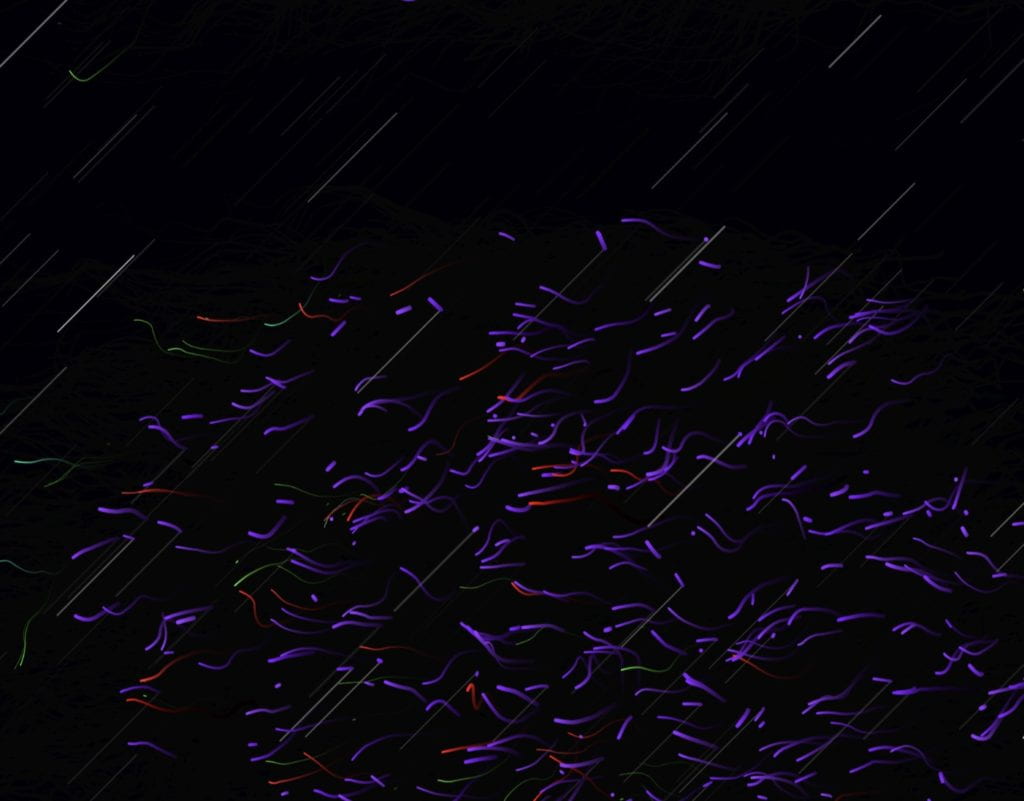

For the visualization, my inspiration mostly comes from Perlin Noise. The flow of the lines is so natural that it perfectly fits my expectation of how consciousness could be visualized. Therefore, I started to research Perlin Noise further.

Technical Development

Following my implementation plan, the development had three main parts:

Visualization -> Combination with voice input -> Finalization

Difficulties & Progress

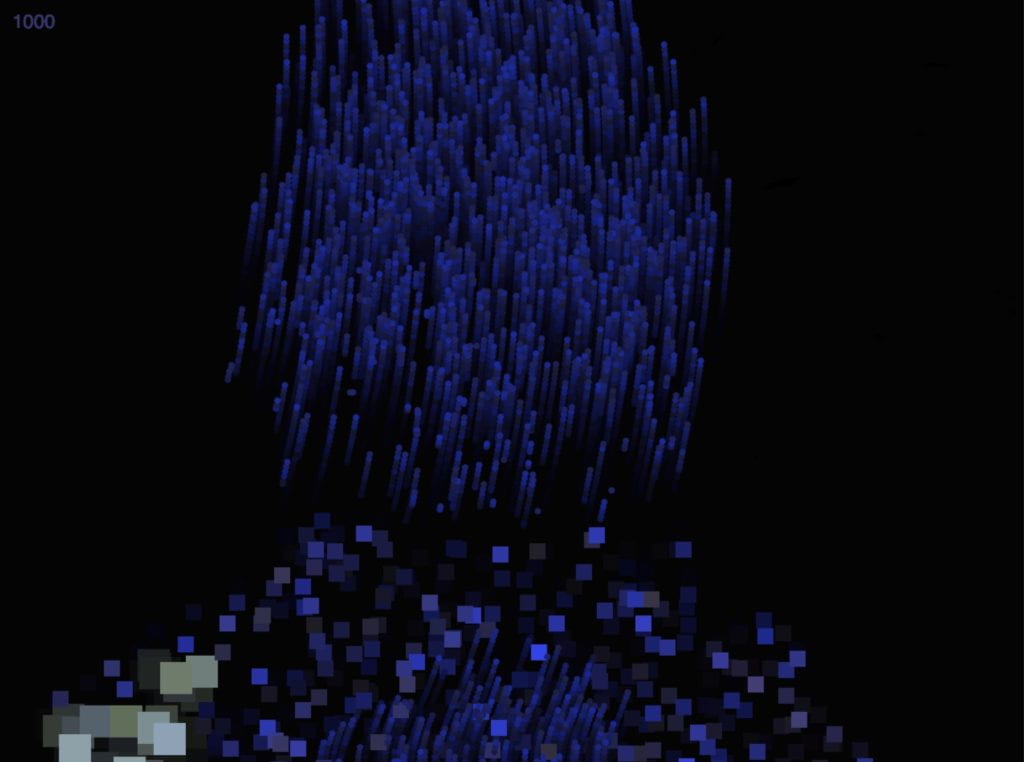

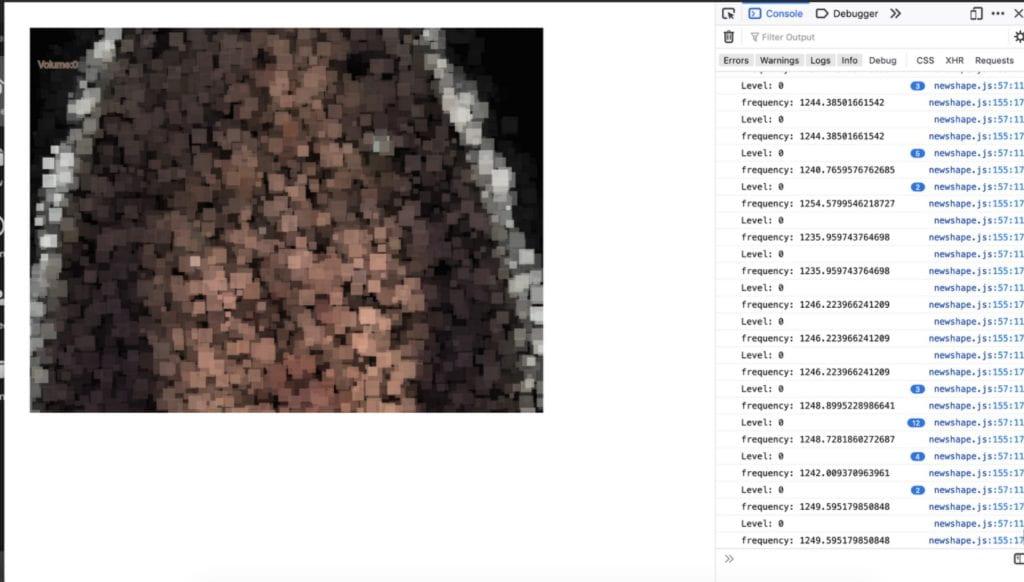

1. Perlin Noise! It is an amazing and beautiful pattern, but much more difficult to play with than I had thought. The first thing I had to do was integrate the Perlin noise pattern into the face area detected by BodyPix. I referred to Ellen’s midterm project and integrated the Perlin Noise pattern into the face area; however, the curves turned into straight lines and didn’t look good. It got even worse after I made some modifications.

During office hours, Moon pointed out that I had too many for loops in my code, which made everything very slow because my laptop had to calculate too many things in one run. He also created the function checkBodyPixArea() (even though we didn’t use it later) and stored the value inside the index function. Once the particles were pushed inside the corresponding if statement, everything became much easier to handle.
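The idea of checking the body area once and pushing particles inside the same if statement can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's actual code: the flat 0/1 mask array, the helper name `collectSpawnPoints`, and the `step` parameter are all hypothetical.

```javascript
// Hypothetical helper: scan a flat segmentation mask in a single pass and
// collect the grid points where particles may be spawned.
// mask  : array of length width * height, 1 = inside the face area, 0 = outside
// step  : sample every `step` pixels to keep the per-frame work small
function collectSpawnPoints(mask, width, height, step) {
  const points = [];
  for (let y = 0; y < height; y += step) {
    for (let x = 0; x < width; x += step) {
      const index = y * width + x; // row-major index into the mask
      if (mask[index] === 1) {
        // The particle is created right here, inside the if statement,
        // so no second loop over the mask is needed.
        points.push({ x, y });
      }
    }
  }
  return points;
}

// Tiny 4x2 example mask (assumed data, for illustration only)
const exampleMask = [
  0, 1, 1, 0,
  0, 0, 1, 0,
];
const spawnPoints = collectSpawnPoints(exampleMask, 4, 2, 1);
console.log(spawnPoints);
```

Sampling with a larger `step` trades detail for speed, which is one way to reduce the amount of work done per frame.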

2. The visual effect. As some peers commented, my concept was relatively ambiguous and hard to achieve. After solving the first difficulty, I started to think about what kind of effect I wanted. I asked Ellen for feedback on what I had, and she introduced me to her BodyPix assignment. I was inspired by its alpha effect and played around with different shapes and colors, but I was not satisfied with the result.

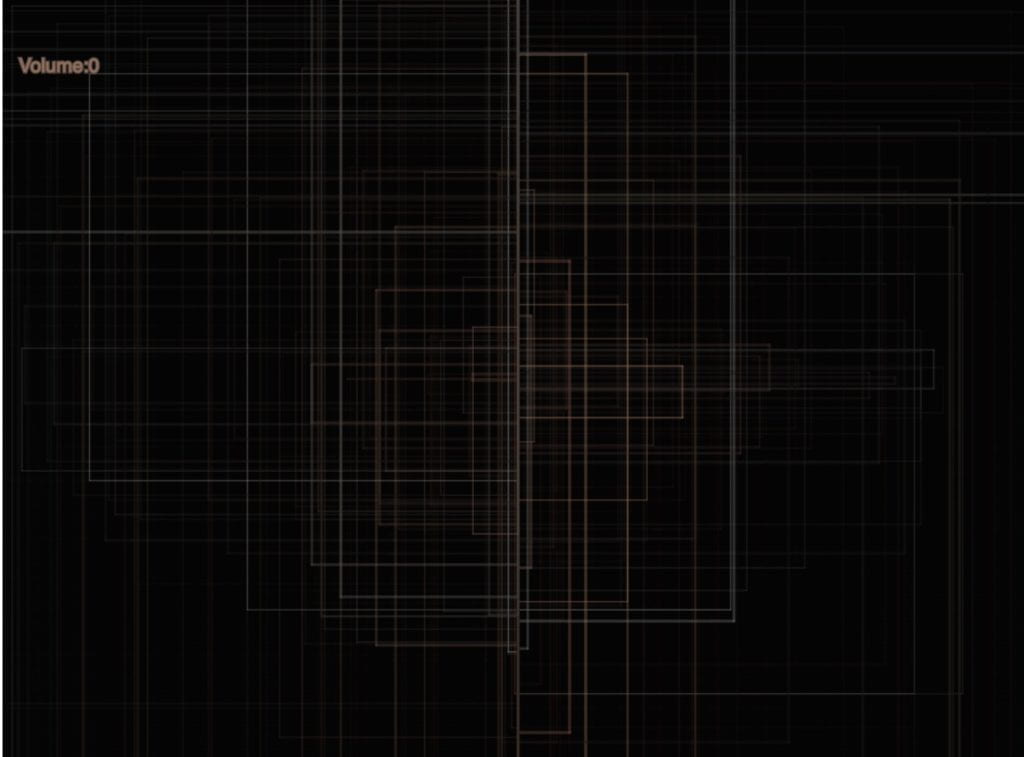

It didn’t look good so far. The Perlin noise in the background looked like bacteria cultured in a laboratory: realistic, but a little bit gross. The overall visual effect was not clean either, because I was too greedy: I wanted to include all kinds of elements to better embody the information explosion, but what I was making had itself become an explosion. I also played with the noise wave during the process, but in the end I decided to abandon it since it didn’t contribute much to the visual effect.

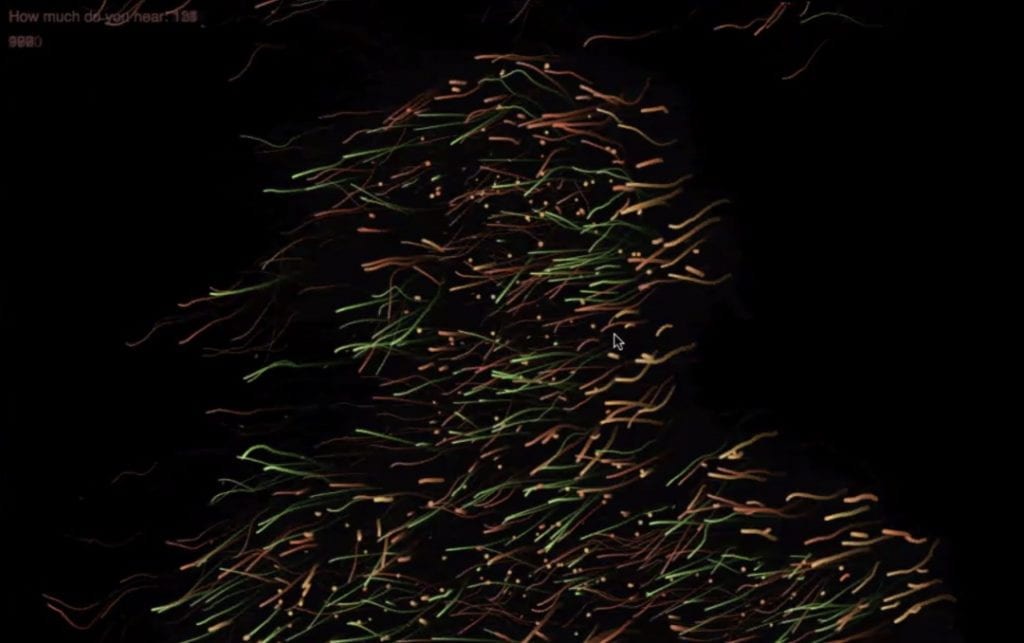

To improve the visual effect, Professor Moon suggested that I use hue color mode instead of a single color in RGB mode. The changing color made the visual much more varied and colorful. Moon also modified the parameters of the Perlin Noise so that everything flows more naturally and stays on the screen longer, making sure that there is still something to see when there is no obvious input. Now the visual effect looks more like people voicing their claims: each voice can be heard and is recorded.

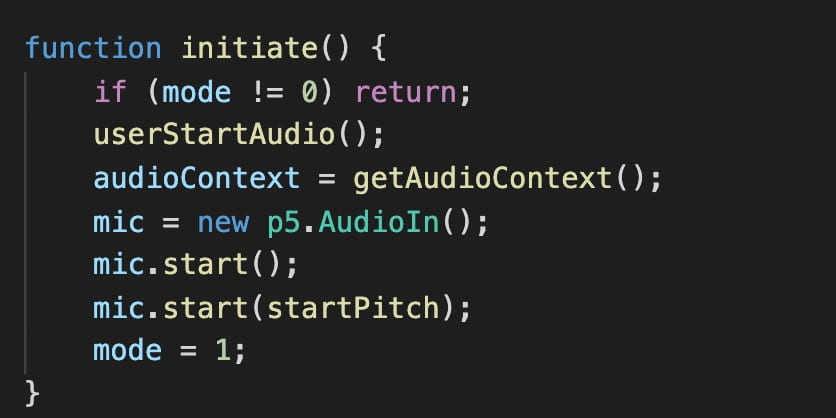
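The benefit of hue color mode is that a single changing number sweeps through the whole color wheel. The sketch below shows the standard HSB-to-RGB conversion together with a hypothetical `hueForFrame` helper; the function names, the drift speed, and the offset are assumptions for illustration. If the sketch is written in p5.js (also an assumption), `colorMode(HSB)` performs this conversion for you.

```javascript
// Convert an HSB color to RGB channels in the range 0..255.
// h    : hue in degrees (any number, wrapped into 0..360)
// s, b : saturation and brightness in the range 0..1
function hsbToRgb(h, s, b) {
  const hue = ((h % 360) + 360) % 360; // wrap negative or large hues
  const c = b * s;                     // chroma
  const hp = hue / 60;                 // position on the six-sector color wheel
  const x = c * (1 - Math.abs((hp % 2) - 1));
  const m = b - c;                     // amount added to match the brightness
  let rgb;
  if (hp < 1) rgb = [c, x, 0];
  else if (hp < 2) rgb = [x, c, 0];
  else if (hp < 3) rgb = [0, c, x];
  else if (hp < 4) rgb = [0, x, c];
  else if (hp < 5) rgb = [x, 0, c];
  else rgb = [c, 0, x];
  return rgb.map((v) => Math.round((v + m) * 255));
}

// Hypothetical helper: let the hue drift with the frame count so that
// particles drawn at different moments get different colors.
function hueForFrame(frameCount, offset, speed) {
  return (frameCount * speed + offset) % 360;
}

console.log(hsbToRgb(hueForFrame(0, 0, 1), 1, 1));   // pure red at frame 0
console.log(hsbToRgb(hueForFrame(120, 0, 1), 1, 1)); // pure green at frame 120
```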

3. Sound input. Following my initial proposal, I decided to use the CREPE model and the detected frequency as my input. But using only the CREPE model is too monotonous and cannot reflect the information explosion very well, since the most intuitive expression of that explosion is how loud people are. However, the amplitude input did not work, even though I checked it multiple times. During the final meeting, Moon still suggested that I use the amplitude, and he added an initiate function that helps the browser check that I have started running the code. Later I also figured out that amplitude-related input only works in Chrome but not in Firefox, while the CREPE model works much better in Firefox. It is embarrassing, but I still don’t know why 🙁
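Combining frequency and loudness as two separate inputs can be sketched as below. Loudness is estimated here as the root-mean-square of a buffer of audio samples, which is one common definition and not necessarily what the project's audio library computes. The names `rmsAmplitude` and `voiceToVisual`, the mapping to hue and particle count, and the constant 50 are all hypothetical; the 2000 Hz ceiling is only borrowed from the rough upper pitch limit mentioned later in this post.

```javascript
// Root-mean-square amplitude of a buffer of samples in the range -1..1.
function rmsAmplitude(samples) {
  if (samples.length === 0) return 0; // silence for an empty buffer
  let sumOfSquares = 0;
  for (const s of samples) {
    sumOfSquares += s * s;
  }
  return Math.sqrt(sumOfSquares / samples.length);
}

// Hypothetical mapping from voice features to visual parameters:
// pitch controls the hue, loudness controls how many particles appear.
function voiceToVisual(frequencyHz, amplitude) {
  const clamp01 = (v) => Math.min(1, Math.max(0, v));
  return {
    hue: clamp01(frequencyHz / 2000) * 360,
    particleCount: Math.round(clamp01(amplitude) * 50),
  };
}

const loudness = rmsAmplitude([0.5, -0.5, 0.5, -0.5]);
console.log(voiceToVisual(1000, loudness));
```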

4. Performance & Experience. Initially, I was planning to design a game-like experience with a noticeable, official beginning and end. However, Professor Moon advised that an experience without an end would be more immersive for the audience. Regarding the performance area, I first tried to run everything at the full window size, which made everything very slow, and my laptop could not sustain a long live show. Mapping the normal camera size (320×240) to the whole screen gives the same visual quality with much better performance.
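Running the model at camera resolution and only scaling the results up for drawing can be sketched like this. The helper name `mapToScreen` and the 1280×720 screen size are assumptions for the example; only the 320×240 camera size comes from the project.

```javascript
// Map a point from camera coordinates to screen coordinates.
// The heavy work (segmentation, particle updates) stays at the small
// camera resolution; only the final positions are scaled up.
function mapToScreen(x, y, srcWidth, srcHeight, dstWidth, dstHeight) {
  return {
    x: (x / srcWidth) * dstWidth,
    y: (y / srcHeight) * dstHeight,
  };
}

// Center of a 320x240 camera frame mapped onto an assumed 1280x720 screen
const center = mapToScreen(160, 120, 320, 240, 1280, 720);
console.log(center);
```

With these sizes, the loop over the camera frame touches 76,800 pixels instead of the 921,600 pixels of the full screen, a twelvefold reduction.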

Future Improvement

As mentioned in the development doc, there are two main aspects I want to develop further.

1. According to audience feedback from the IMA Show, some people didn’t realize that what was on the screen was themselves. The delay of the model and the variety of patterns confused them a little, and the result didn’t convey a real-time body shape. I would like to separate the different parts of the visual effect slightly so that the audience can recognize their body shapes more easily, or, as some of my peers suggested, add an instruction page or voice prompt before the experience starts.

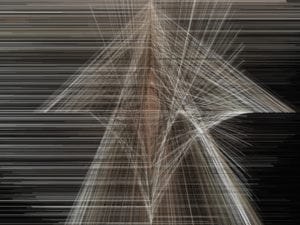

2. Even though it is now more of an experience than a game, I still have a small bonus scene: when your pitch is higher than 1500 Hz (normal speech generally stays below 600 Hz, and the highest pitch a human can produce seems to be around 2000 Hz), you have a chance of generating lines on the screen. I wanted to convey that you shouldn’t be too loud when you speak up, and that you should leave some space for others to express their ideas. Listening is still important in the information explosion. It is not the main part, but I think I can develop it further as well.
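The bonus scene can be sketched as a simple threshold check on the detected pitch. The 1500 Hz threshold comes from the description above; reading "a chance" as a probability, the default value of 0.5, and the name `shouldSpawnBonusLine` are assumptions made for this example.

```javascript
const BONUS_PITCH_HZ = 1500; // threshold mentioned in the text

// Hypothetical helper: decide whether a bonus line is drawn this frame.
// frequencyHz : pitch reported by the pitch detection model
// randomValue : a number in 0..1, e.g. from Math.random()
// chance      : assumed probability of drawing a line once above the threshold
function shouldSpawnBonusLine(frequencyHz, randomValue, chance = 0.5) {
  if (frequencyHz <= BONUS_PITCH_HZ) {
    return false; // ordinary speech never triggers the bonus
  }
  return randomValue < chance;
}

console.log(shouldSpawnBonusLine(600, Math.random()));  // always false: below the threshold
console.log(shouldSpawnBonusLine(1600, Math.random())); // true or false, depending on the random value
```

Passing the random value in as an argument keeps the function deterministic, which makes it easier to test.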

Thanks to all my peers who gave me suggestions and helped with the user test, and many thanks to Professor Moon! You are really one of the best teachers I have ever met in my life. I know I am not a coding-savvy person (I am not even good at it), but you always inspire me a lot, whether it is about coding knowledge, concepts, or even ways of learning. I wish you all the best and hope to see you next semester! 대단히 감사합니다! (Thank you very much!)