EmoSynth for Sensorium AI

EmoSynth: A combination of ‘Emotion’ and ‘Synthesizer’, highlighting the device’s ability to synthesize emotional expressions.

Designers

- Junru Chen

- Lang Qin

- Emily Lei

- Kassia Zheng

- Muqing Wang

Final Presentation

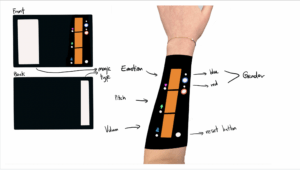

Project Description

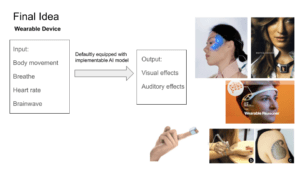

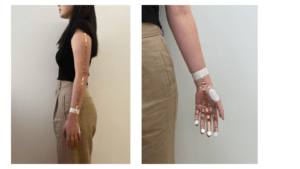

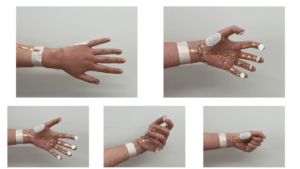

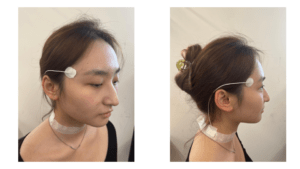

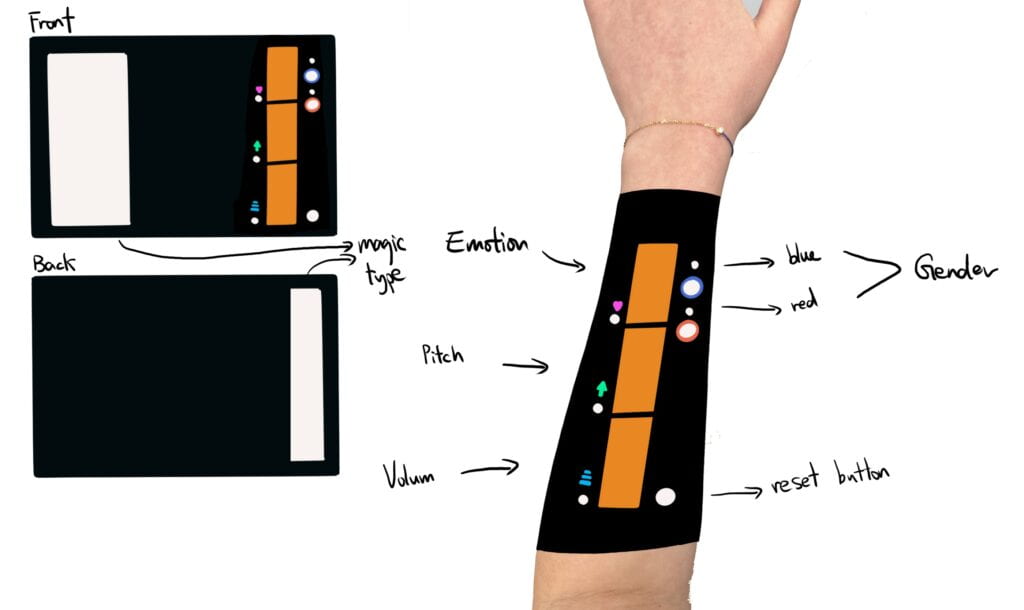

“We are the Sensorium AI Group from the Multisensory class. Working with Sensorium Ex, Luke, Michael, and our professor, Lauren, we designed a wearable device. To fulfill the design objective of facilitating real-time artistic expression and modulation for performers with cerebral palsy and/or limited speech, our device lets the user freely select the emotion they want to express and its intensity level. The user can also choose between a high and a low pitch to represent diverse genders, and a reset button prevents accidental touches during movement on stage. Each selection on the device is clearly indicated by an embedded LED hint to better inform the user.”
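The selection logic described above can be sketched as a small state model. This is only an illustration, not the team's Arduino code: the emotion names, the number of intensity levels, and every identifier (`EmoSynthState`, `led_hints`, and so on) are assumptions made for the example, since the source does not list them.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical values: the source does not name the emotions or the level count.
EMOTIONS = ("joy", "sadness", "anger")
MAX_LEVEL = 3
PITCHES = ("low", "high")


@dataclass
class EmoSynthState:
    """Current selections on the device (illustrative model only)."""

    emotion: Optional[str] = None  # None means no emotion is selected
    level: int = 0                 # 0 means no intensity is selected
    pitch: str = "low"

    def select_emotion(self, emotion: str) -> None:
        if emotion not in EMOTIONS:
            raise ValueError(f"unknown emotion: {emotion}")
        self.emotion = emotion

    def set_level(self, level: int) -> None:
        # Clamp to the supported range instead of rejecting the input.
        self.level = max(0, min(MAX_LEVEL, level))

    def toggle_pitch(self) -> None:
        self.pitch = "high" if self.pitch == "low" else "low"

    def reset(self) -> None:
        # Clear every selection, e.g. after an accidental touch.
        self.emotion = None
        self.level = 0
        self.pitch = "low"

    def led_hints(self) -> Dict[str, bool]:
        """Map each selectable option to whether its LED should be lit."""
        hints = {f"emotion:{e}": e == self.emotion for e in EMOTIONS}
        hints.update({f"level:{n}": n <= self.level for n in range(1, MAX_LEVEL + 1)})
        hints.update({f"pitch:{p}": p == self.pitch for p in PITCHES})
        return hints
```

Keeping all selections in one object means the reset button only has to restore a single known state, and the LED hints can always be derived from that state rather than tracked separately.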

Timeline

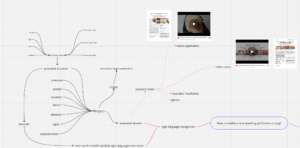

- 09/14/2023: Primary research (reference cases and inspiration)

- 09/21/2023: Ideas brainstorm and sketch

- 09/28/2023: Finalized our project idea

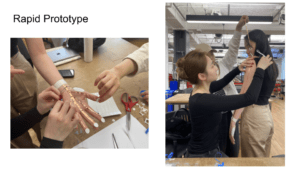

- 10/05/2023: Developed first quick prototype

- 10/12/2023: User testing with the quick prototype

- 10/19/2023: Implementation of user testing result

- 10/26/2023: Finalize the sensors and buy materials

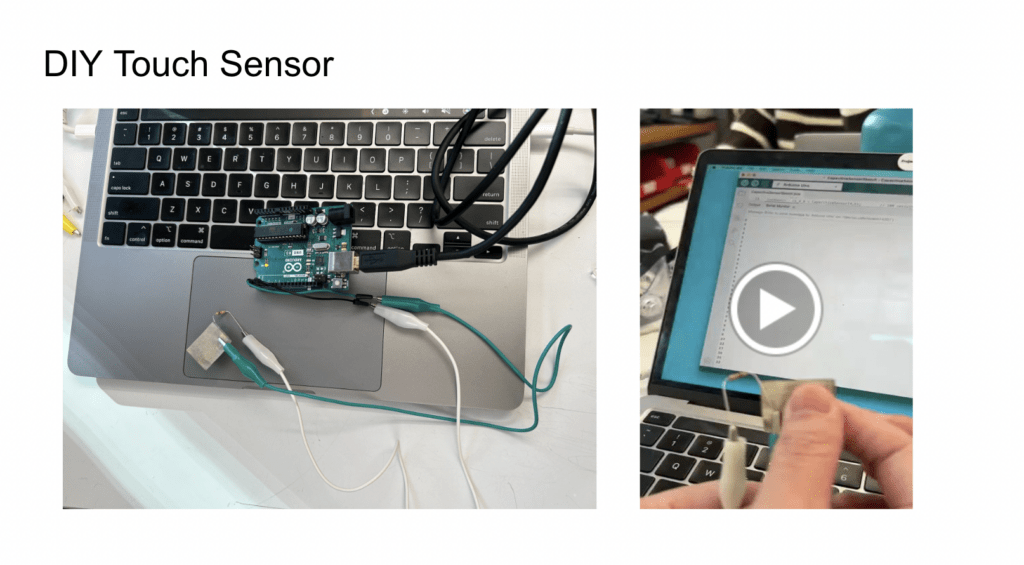

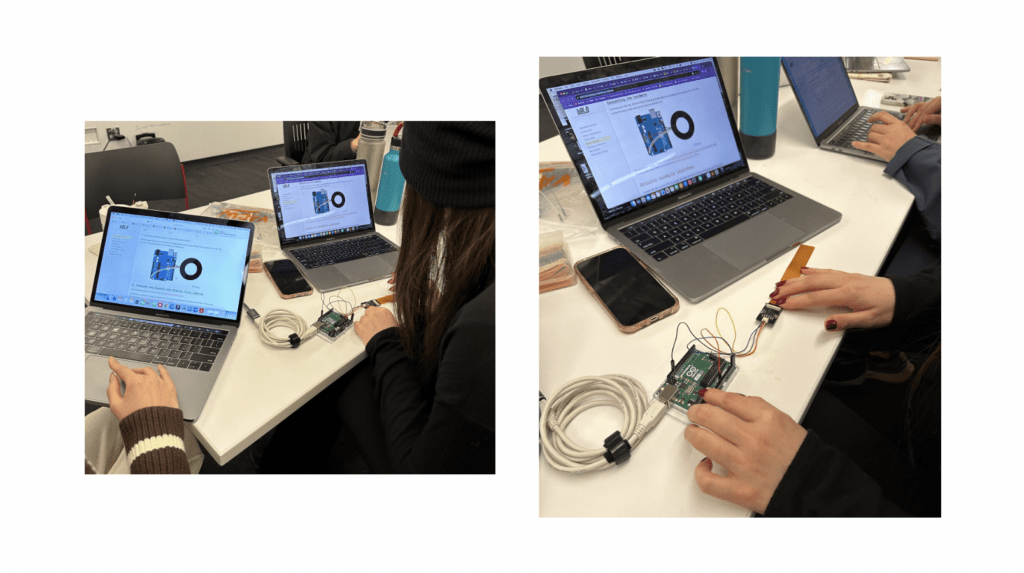

- 11/02/2023: Prototyping with the sensors (input signal)

- 11/09/2023: Start to work on the visual and audio output

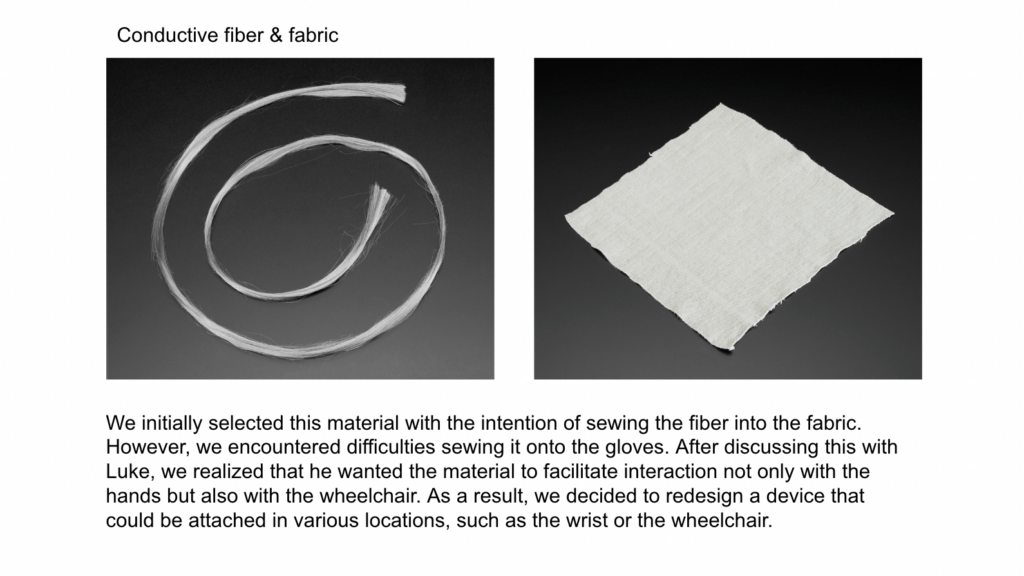

- 11/16/2023: Finalize our soft material and the functions of each button

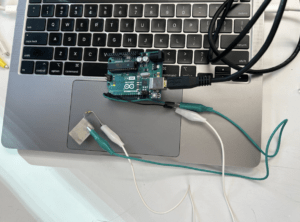

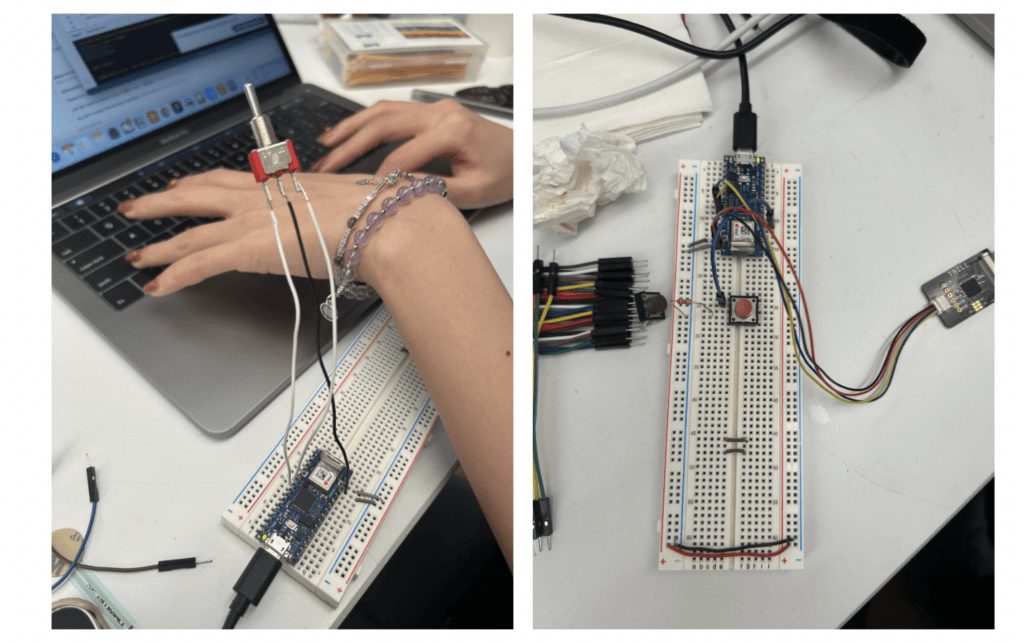

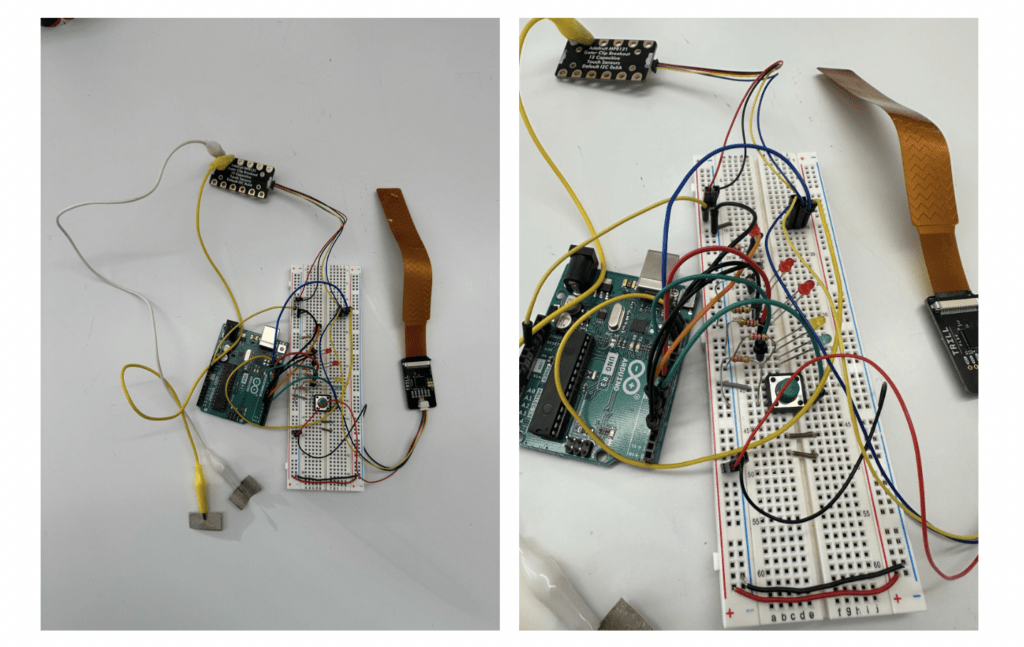

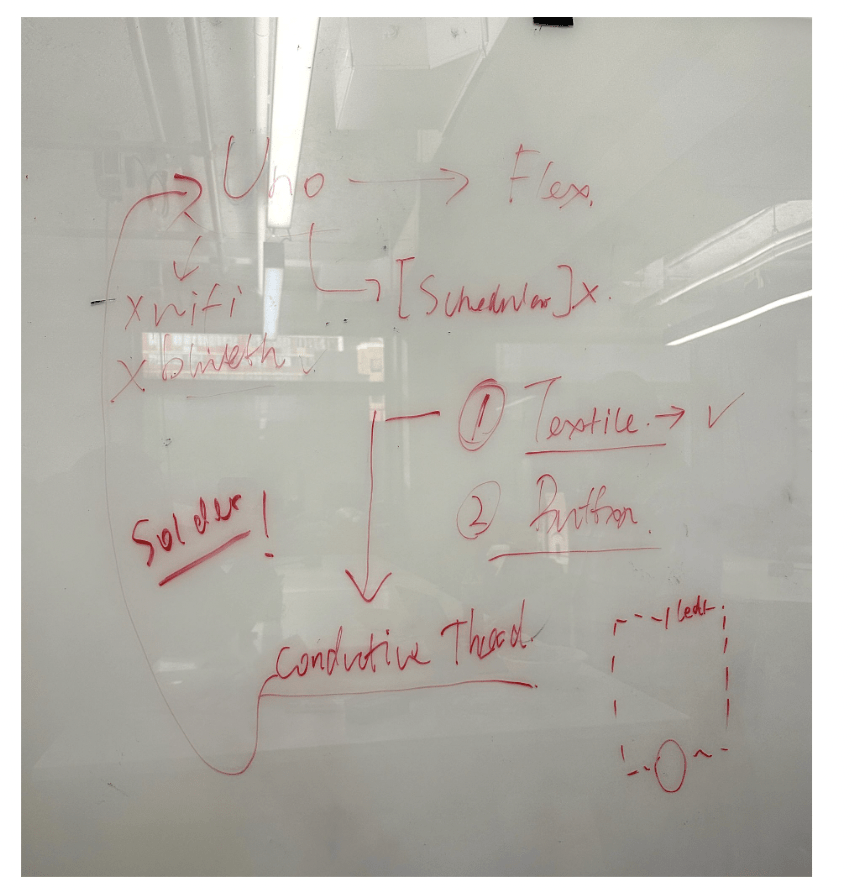

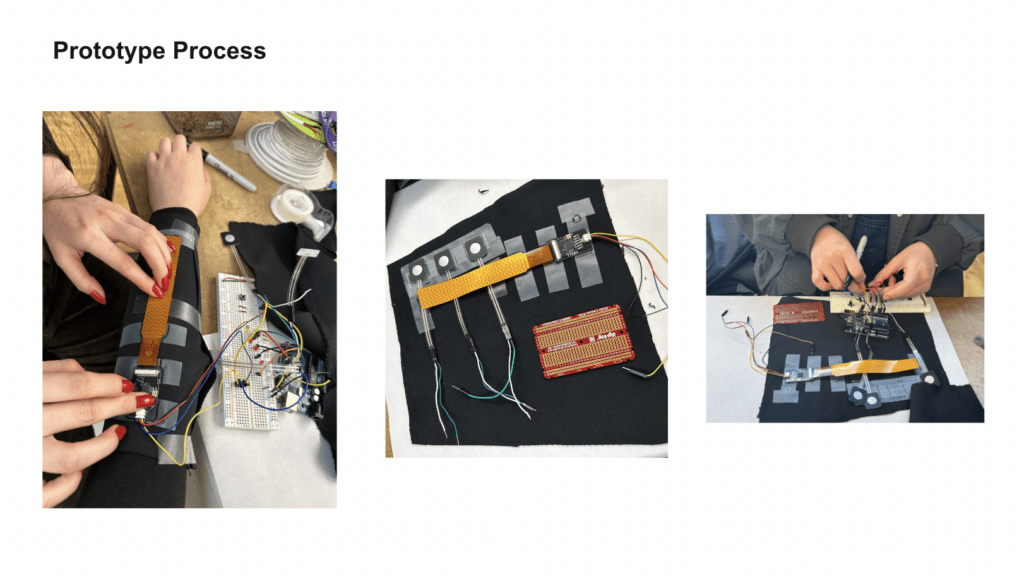

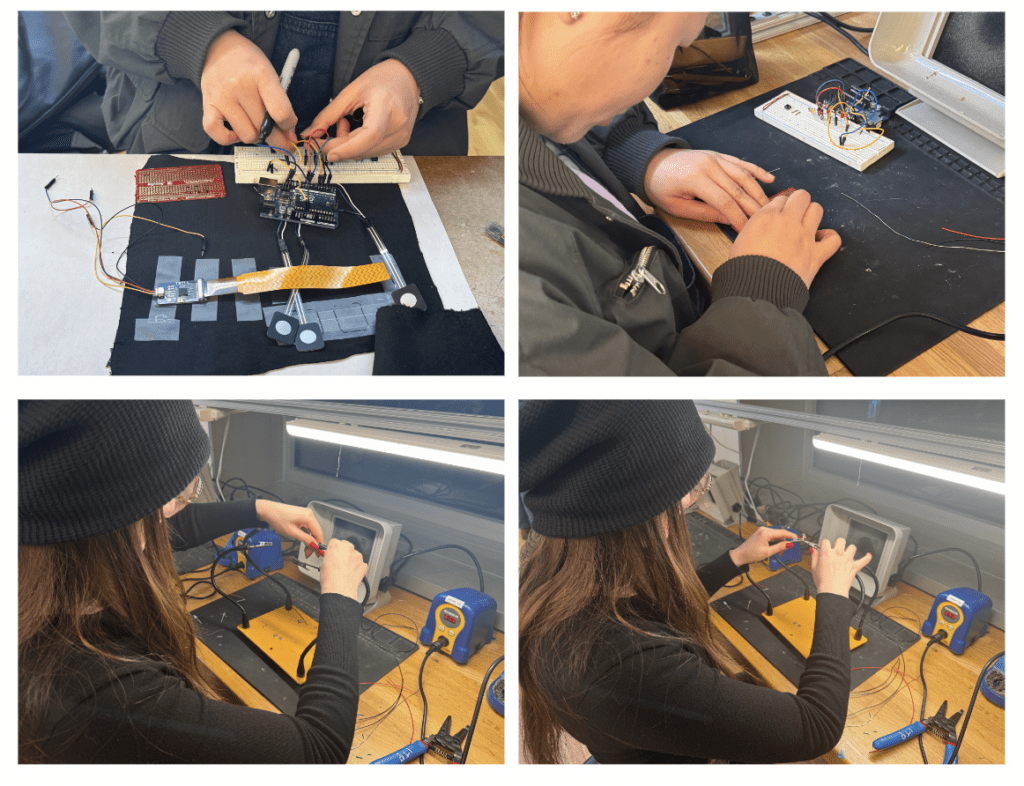

- 11/30/2023: Connect flex sensors, lights and buttons with the Arduino

- 12/07/2023: Finalized the fabrication part

- 12/14/2023: Documentation and final testing/troubleshooting.

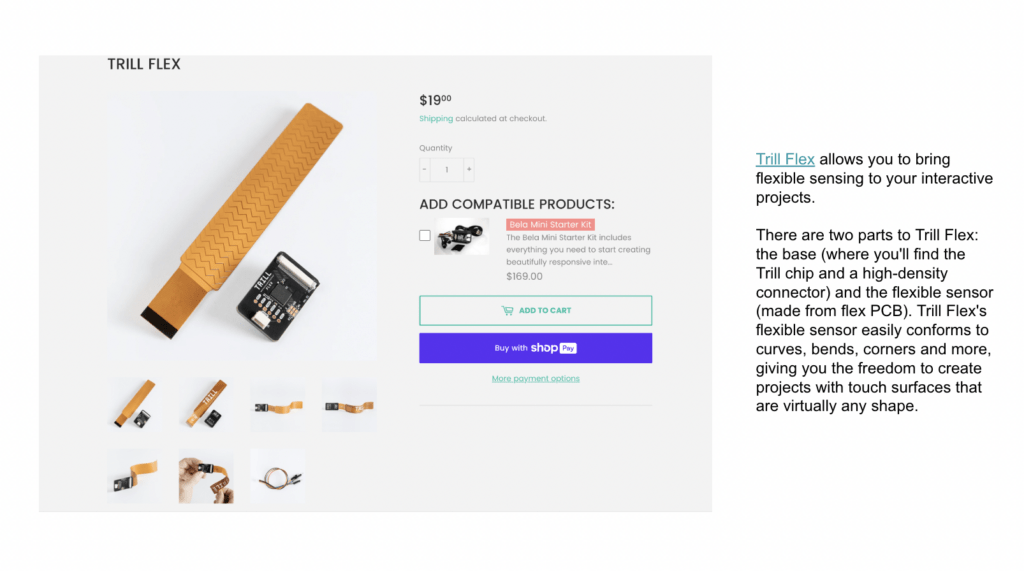

Weekly Updates

Click here for our Google Slides Weekly Presentation link (66 pages)

Click here for our Final Google Slides Presentation link (15 pages)

09/14/2023

Reference

Inspiration 1: http://xhslink.com/6NqCyu

Inspiration 2: https://www.media.mit.edu/projects/alterego/overview/

Inspiration 3: https://www.deafwest.org/

Other Possibilities:

Emotion AI: https://www.affectiva.com/about-affectiva/

EMOTIC Dataset- Context Analysis: https://s3.sunai.uoc.edu/emotic/index.html

Brainwave: https://prezi.com/p/fvxrqad30mjq/music-from-brainwaves/

09/21/2023

Background:

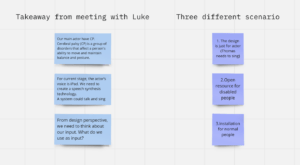

Takeaways from meeting with Luke

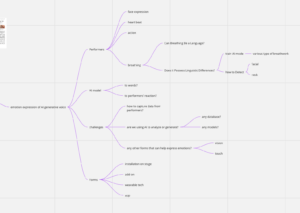

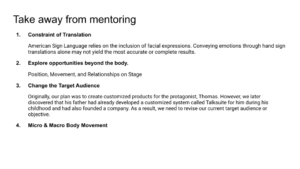

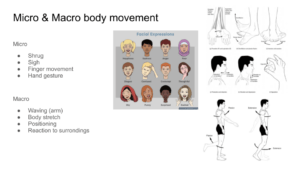

Based on our target user, Thomas, and the general audience, we have proposed two ideas: sign language translation and alternative emotional expression.

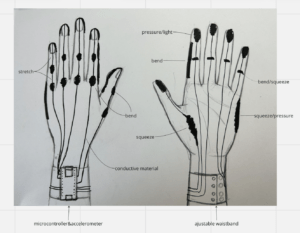

“Translation” of sign language

Sketch:

Alternative Emotional Expression

Notes from Crit:

- Invisible and visible design

- Thomas’s frequent language/issues

- Pre-set device? Technical issues.

- The generated voice is more vivid than the machinery voice.

- How to train the AI model?

- Product or part of art/opera?

- Connect to Thomas.

09/28/2023

10/05/2023

10/12/2023

Hypothesis

An educated guess on what you think the results of the study will be.

If we can create a wearable device that recognizes sign language and analyzes the user’s emotions with an AI model, then it may help users communicate effectively with people who do not understand sign language. In the case of Sensorium AI, with pre-set movements, content, and voice output, it may allow Thomas to use sign language as a trigger and have it translated into voice while he is performing on stage.

Research Goals

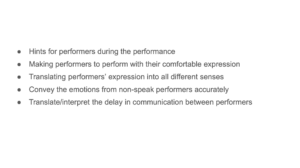

- Help individuals with speech impairments communicate with others and express their emotions more effectively.

- Focus specifically on opera performers with speech impairments, helping them express emotions effectively and in a timely manner on stage.

Methodology

Number of participants: 5

Location: NYC

Age range: not limited

Gender: not limited

Disability Identity: speech impairments

Experience: prefer people with performance experience

Procedure

Data collection: Bodystorming, feasibility testing

Length: approximately 30 mins

Location: unknown

Testing Script

Informed Consent

Hi, my name is <XX>. Today we would like to invite you to participate in a research study designed to explore the potential benefits of wearable devices in helping non-verbal performers convey content and emotions on stage. Before you decide whether or not to participate, it is important for you to understand the purpose of the study, your role, and your rights as a participant.

Please let me know if you’d like a break, have any access requirements, or need any accommodations. There are no right or wrong answers and we can stop at any point. Do I have your permission to video and audio record? Quotes will be anonymized and all data will only be shared internally with my team, stored on a secure, password-protected cloud server, and will be deleted after the study is completed.

General questions:

- What’s your first impression of this wearable device?

- Do you feel any barriers from this device?

- Will anything from this device affect the costumes and stage design?

- What is the safest way to have this device attached to you by considering your interactions with other performers on the stage?

User Task Feedback:

Mary:

It will be cool to wear on stage.

There might be some kind of conflict between recognizing movements and the freedom of performers on the stage.

To ensure the wearable device works, I might need to move in a very specific way.

Maybe the speed of movement should be considered.

Cindy:

This looks very futuristic.

There might be some unintended activation.

Nicole:

The appearance of this device kinda aligns with the story background.

The device may make the performer concerned that their own movements will damage the device so that they cannot perform as well as they used to.

I am concerned about the learning curve of this device.

Are there any tips I need to learn or remember when wearing this device?

Josh:

Will sweating and makeup influence the circuit?

Potential risk to the wearer’s physical safety, since it’s an electronic device.

Fanny:

Is it a solo device for each individual? Building relationships is inevitable on stage and in opera, so multi-player use might be a concept you can dive into.

Edward:

I really like the design because it expands the expression of dancing and movement on stage. As dancers who perform in large theaters, we are concerned about how to reach audience members far from the stage. I think the design would help us express and visualize our emotions more dramatically. I am really looking forward to your next step! My one suggestion is to pay attention to comfort as well as the beauty of the design. It’s hard to imagine wearing a winter glove all the time while dancing. Find a breathable material and try it on yourself for several hours before you decide to use it.

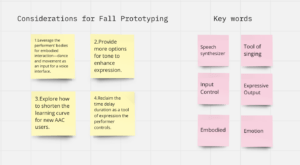

10/19/2023

Comments on the form:

It will be cool to wear on stage.

This looks very futuristic.

The appearance of this device kinda aligns with the story background.

Potential Constraints in the Performance

There might be some kind of conflict between recognizing movements and the freedom of performers on the stage.

To ensure the wearable device works, I might need to move in a very specific way.

The device may make the performer concerned that their own movements will damage the device so that they cannot perform as well as they used to.

Damage Concerns

There might be some unintended activation.

Will sweating and makeup influence the circuit?

Potential risk to the wearer’s physical safety, since it’s an electronic device.

Learning Process

I am concerned about the learning curve of this device.

Are there any tips I need to learn or remember when wearing this device?

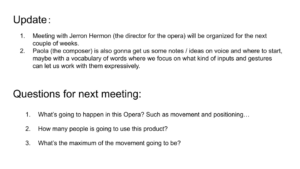

Our questions for the weekly meeting with Luke, Jerome, and Michael

- What’s going to happen in this opera, such as movement and positioning?

- How many people are going to use this product?

- What’s the maximum amount of movement going to be?

- Can this prototype be used in this opera with some movements preset into the performance?

Takeaways from meeting with Luke, Jerome, and Michael

Regulation

Number of users: 10-15 performers

Suggestion:

Maybe the speed of movement should be considered?

Takeaways from in-person hack with Luke

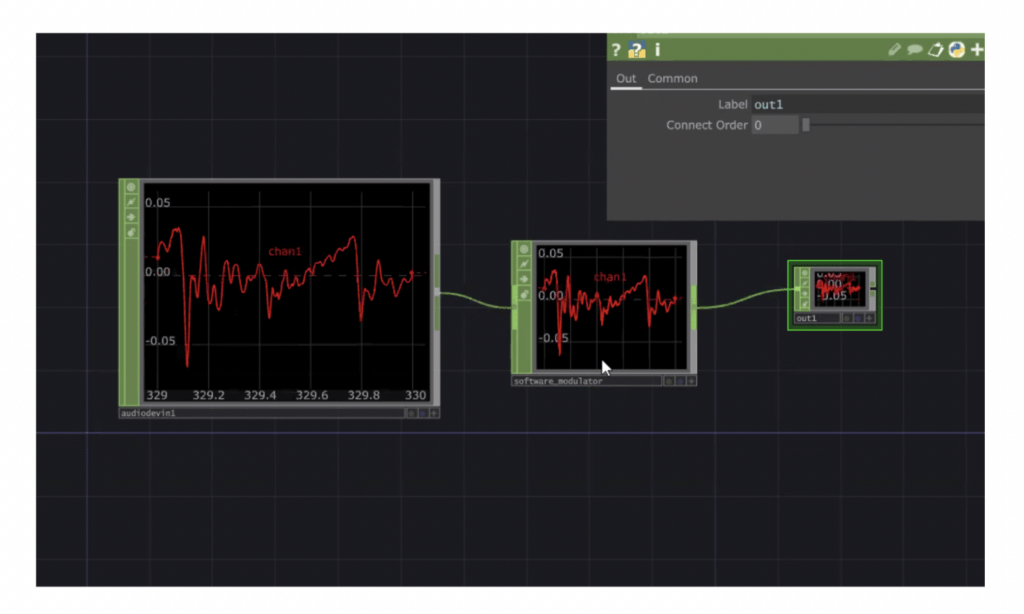

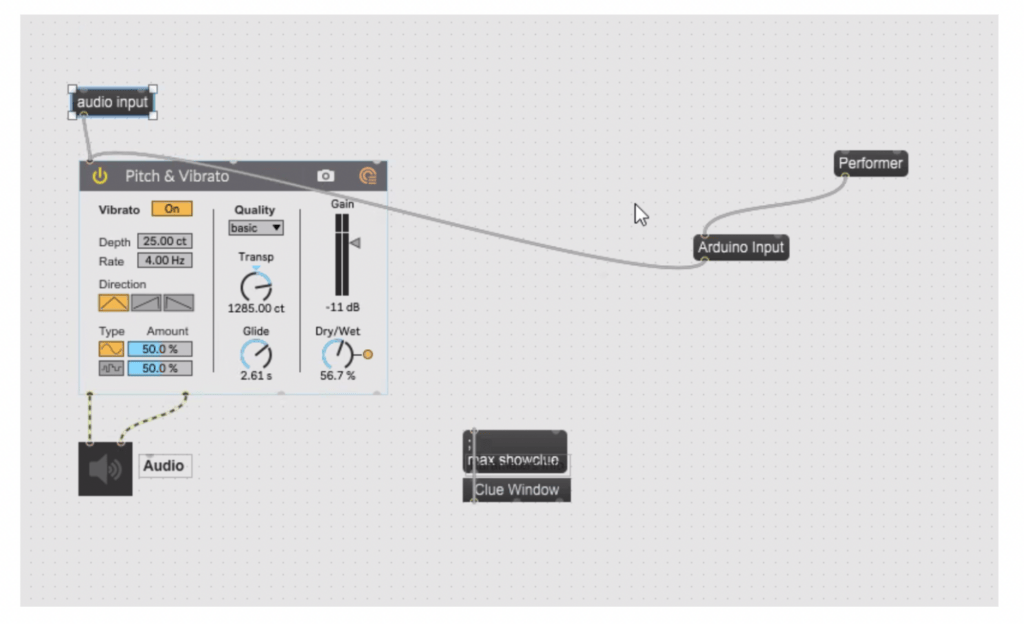

We learned how to use MaxMSP to create output, which gave us a better understanding of what the final prototype would be.

10/26/2023

Our Prototype

Main components in the circuit

lock/unlock

Reset
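As a rough illustration of how a lock/unlock control can guard against accidental touches, the sketch below gates selection presses behind a lock flag and ignores presses that arrive too soon after the previous one. It is written in Python for readability, not taken from the team's Arduino code; the class name `LockableInput`, the 50 ms debounce window, and the choice to start locked are all assumptions for the example.

```python
from typing import Optional


class LockableInput:
    """Decides whether a selection press should be acted on (illustrative only)."""

    def __init__(self, debounce_ms: int = 50) -> None:
        # Assumption: start locked so movement on stage cannot trigger anything.
        self.locked = True
        self.debounce_ms = debounce_ms
        self._last_accepted_ms: Optional[int] = None

    def toggle_lock(self) -> None:
        """Called when the lock/unlock control is pressed."""
        self.locked = not self.locked

    def accept(self, now_ms: int) -> bool:
        """Return True if a press at time now_ms (milliseconds) should count."""
        if self.locked:
            return False
        if (
            self._last_accepted_ms is not None
            and now_ms - self._last_accepted_ms < self.debounce_ms
        ):
            # Too close to the previous accepted press: treat it as a bounce.
            return False
        self._last_accepted_ms = now_ms
        return True
```

On a microcontroller the same idea would typically read the timestamp from a millisecond counter and call `accept` before changing any selection.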

Reference link for prototype: Physical computing project from other website

Reference link for slider: Youtube video

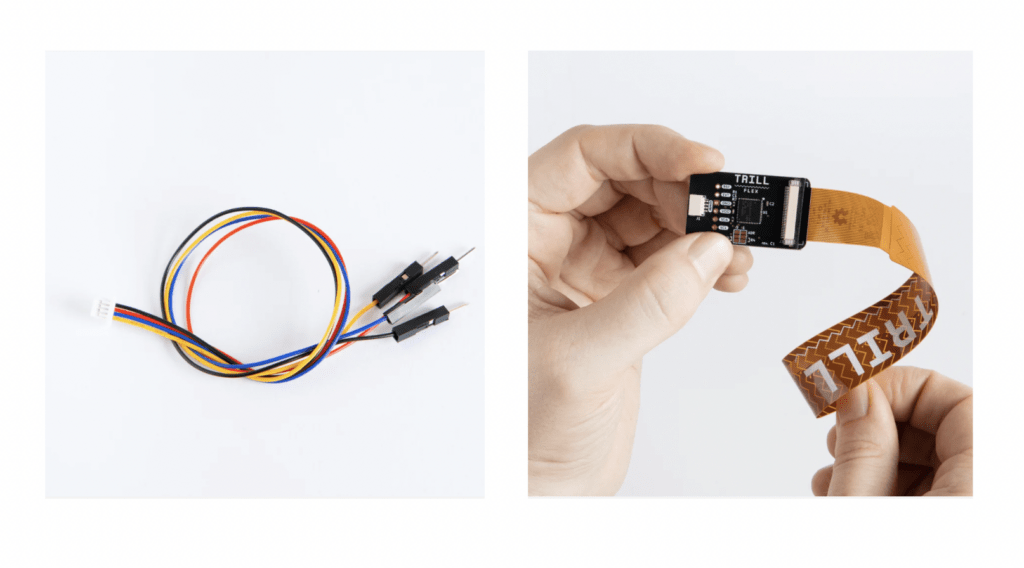

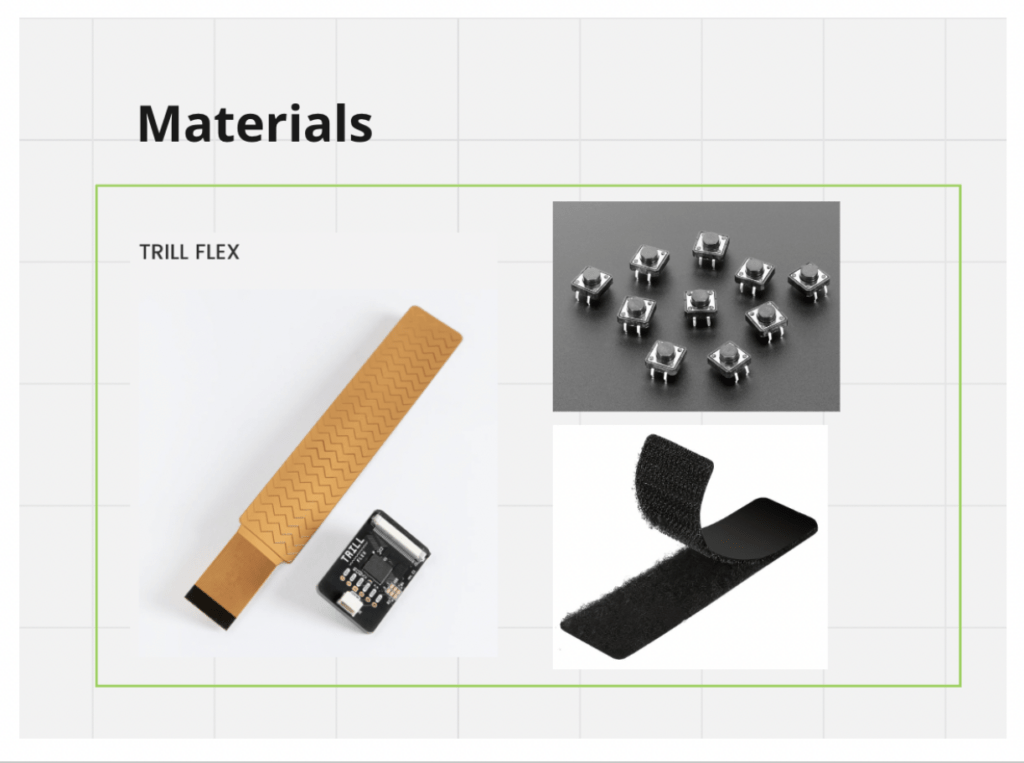

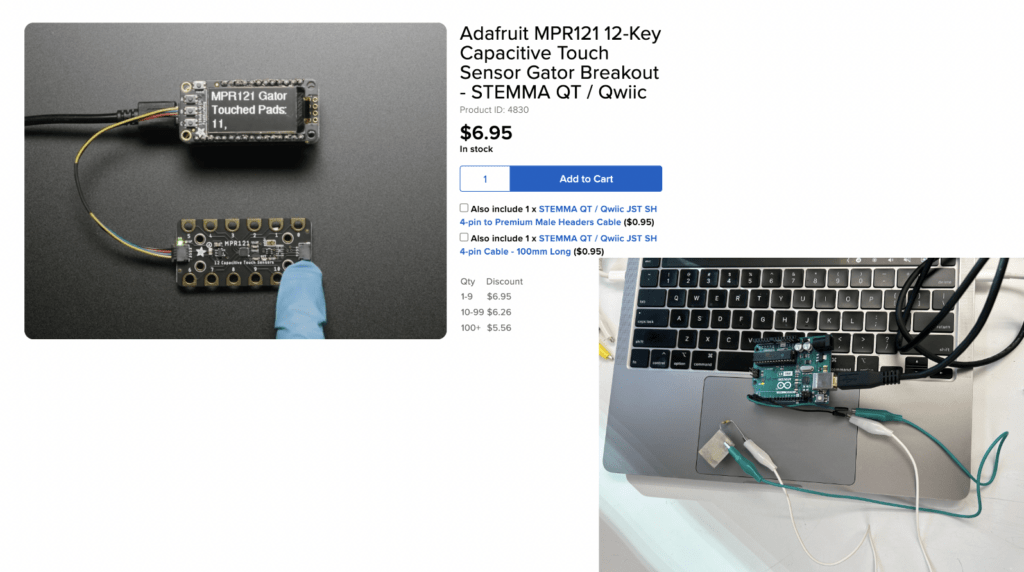

In our weekly group meeting, we also bought materials for the final prototype, including different sensors and parts.

11/01/2023

11/07/2023

11/14/2023

11/19/2023

11/30/2023

12/07/2023

12/14/2023

This week we filmed our documentation with the whole team.

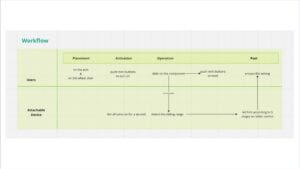

5 W’s Chart

| Components | Questions and considerations |

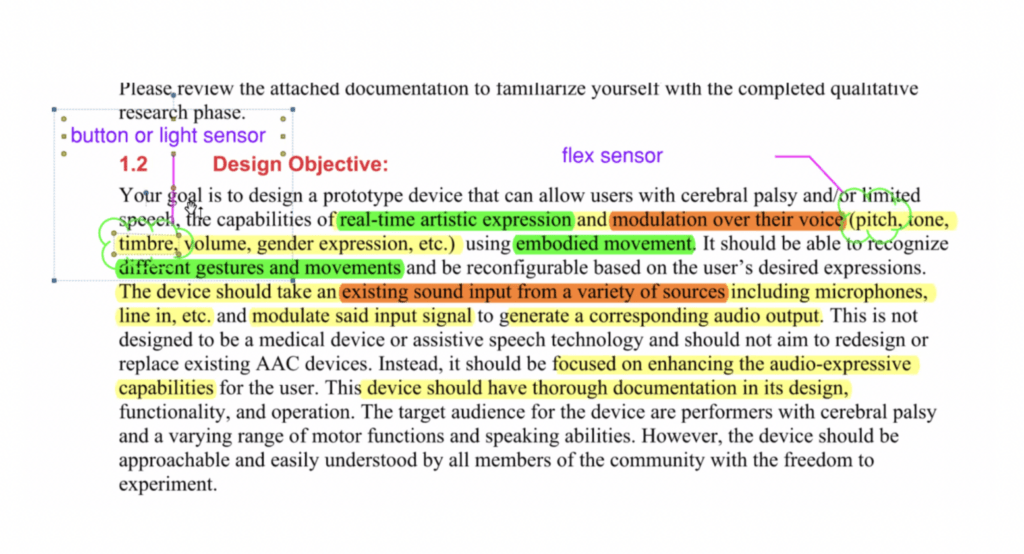

| Background (Why) | Upon receiving the mission, we got to know an incredible opera team called Sensorium Ex. In order to fulfill the design objectives of facilitating real-time artistic expression and modulation for performers with cerebral palsy and/or limited speech, we resolved to craft a user-controlled device aimed at alleviating the inconveniences experienced on the stage. |

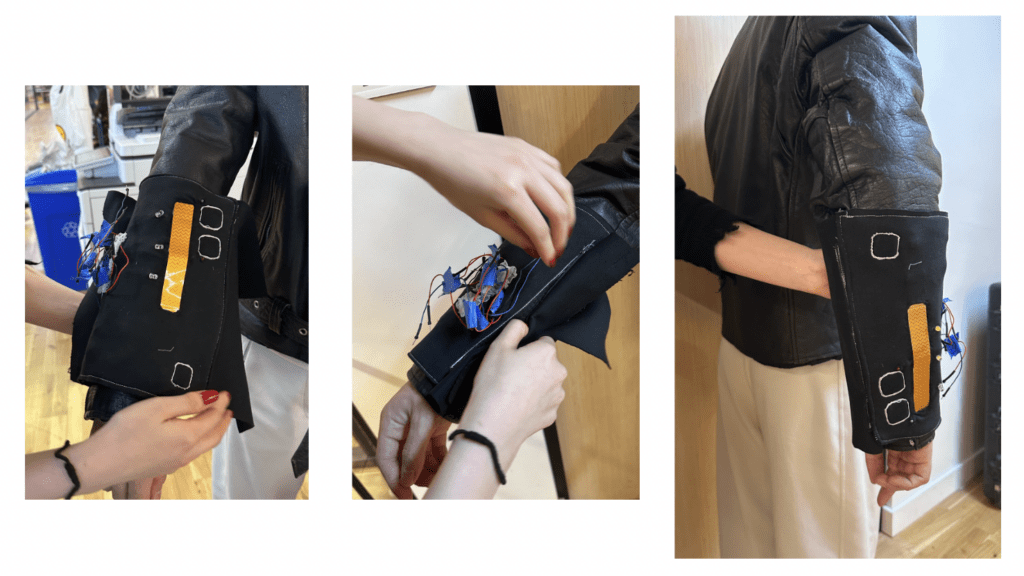

| Impressions (What) | Anticipating the availability of pre-existing AAC devices, we fashioned a wearable voice-emotion synthesizer with a soft interface. In our design process, we considered various factors that may influence human voices, encompassing aspects such as gender, age, emotions, accents, and more. |

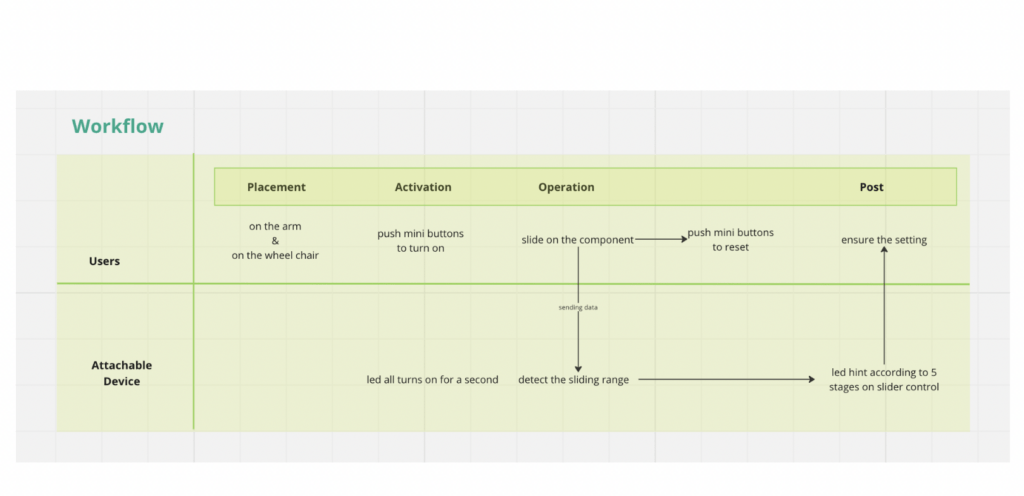

| Events (When) | This device is designed to be both accessible and user-friendly, requiring no prolonged learning curve. Furthermore, we considered sizing and explored diverse placement methods, culminating in the development of an adjustable extension fabric. Consequently, it can be comfortably worn on the arms, legs, or wheelchair handles, accommodating a spectrum of usage scenarios. |

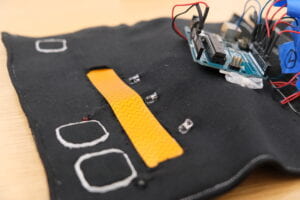

| Sensory Elements (How) | In the final stages, we prototyped pivotal components, including pitch selections representing diverse genders, visualizations of emotional expressions as placeholders for the yet-to-be-incorporated model, and a reset button to forestall inadvertent activation during on-stage movement or dancing. Our design showcases a highly tactile and instinctive control system, empowering users to effortlessly navigate the device without the need for visual attention. Each selection on the device is clearly denoted by an embedded LED hint. |

| Designer/User (Who/Whom) | The designers are Kassia Zheng, Junru Chen, Lang Qin, Emily Lei, and Muqing Wang. Lang did the coding associated with Arduino and electronic connections. Junru and Kassia handled the fabrication part, connecting sensors and sewing all the materials into one piece. Emily was responsible for the visual output using TouchDesigner, while Muqing learned MaxMSP and actively communicated with Luke and Michael. As a team, we worked together on the final output and documentation. |