Last updated: May 18, 2023 (final-round benchmarks and scripts are made public)

Sample Benchmarks

Download, Overview: This link points to a modified version of the ASAP7 library and one sample benchmark, a SHA256 crypto core. Included are all files necessary to run physical design, as well as the Innovus databases from successful runs on our end, which include some manual effort to address DRC issues (see further below).

Important: Only the above link is to be used throughout the contest, not any other ASAP7 version.

Scope: Note that the sample benchmark is only meant for warm-up on the ASAP7 library. No particular effort was made toward security closure or design optimization for these samples. Feel free to also see the discussion of sample benchmarks from last year, which illustrated some trivial, non-optimized ideas for security closure. Recall that there is no single right or wrong approach for the contest; it is up to your creativity and skills to come up with the best defense solutions.

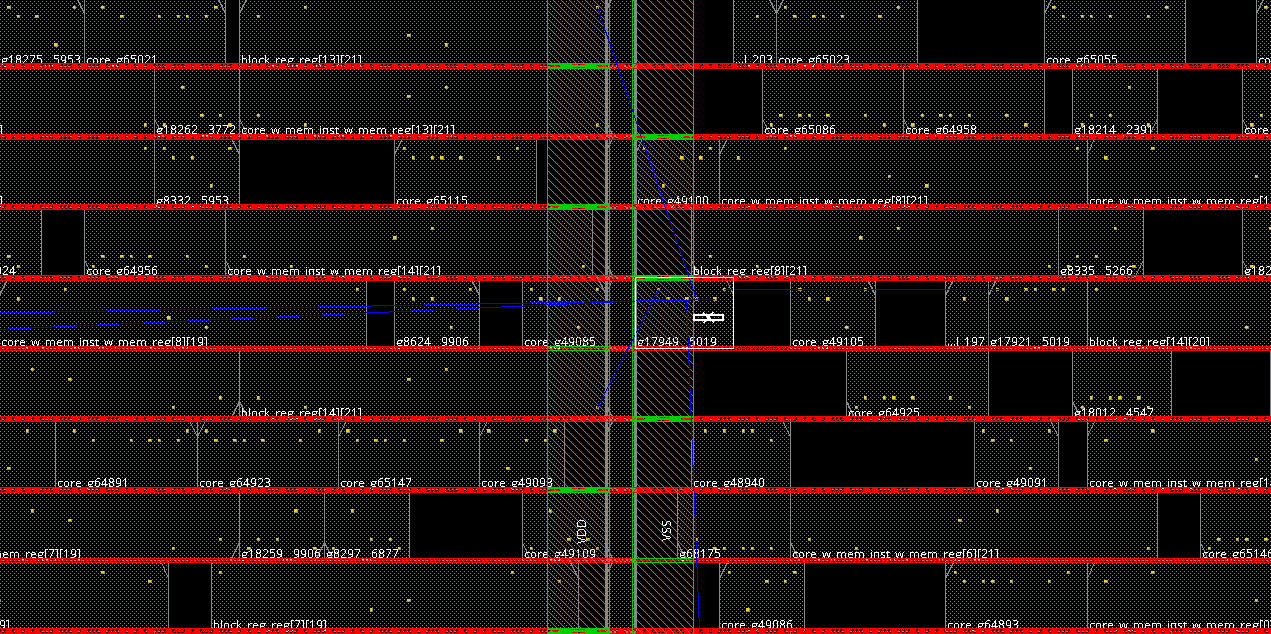

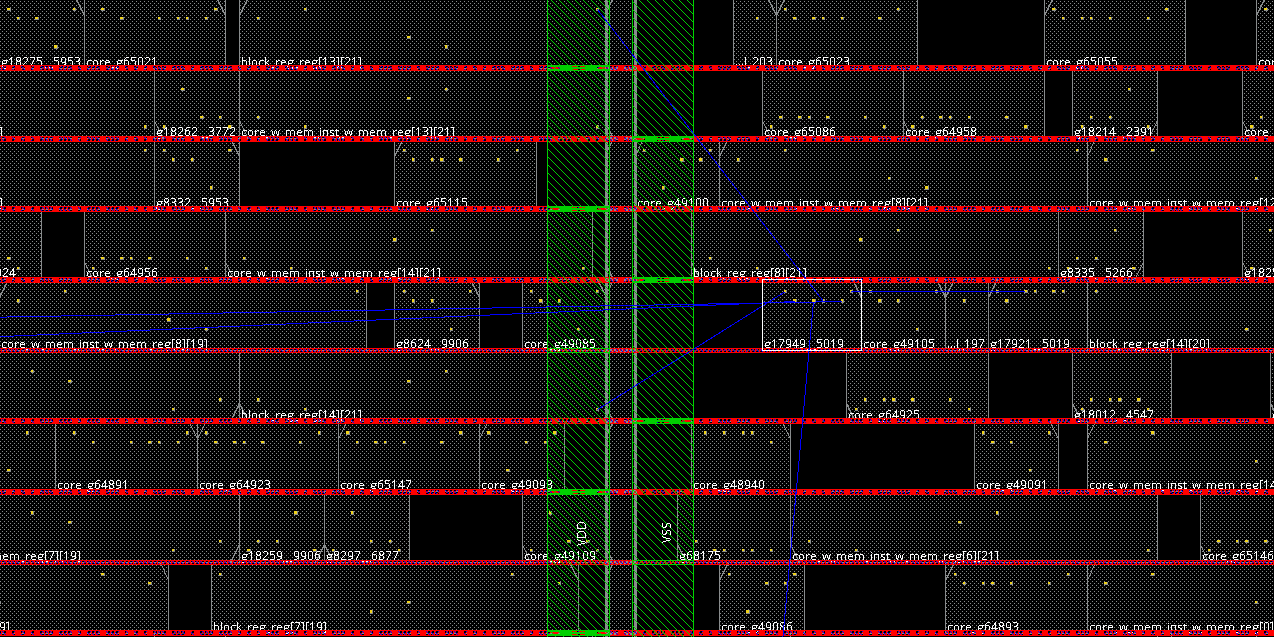

DRC Issues: Also note that, when you run the physical design as is, using the provided script innovus.tcl, there will be some DRC issues related to pin access around power stripes, shown below.

Important: This year, DRC issues will be considered as hard constraints. Thus, some effort is required to fix these and any other issues, ideally considering both design quality and security. Shown below is a simple fix for these particular DRC issues, based on shifting cells, without special attention paid to the impact on security.

Alpha-Round Benchmarks

Download, Overview: This link points to the ZIP bundle of the alpha-round benchmarks, which are different crypto cores. The designs vary in complexity, size, layout density, timing constraints, etc. Besides the baseline layout files, this release also includes official scoresheets as well as relevant scripts and library files.

Scope: The alpha-round benchmarks are to be hardened during the alpha/qualifying round, considering the generic security evaluation of exploitable regions. No particular effort was made toward security closure or design optimization for the provided baseline layouts.

The ZIP bundle has the following contents:

- _scripts/

  - Evaluation scripts

  - Same version as employed in the official evaluation backend

- _ASAP7/

  - Relevant library files

  - Typical corner only

  - Same version as employed in the official evaluation backend

  - This ZIP bundle already contains the latest version of library files provided in the ASAP7 reference.

- Benchmarks, in separate sub-folders

  - Each benchmark sub-folder has the following contents:

    - db/

      - Innovus database for physical design following the ASAP7 reference flow

      - Generated using Innovus 20.11-s130_1

    - design.assets

      - Assets: flip-flops holding sensitive data, representing potential targets for Trojans

      - Note that assets are derived manually from netlists based on their function and sensitivity. For example, all flip-flops holding the secret key are considered as assets.

    - design.def and design.v

      - Post-route design file and corresponding netlist file

    - design.sdc and latency.sdc

      - SDC files for timing analysis

      - As used for timing closure during physical design

    - reports/

      - All the report files as obtained from the evaluation backend, using the same scripts as shared in the _scripts/ folder

      - A few examples:

        - checks_summary.rpt: Summary of design checks

        - *.geom.rpt: DRC checks, using Innovus command ‘check_drc’

        - exploitable_regions.rpt: Exploitable regions checks, using the code provided in $root/_scripts/_cpp and scripts/exploitable_regions.tcl

See also the README files for more details.
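After unpacking the bundle, it can be handy to confirm that every benchmark sub-folder contains the entries listed above. The following is a minimal sketch, not part of the official scripts: the function names are hypothetical, and it assumes that every top-level folder not starting with an underscore is a benchmark sub-folder.

```python
from pathlib import Path

# Entries listed on this page for each benchmark sub-folder.
EXPECTED = [
    "db",
    "design.assets",
    "design.def",
    "design.v",
    "design.sdc",
    "latency.sdc",
    "reports",
]


def missing_entries(benchmark_dir, expected=EXPECTED):
    """Return the expected entries that are absent from one benchmark sub-folder."""
    root = Path(benchmark_dir)
    return [name for name in expected if not (root / name).exists()]


def check_bundle(bundle_root):
    """Map each benchmark sub-folder name to the list of its missing entries.

    Assumption: folders starting with '_' (such as _scripts/ and _ASAP7/)
    are support folders, and all other top-level folders are benchmarks.
    """
    report = {}
    for sub in sorted(p for p in Path(bundle_root).iterdir() if p.is_dir()):
        if sub.name.startswith("_"):
            continue
        report[sub.name] = missing_entries(sub)
    return report
```

Calling `check_bundle` on the directory where the ZIP bundle was extracted returns a dictionary; an empty list for a benchmark means all listed entries were found.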

Final-Round Benchmarks

Download, Overview: This link points to the ZIP bundle of the final-round benchmarks, which are different crypto cores. The designs vary in complexity, size, layout density, timing constraints, etc. Besides the baseline layout files, this release also includes official scoresheets as well as relevant scripts and library files.

Scope: The final-round benchmarks are to be hardened during the final round, considering the generic security evaluation of exploitable regions as well as the advanced evaluation based on actual Trojan insertion. No particular effort was made toward security closure or design optimization for the provided baseline layouts. In fact, the designs are the very same as in the alpha round.

The ZIP bundle has the following contents:

- _scripts/

  - Evaluation scripts

  - Same version as employed in the official evaluation backend

- _ASAP7/

  - Relevant library files

  - Typical corner only

  - Same version as employed in the official evaluation backend

  - This ZIP bundle already contains the latest version of library files provided in the ASAP7 reference.

- Benchmarks, in separate sub-folders

  - Each benchmark sub-folder has the following contents:

    - db/

      - Innovus database for physical design following the ASAP7 reference flow

      - Generated using Innovus 20.11-s130_1

    - design.assets

      - Assets: flip-flops holding sensitive data, representing potential targets for Trojans

      - Note that assets are derived manually from netlists based on their function and sensitivity. For example, all flip-flops holding the secret key are considered as assets.

    - design.def and design.v

      - Post-route design file and corresponding netlist file

    - design.sdc and latency.sdc

      - SDC files for timing analysis

      - As used for timing closure during physical design

    - reports/

      - All the report files as obtained from the evaluation backend, using the same scripts as shared in the _scripts/ folder

      - A few examples:

        - checks_summary.rpt: Summary of design checks

        - *.geom.rpt: DRC checks, using Innovus command ‘check_drc’

        - exploitable_regions.rpt: Exploitable regions checks, using the code provided in $root/_scripts/_cpp and scripts/exploitable_regions.tcl

    - TI/

      - Files related to ECO Trojan insertion (TI)

      - *.dummy: Dummy files, referring to the ECO TI modes used in the backend as well as the Trojan variants.

      - *.gds.gz: The layout file after ECO TI on the baseline layout. Note that we only share the layout post ECO TI, not the underlying netlist. This mimics the working mode of security-concerned designers or security researchers, who can only inspect the layout after manufacturing. Nevertheless, the layout files still contain names of nets and instances that can be easily correlated to the Trojan parts. This is also done on purpose, to avoid over-complicating the handling of the benchmarks in this contest, where the focus is on security closure, not on Trojan detection.

      - Note that timing reports after ECO TI are provided in reports/timing.*.rpt. However, further reports, especially for DRC checks, are not provided; this is on purpose, to avoid readily disclosing all details of the actual Trojan design.

See also the README files for more details.
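The post-TI layouts in TI/ are shipped gzip-compressed. For tools that expect an uncompressed GDSII file, they can be unpacked with the Python standard library. This is a minimal sketch rather than an official script; the function name is hypothetical, and the uncompressed files are written next to the originals.

```python
import gzip
import shutil
from pathlib import Path


def decompress_layouts(ti_dir):
    """Decompress every *.gds.gz file in ti_dir and return the new *.gds paths."""
    written = []
    for src in sorted(Path(ti_dir).glob("*.gds.gz")):
        dst = src.with_suffix("")  # drops only the trailing ".gz"
        # Stream the data so large layouts are not loaded into memory at once.
        with gzip.open(src, "rb") as fin, open(dst, "wb") as fout:
            shutil.copyfileobj(fin, fout)
        written.append(dst)
    return written
```

Calling `decompress_layouts` on the TI/ folder of one benchmark leaves the compressed files in place and adds one `.gds` file per archive.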