-Patterns, Image and Bias

During this week, I would like to have one-on-one final project ideation sessions. Sign up here.

15 / Tu 2 Apr Midterm recap, return to a few concepts of “On the Way to Computational Thinking” (active citizenry in a newly postdigital culture [40]; putting well-known algorithms to use [48]; modeling our understanding of the human mind on algorithms [50])

Readings: Kitchin, “Thinking Critically about and Researching Algorithms”

Noble, from Algorithms of Oppression (in Drive)

Th 4 Apr No classes. Isra & Mi’raj holiday.

16 / Tu 9 Apr Manual Pattern comparison with Images in Digital Space using the ARIES tool (comparison of features on a digital canvas). We will compare images of Renaissance artists in class and then move on to Manga.

Preparation: (1) Add at least 5 of your favorite manga images to the class Drive manga folder (I have started us off with 100). Try to follow the numbering scheme a+number.

(2) Sign up for an account at ARIES (https://artimageexplorationspace.com/). You need to go to sign-in and then create an account. If you have a choice, go for a medium-size image in PNG format. For this one, it is fine to use your NYU account (the project was created at NYU NY in collaboration with the Frick digital art history lab).

Reading: How to be a Better Manga Artist; Who was Andrea del Sarto?

17 / Th 11 Apr Art History and Machine Learning

Today we will discuss how researchers are using machine learning to think about big picture questions in art history.

Reading: Interview with Fei-Fei Li from Architects of Intelligence (in Drive)

Elgammal et al., The Shape of Art History in the Eyes of the Machine (2018) (especially sections 2, 2.1, 4)

Ted talk: How We’re Teaching Computers to Understand Images (Li)

Now try this… Create Anime Figures with AI!

18 / Sa 13 Apr Practicum: Manipulating the Color Channels and Classifying png images.

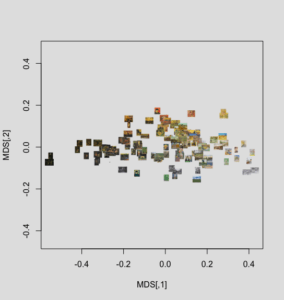

Today we will discuss how images can be “read” by a computer using color channels and then how we can perform a basic “unsupervised” classification of them by color. We will perform some image manipulation and classification based on two scripts in R. We will use two datasets (our class manga set and a dataset of van Gogh paintings).

Reading: Multidimensional scaling; RGB Color Model
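To make the idea of “reading” an image by its color channels concrete, here is a minimal sketch. It is written in Python rather than R and is not one of the two class scripts. It assumes each image has already been loaded as a plain list of (red, green, blue) pixel values from 0 to 255. The toy images and the function names are hypothetical. The sketch averages each channel per image and then measures how far apart the images are in color; a table of pairwise distances like this is the kind of input that multidimensional scaling turns into a scatter plot.

```python
import math


def mean_color(pixels):
    """Average the red, green and blue channels over all pixels of one image."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def color_distance(a, b):
    """Euclidean distance between two (r, g, b) triples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def distance_matrix(images):
    """Return image names (sorted) and their pairwise mean-color distances."""
    names = sorted(images)
    means = {name: mean_color(images[name]) for name in names}
    matrix = [[color_distance(means[r], means[c]) for c in names] for r in names]
    return names, matrix


# Hypothetical two-pixel "images", named with the class a+number scheme.
toy_images = {
    "a1": [(250, 10, 10), (240, 20, 20)],  # mostly red
    "a2": [(230, 30, 30), (255, 0, 0)],    # mostly red
    "a3": [(10, 10, 240), (20, 30, 250)],  # mostly blue
}

names, dist = distance_matrix(toy_images)
for name, row in zip(names, dist):
    print(name, [round(d, 1) for d in row])
```

In this toy data the two reddish images end up close to each other and far from the bluish one. No labels were supplied, which is what makes the grouping “unsupervised”.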

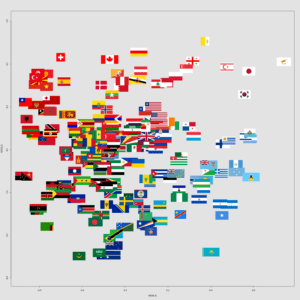

https://www.r-bloggers.com/flag-space-a-scatter-plot-of-raster-images/

Scatter plot of van Gogh dataset (Folego et al.) https://ieeexplore.ieee.org/document/7532335

Blog 3: Blog posting on patterns. Wikipedia calls a pattern “a discernible regularity in the world or manmade design [with] elements of the pattern repeat[ing] in a predictable manner.” Do you agree with this definition of a pattern? Do you think that patterns are always significant? What do you think the relationship between repeated patterns and style is in the two exercises that we did with digital environments?

Use this blog posting to reflect on the two exercises that we did: class 16’s manual feature comparison of Renaissance art and manga facial features in ARIES and class 18’s automatic color detection using the scripts in R with the two visual corpora. What were the differences in these two methods? What did each one of them allow you to see? What are the shortcomings of each? What did you learn about manga or van Gogh from examining the visual results of the exercise? In what other forms of culture might pattern detection produce interesting results?

For your convenience, the manga classification plot (mangaplot.png) is in Drive (image data > manga > manga png), and vangoghplot.pdf is in the van Gogh folder. Due date: 24 April, 11:59pm.

-Machine(s) Learning

19 / Tu 16 Apr Optical Character Recognition OCR & Handwritten Text Recognition HTR I

Watch: What is Machine Learning?

Explore: Learning to Read Historical Handwriting

Readings for Transkribus exercise:

Breakthrough in Understanding Medieval Texts

Machines Reading the Archive

Advanced: Transkribus at dhd2019 (slides)

Demo: Optical Character Recognition (David) with ABBYY FineReader, including its pattern training function, on English text. OCR allows us to turn printed or typed documents into machine-readable ones. We will be using “cleaned 1935 report on Yas Island” (Drive).

Transkribus is a text recognition system that works for handwriting and print. We will do a sample of text in handwritten English by the philosopher Jeremy Bentham. Then, we will try a sample of text in printed Ottoman Turkish and in printed Arabic.

Discussion: What are some ways that machine learning is present in the devices that you carry? What is reCAPTCHA and what is its relationship to machine learning? What kinds of texts would OCR deal with easily?

https://twitter.com/cneudecker/status/1025033412065873920

20 / Th 18 Apr Handwritten Text Recognition HTR II

Preparation:

Make sure to download Transkribus to your own laptop if you haven’t done it in the previous class. You can create an account or log in with Google.

In preparation for class, I uploaded the 288-page document and pre-ran the segmentation and layout analysis as well as the model. Creating the document took 32 minutes. The segmentation took 10 minutes and the HTR model ran for 120 minutes.

Reading:

British India Office Records | Bushire Residency

Today we will work with some historical manuscript materials about the Arabian Gulf region: Political Residency of the Gulf (Outward Let[ters], Book No 42, 1st January 1826 to 31 July 1826). These documents are now digitized and available online, but they are not searchable!

(1) Let’s compare the handwriting of Jeremy Bentham (1748-1832) and the Political Residency letters (1826). A public model for English was created using transcriptions that were made of Bentham. I applied this model to our 288-page text. What is the approximate error rate?

(2) We will begin transcribing together, starting with some sample pages.
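The error-rate question in (1) can be made concrete with a character error rate (CER): the number of single-character insertions, deletions and substitutions needed to turn the machine’s output into a correct transcription, divided by the length of the correct transcription. The following is a minimal Python sketch of that calculation, not the code Transkribus itself runs. The function names and the sample strings are hypothetical and are not taken from the Bushire document.

```python
def edit_distance(reference, hypothesis):
    """Levenshtein distance: minimum number of single-character edits."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            cost = 0 if ref_char == hyp_char else 1
            current.append(min(
                previous[j] + 1,         # character missing from the machine output
                current[j - 1] + 1,      # extra character in the machine output
                previous[j - 1] + cost,  # match or substitution
            ))
        previous = current
    return previous[-1]


def character_error_rate(reference, hypothesis):
    """Edits needed, as a fraction of the length of the correct transcription."""
    if not reference:
        raise ValueError("reference transcription must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


# Hypothetical example: a human transcription versus a machine reading.
human = "Political Residency"
machine = "Politcal Residencv"
print(f"CER: {character_error_rate(human, machine):.1%}")
```

To estimate the rate by hand, correct a few lines of the model’s output yourself and compare your version with the original output in this way.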

Hints: If you do not understand a word, then use the tag “unclear” from the metadata tab. You do this by highlighting the words in the text editor and clicking the small + next to the yellow field. Save frequently using the old-fashioned diskette button. If you get an “already in use” error when saving, wait a minute and try again. If a segment has been identified that is not useful for the transcription, you can delete it using the red “do not enter” icon. You can also refer to the Transkribus user guide here.

21 / Tu 23 Apr Handwritten Text Recognition HTR III

Explore: Transcribe Bentham project

Reading: Transcribing Foucault’s Handwriting with Transkribus

Gamifying the Transcription of Bentham’s Works

Today we will examine how our model does in expanding the text from the Bushire Residency Archive.

What do you think about human handwriting after doing this exercise? Does one’s handwriting remain the same across one’s entire life? What do you imagine the limits of HTR to be?

Want to learn more about machine learning and R? You might check this book.

Blog 4: Blog posting on machines learning. In this blog posting, please reflect on the work of training a computer to learn handwriting. What are some of the lessons of the Transcribe Bentham project? What was it like encountering an archival document from the early nineteenth century? In what ways was the English model based on Bentham’s handwriting successful in recognizing the writing in the Bushire Residency document? How easy or difficult was it to transcribe the Bushire Residency document? What difficulties did you encounter in dealing with the “reality” of a handwritten text? Were there ways that your experience matched that of the authors of the article on Foucault’s papers? Were there ways that it diverged? Can you think of other historical collections that you might want to transcribe for a deeper understanding of local histories? Supplement your comments with screenshots of difficult “sticking points” in the texts, both for yourself and the machine. (Due date: 9 May, 11:59pm.)