PROJECT DESCRIPTION

Gwangun Kim

Jesus Said Chill

2023

Stop by and take a rest for a while

Website Link: https://gwangunkim.github.io/CCFinal/my-second-projectb/

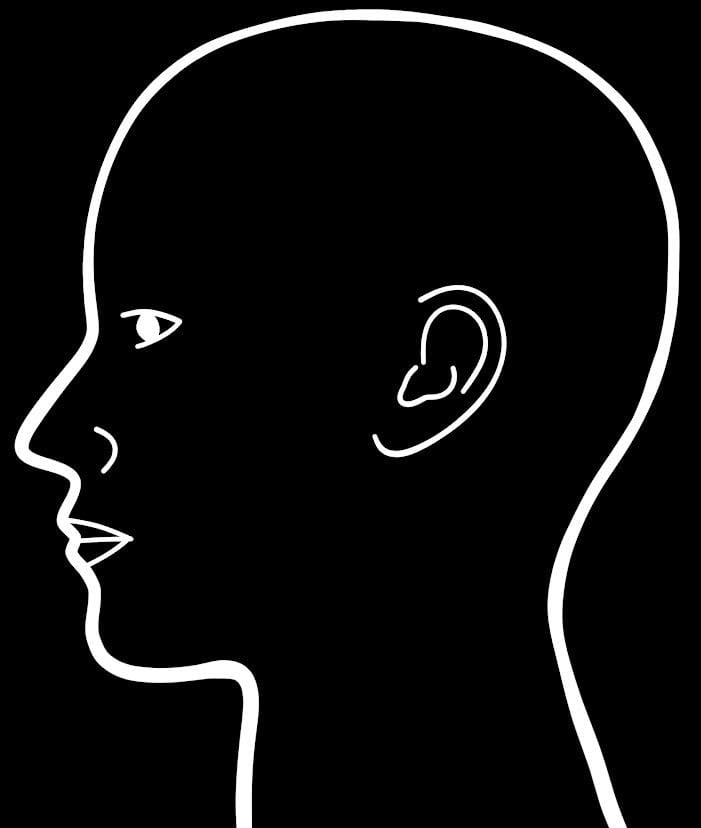

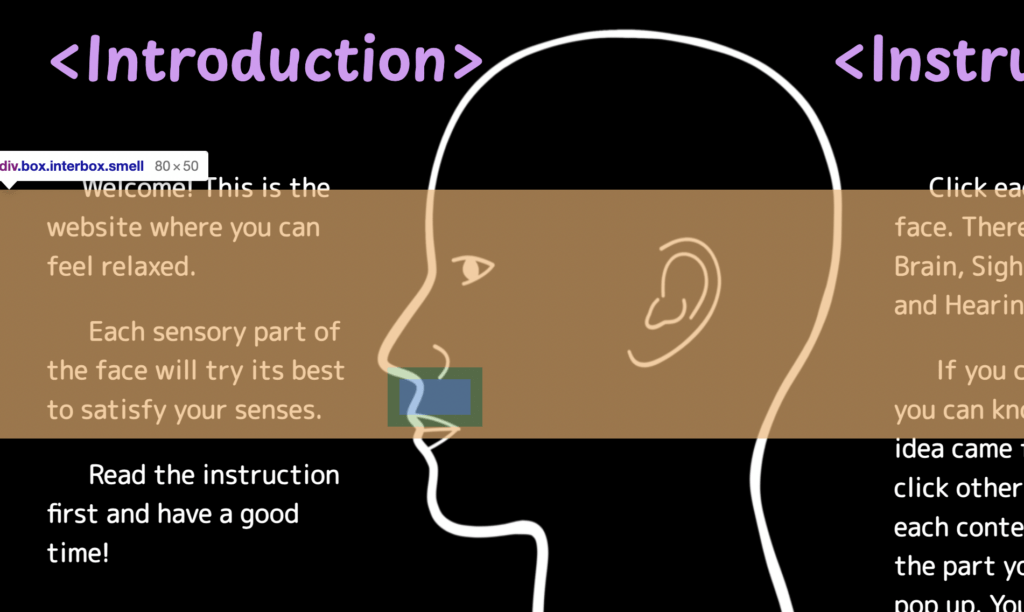

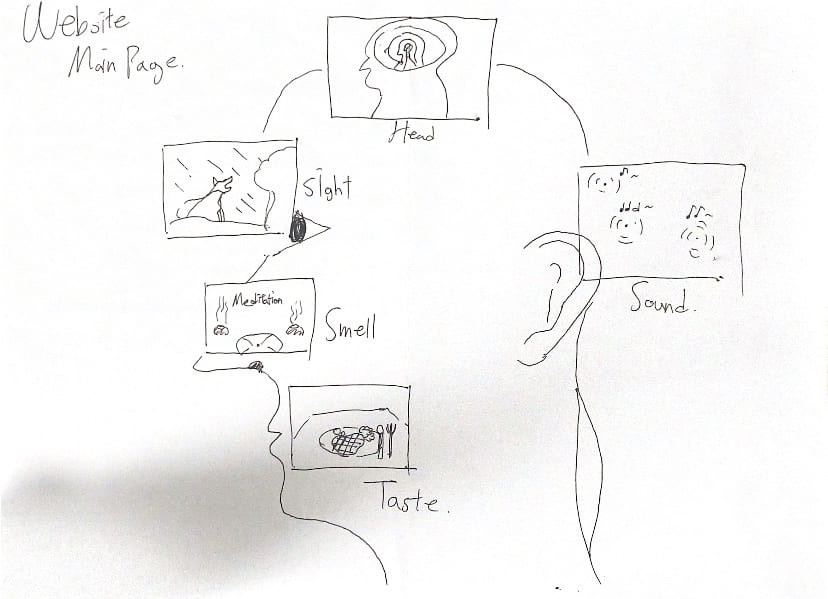

“Jesus Said Chill” is a website where you can relax by interacting with four sensory parts: Sight, Sound, Taste, and Smell. By clicking a part of the face in the middle of the page, you can use the mouse to interact with content related to that part.

While surfing the internet, I noticed that images of the human brain and of the universe look very similar. This led me to imagine that the universe we live in could be part of someone’s brain. If that were the case, we would be beings too small to make any change in this vast universe. Looked at from a different perspective, however, it also means that we can pause our hard work and take a break for a while, because doing so will not change anything. Therefore, I created this healing website to help people relax by watching beautiful scenery, listening to ASMR sounds, discovering appetizing food images, and meditating with a fragrance I created.

1) Process: Design and Composition

Clicking the face:

The most important thing was to visualize each sensory part. The objective was for users to find and enjoy content that corresponds to their desired sensory experience all in one place. Simply listing the names of each sensory part seemed mundane and uninteresting to me. After much contemplation, I decided to design a human face where users can click on the eyes, nose, or mouth to navigate to web pages tailored to each sensory part.

Sight Part:

I often feel relaxed by watching the sunset or the stars in the night sky. I wanted to provide the audience with a similar experience, so I used the “particles” we learned in class to create moving birds and stars in the p5 sketch.

Sound Part:

To visualize the sound that the audience hears, I added colors that correspond to each sound.

Taste Part:

It was difficult to visualize “taste”. I thought I could work up the audience’s appetite if I hid the food image and let the audience hear the eating sound.

Adding Quotes:

The theme of my website is healing. While interactions alone can provide healing experiences, I believe that adding meaningful quotes or uplifting messages related to each sensory part makes them even more effective. Therefore, I added quotes matched to each part and highlighted them in a different color (purple), bringing vibrancy to the website.

By creating HTML pages for each sensory part and designing a page that allows users to navigate to each page according to the position on the face, I created an engaging interaction rather than a simple list to click on. Additionally, by incorporating impressive quotes, I aimed to help people understand my theme and provide them with opportunities for relaxation.

2) Process: Technical

To create click areas for each sensory part, I made five div boxes and positioned them with the CSS “absolute” position property. I made the boxes transparent so that clicking on the face feels seamless. However, the boxes drifted out of position when the browser window was resized or when the page was viewed on laptops with different screen sizes. If I could do this project again, I would aim to make the boxes fully responsive.
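One possible route to fully responsive boxes, sketched here under assumptions: store each box as fractions of the face image's width and height instead of fixed pixel offsets, and test clicks against those fractions. The part names and coordinates below are placeholders, not measurements from the actual face image, and `partAt` is a hypothetical helper.

```javascript
// Hypothetical hit boxes for the face image, stored as fractions (0 to 1)
// of the image's width and height instead of fixed pixel positions.
// The numbers are placeholders, not measurements from the real image.
const FACE_PARTS = [
  { name: "sight", left: 0.25, top: 0.30, width: 0.50, height: 0.12 },
  { name: "sound", left: 0.05, top: 0.40, width: 0.12, height: 0.15 },
  { name: "smell", left: 0.42, top: 0.45, width: 0.16, height: 0.15 },
  { name: "taste", left: 0.35, top: 0.65, width: 0.30, height: 0.10 },
];

// Convert a click position (in pixels, relative to the image's top-left
// corner) into the name of the face part that was clicked, or null.
function partAt(clickX, clickY, imageWidth, imageHeight) {
  // Normalize the click so the same boxes work at any rendered image size
  const u = clickX / imageWidth;
  const v = clickY / imageHeight;
  for (const part of FACE_PARTS) {
    const insideX = u >= part.left && u <= part.left + part.width;
    const insideY = v >= part.top && v <= part.top + part.height;
    if (insideX && insideY) {
      return part.name;
    }
  }
  return null; // the click missed every box
}
```

For example, `partAt(500, 400, 1000, 1000)` and `partAt(250, 200, 500, 500)` both return `"sight"`, because the click lands at the same relative spot on the face. The same fractions could also be written directly as percentage `left`, `top`, `width`, and `height` values on the transparent divs, placed inside a `position: relative` wrapper that is sized to the image.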

Sight Part:

I distinguished between night and day by creating two scenes, each drawn with its own classes: Stars and Meteor for the night, and Bird for the sunset. Using a Boolean condition, I made the sunset scene appear when the mouse is not clicked and the night scene appear when it is clicked.

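Night:

```javascript
// A twinkling star: its size oscillates with a sine wave
class Stars {
  constructor() {
    this.x = random(width);
    this.y = random(2 * height / 3); // only in the sky (upper two-thirds)
    this.s = random(1, 3);           // base size
    this.t = random(TAU);            // random phase so stars twinkle out of sync
    this.size = 0;
  }

  display() {
    fill(255);
    circle(this.x, this.y, this.size);
  }

  update() {
    this.t += 0.1;
    this.size = this.s + sin(this.t) * 2; // swings up to 2 above or below s
  }
}

// A shooting star that travels diagonally down and to the right
class Meteor {
  constructor() {
    this.x = random(width);
    this.y = random(2 * height / 3);
    this.s = 2.5;
    this.speed = random(3, 8);
    this.isGone = false;
  }

  display() {
    noStroke();
    fill("white");
    circle(this.x, this.y, this.s);
    // the tail: a thin triangle trailing up and to the left of the head
    triangle(this.x, this.y + 15 / 12, this.x - 100 / 12, this.y - 50 / 12, this.x, this.y - 15 / 12);
  }

  update() {
    this.x = this.x + this.speed;
    this.y = this.y + this.speed;
  }

  // flag the meteor for removal once it leaves the sky or the canvas
  isitgone() {
    if (this.y > 2 * height / 3 || this.x > width) {
      this.isGone = true;
    }
  }
}
```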

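Sunset:

```javascript
// A pair of birds (one big, one small) that flap, drift upward, and shrink
class Bird {
  constructor() {
    this.x = random(width);
    this.y = random(height / 2, height); // start in the lower half
    this.xd = random(-1, 1);             // horizontal drift per frame
    this.flap = 0;                       // wing-tip y of the big bird
    this.flap2 = 14;                     // wing-tip y of the small bird
    this.scalesize = 1.1;
    this.isGone = false;
  }

  display() {
    push();
    translate(this.x, this.y);
    stroke("black");
    fill("black");
    strokeWeight(3.5);
    scale(this.scalesize);

    // big bird
    circle(0, 0, 3);
    line(0, 0, 10, -2.5);
    line(0, 0, -10, -2.5);
    line(10, -2.5, 20, this.flap);
    line(-10, -2.5, -20, this.flap);

    // small bird
    strokeWeight(2.5);
    circle(30, 15, 1.5);
    line(30, 15, 35, 14);
    line(30, 15, 25, 14);
    line(35, 14, 40, this.flap2);
    line(25, 14, 20, this.flap2);

    pop();
  }

  update() {
    // both birds flap on the same sine wave
    this.flap = map(sin(frameCount * 0.15), -1, 1, -10, 5);
    this.flap2 = map(sin(frameCount * 0.15), -1, 1, 10, 18);
    this.x += this.xd;
    this.y -= 0.5;           // fly upward
    this.scalesize -= 0.002; // shrink as they fly into the distance
  }

  // flag for removal once the birds have shrunk to nothing
  isitgone() {
    if (this.scalesize < 0) {
      this.isGone = true;
    }
  }
}
```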
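The loop that drives these classes is not shown above. A minimal sketch of how such a loop might look, assuming a click toggles an `isNight` flag. `FakeParticle` is a hypothetical stand-in that only mimics the `update()` / `isitgone()` / `isGone` interface of Meteor and Bird, so the bookkeeping runs as plain JavaScript outside p5.

```javascript
// Hypothetical stand-in for Meteor/Bird: disappears after `lifetime` frames
class FakeParticle {
  constructor(lifetime) {
    this.age = 0;
    this.lifetime = lifetime;
    this.isGone = false;
  }
  update() {
    this.age += 1;
  }
  isitgone() {
    if (this.age >= this.lifetime) this.isGone = true;
  }
}

let isNight = false; // the Boolean that decides which scene is shown
let nightParticles = [];
let sunsetParticles = [];

// One frame: update the active scene's particles, then drop the ones that
// flagged themselves as gone so the array does not grow forever.
function stepScene() {
  const active = isNight ? nightParticles : sunsetParticles;
  for (const p of active) {
    p.update();
    p.isitgone();
    // in the p5 sketch, p.display() would be called here
  }
  const remaining = active.filter((p) => !p.isGone);
  if (isNight) {
    nightParticles = remaining;
  } else {
    sunsetParticles = remaining;
  }
}

// In p5, mousePressed() could call this to switch scenes
function toggleScene() {
  isNight = !isNight;
}
```

If the night scene should only show while the mouse button is held down, the flag could be replaced by p5's built-in `mouseIsPressed` variable. The Stars class has no `isitgone()`, so in this design the stars would be kept in their own array that is never filtered.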

Sound Part:

I loaded the sounds in the p5 “preload” function, stored them in an array, and played one whenever the mouse is clicked. At first, however, all the sounds played at the same time. Thanks to Marcela, I resolved the issue with the following code, which stops every other sound before the chosen one is played.

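```javascript
// `sounds` is the array filled in preload(); `index` is a global variable
function mousePressed() {
  // just one sound per click: pick one at random...
  index = int(random(sounds.length));

  // ...stop every sound that might still be playing...
  for (let i = 0; i < sounds.length; i++) {
    sounds[i].stop();
  }

  // ...then play only the chosen one
  sounds[index].play();
}
```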
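Because each sound has its own color, one way to keep the two in sync is to store them side by side so that a single index selects both. The sketch below assumes that design. `StubSound` is a hypothetical stand-in for `p5.SoundFile` (it implements only `play()`, `stop()`, and `isPlaying()`, which `p5.SoundFile` also provides), and the track names and RGB values are placeholders.

```javascript
// Hypothetical stand-in for a p5.SoundFile: only tracks whether it is playing
class StubSound {
  constructor(name) {
    this.name = name;
    this.playing = false;
  }
  play() { this.playing = true; }
  stop() { this.playing = false; }
  isPlaying() { return this.playing; }
}

// Pair each sound with its color so one index picks both together.
// Names and colors are placeholders, not the project's assets.
const tracks = [
  { sound: new StubSound("rain"), color: [90, 120, 200] },
  { sound: new StubSound("waves"), color: [60, 180, 190] },
  { sound: new StubSound("fire"), color: [230, 120, 60] },
];

let current = -1; // index of the track that is playing, -1 if none

// Same idea as mousePressed() above: stop everything, then play one.
// The index is passed in so the function can be tested without randomness.
function playOne(chosenIndex) {
  for (const track of tracks) {
    track.sound.stop();
  }
  current = chosenIndex;
  tracks[current].sound.play();
  return tracks[current].color; // draw() could use this, e.g. for background()
}
```

In the sketch, `mousePressed()` would call `playOne(int(random(tracks.length)))`, and `draw()` could read `tracks[current].color` to tint the screen for whichever sound is playing.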

Taste Part:

For the interaction of hiding and revealing the food by erasing pixels, I followed a YouTube tutorial on 2D arrays (see Credits), because the “pixels” technique I learned in class did not let me work freely with x and y coordinates. I created a 2D array of cells covering the canvas and made each cell change from black to transparent as the mouse approached it, revealing the food image underneath.

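2D array:

```javascript
// Build a cols x rows grid: each Particle covers one size x size square
// of the canvas, positioned from its (i, j) grid coordinates
for (let i = 0; i < cols; i++) {
  rectangles[i] = [];
  for (let j = 0; j < rows; j++) {
    rectangles[i][j] = new Particle(i * size, j * size, size, size);
  }
}
```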
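The reveal step itself is not shown above. A minimal plain-JavaScript sketch of one way it could work, assuming each cell keeps its own alpha value that drops whenever the mouse is within a fixed radius of the cell's center. `Cell`, `makeGrid`, `updateGrid`, and the radius and fade values are hypothetical, not the project's Particle class.

```javascript
// Hypothetical stand-in for the Particle class: starts as opaque black
// (alpha 255) and fades toward transparent when the mouse comes near.
class Cell {
  constructor(x, y, w, h) {
    this.x = x;
    this.y = y;
    this.w = w;
    this.h = h;
    this.alpha = 255;
  }

  // Fade the cell if the mouse is closer than `radius` pixels to its center
  update(mx, my, radius, fadeStep) {
    const cx = this.x + this.w / 2;
    const cy = this.y + this.h / 2;
    if (Math.hypot(mx - cx, my - cy) < radius) {
      this.alpha = Math.max(0, this.alpha - fadeStep);
    }
  }
}

// Same nested-loop layout as the 2D array above
function makeGrid(cols, rows, size) {
  const grid = [];
  for (let i = 0; i < cols; i++) {
    grid[i] = [];
    for (let j = 0; j < rows; j++) {
      grid[i][j] = new Cell(i * size, j * size, size, size);
    }
  }
  return grid;
}

// Call once per frame with the current mouse position
function updateGrid(grid, mx, my, radius = 40, fadeStep = 51) {
  for (const column of grid) {
    for (const cell of column) {
      cell.update(mx, my, radius, fadeStep);
    }
  }
}
```

In p5, each cell would then be drawn over the food image with `fill(0, cell.alpha)` and `rect(cell.x, cell.y, cell.w, cell.h)`, so the cells the mouse lingers over become see-through. With a fade step of 51, a cell turns fully transparent after five frames under the mouse.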

All the web pages were laid out with CSS flexbox (display: flex), and I used percentages instead of pixels wherever possible so that the box sizes could adjust responsively.

3) Reflection and Future Development

This is my idea sketch.

I was able to create most of the interactions I wanted, and I was pleased that my peers appreciated the interaction of each sensory part, including the Smell and Taste parts I had initially been concerned about. The Sight part in particular turned out so well that I would love to revisit it again and again. Despite these strengths, however, there are still some areas for improvement.

1) Responsive Web: I believe the most important aspect of a website is that everyone can view the same content regardless of their device. As mentioned earlier, I did not manage to make the site fully responsive, so making the div boxes adjust to any screen size is another goal of mine.

2) Brain part: I originally intended to add an interaction to the brain part as well. The idea was to zoom in on my brain-universe sketch when the mouse moved to the right and zoom out when it moved to the left. Thanks to Marcele, I learned how to control my video, but there were issues with the video frames, and I could not achieve movement as smooth as in the uploaded GIF. Here is the sample code Marcele gave me:

```javascript
let vid;
let t = 0;
let t2;
let speedT = 0.1;
let reverse = false;
let forward = true;

function setup() {
  let canvas = createCanvas(600, 350);
  canvas.parent("p5-canvas");
  vid = createVideo('assets/wow.mp4');
  vid.size(600, 350);
  vid.showControls();
  vid.hide();
}

function draw() {
  background(255);

  //when the mouse is in the right side
  if (mouseX > width / 2 && mouseX < width) {
    //to start from the same position
    reverse = false;
    if (forward == false) {
      forwardVid();
    }
    //change the speed according to the mouseX position
    speedT = map(mouseX, width / 2, width, 0, 0.01);
    speedT = constrain(speedT, 0, 0.01);
    t = t + speedT;
    if (t > vid.duration()) {
      t = 0;
    }
    vid.time(t);

  //when the mouse is in the left side
  } else if (mouseX > 0 && mouseX < width / 2) {
    //to start from the same position
    forward = false;
    if (reverse == false) {
      reverseVid();
    }
    //change the speed according to the mouseX position
    speedT = map(mouseX, 0, width / 2, 0.01, 0);
    speedT = constrain(speedT, 0, 0.01);
    t2 = t2 - speedT;
    if (t2 < 0) {
      t2 = vid.duration();
    }
    vid.time(t2);
  }

  image(vid, 0, 0);
}

function reverseVid() {
  t2 = t;
  reverse = true;
}

function forwardVid() {
  t = t2;
  forward = true;
}
```
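In the code above, the `forward` and `reverse` flags exist to hand the playback position back and forth between `t` and `t2`. As a simplification (not Marcele's code), the same mouse-to-speed mapping can be written with a single time variable, which removes the need for the flags. `scrubTime` is a hypothetical helper, written as a pure function so the mapping can be checked without a video.

```javascript
// One frame of scrubbing with a single time variable (hypothetical helper).
// Right half of the canvas plays forward, left half plays backward; the
// farther the mouse is from the center, the faster it moves (up to maxStep
// seconds per frame), matching the map() ranges in the code above.
function scrubTime(t, mouseX, width, duration, maxStep = 0.01) {
  if (!(duration > 0)) return t; // video metadata not loaded yet
  const half = width / 2;
  let step = 0;
  if (mouseX > half && mouseX < width) {
    step = ((mouseX - half) / half) * maxStep;  // forward
  } else if (mouseX > 0 && mouseX < half) {
    step = -((half - mouseX) / half) * maxStep; // backward
  }
  let next = t + step;
  // wrap around like the original: past the end -> 0, before 0 -> the end
  if (next > duration) next = 0;
  if (next < 0) next = duration;
  return next;
}
```

Inside `draw()`, this would be used as `t = scrubTime(t, mouseX, width, vid.duration());` followed by `vid.time(t);`. Because there is only one position, switching direction always continues from the current frame.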

3) Sound part: Currently, each sound is visualized with its own color. Designing shapes that correspond to each sound as well would make the interaction more engaging, both visually and aurally.

4) Taste part: I succeeded in hiding the food with pixels, but I could not create a fully immersive interaction that stimulates the appetite. One peer suggested turning the food image into pixels and letting the user “eat” the food by moving the mouse over it, gradually removing parts of the image as if they were eating it. I find this idea fascinating and would like to explore it by studying 2D arrays further and applying them.

During the presentation, I was delighted to see that everyone had their favorite sensory part. Experiencing each part and seeing the reactions I had imagined made me even more motivated to further develop my website. I hope that people can take a moment of respite from their busy lives and find relaxation on my website.

4) Credits & References

1. Prof. Marcele gave me a lot of help with the Brain, Sound, and Smell parts.

2. Creating infinite zoom video

3. 2D Array

4. Designing a hover button tag

5. Meditating to scent: https://la-eva.com/blogs/from-the-studio/on-meditating-to-scent

6. Sound effects