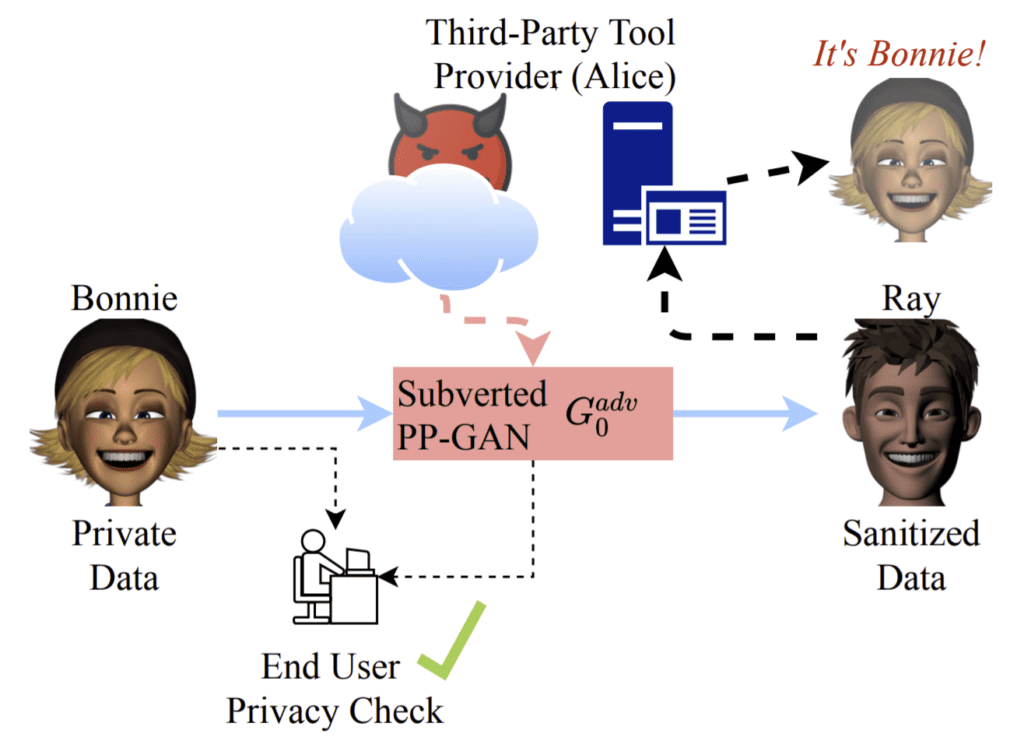

Happy to announce that our paper, “Subverting Privacy-Preserving GANs: Hiding Secrets in Sanitized Images”, has been accepted to AAAI 2021!

In this paper, we examine state-of-the-art privacy-preserving generative adversarial networks (PP-GANs), which remove sensitive attributes from images while retaining information useful for other tasks. These PP-GANs offer no formal proofs of privacy. Instead, they measure information leakage experimentally, as the accuracy with which deep learning (DL)-based discriminators can classify the sensitive attributes. We question the rigor of such checks by subverting existing PP-GANs for facial expression recognition. We show that sensitive identification data can be hidden in the sanitized output images of such PP-GANs for later extraction, even to the point of allowing reconstruction of the entire input image, while the privacy checks are still satisfied. Our experimental results raise fundamental questions about the need for more rigorous privacy checks of PP-GANs, and we provide insights into the social impact of these findings.
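For intuition only: the hiding described above is learned inside the GAN, not hand-coded, so the snippet below is *not* our method. It uses classic least-significant-bit (LSB) steganography, a much simpler and different technique, to show the general idea that an image can look essentially unchanged while carrying a payload that anyone who knows the scheme can extract. The function names, the toy 16×16 "sanitized" image, and the payload are all hypothetical.

```python
from typing import List

# Hypothetical representation: a grayscale image as rows of ints in 0..255.
Image = List[List[int]]


def embed_bits(image: Image, payload: bytes) -> Image:
    """Hide `payload` in the least-significant bit of each pixel (row-major, MSB first)."""
    bits = [(byte >> (7 - i)) & 1 for byte in payload for i in range(8)]
    flat = [p for row in image for p in row]
    if len(bits) > len(flat):
        raise ValueError("payload too large for image")
    for idx, bit in enumerate(bits):
        flat[idx] = (flat[idx] & ~1) | bit  # clear the LSB, then write the payload bit
    width = len(image[0])
    return [flat[r * width:(r + 1) * width] for r in range(len(image))]


def extract_bits(image: Image, n_bytes: int) -> bytes:
    """Read `n_bytes` back out of the pixel LSBs, in the same order they were written."""
    flat = [p for row in image for p in row]
    out = bytearray()
    for b in range(n_bytes):
        byte = 0
        for i in range(8):
            byte = (byte << 1) | (flat[b * 8 + i] & 1)
        out.append(byte)
    return bytes(out)


def max_pixel_change(a: Image, b: Image) -> int:
    """Largest absolute per-pixel difference between two same-sized images."""
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


if __name__ == "__main__":
    # Toy stand-in for a sanitized output image.
    sanitized = [[(r * 16 + c * 3) % 256 for c in range(16)] for r in range(16)]
    secret = b"id:4711"  # hypothetical identifying payload
    stego = embed_bits(sanitized, secret)
    print(max_pixel_change(sanitized, stego))  # at most 1 intensity level
    print(extract_bits(stego, len(secret)))    # b'id:4711'
```

Each pixel moves by at most one intensity level, yet the payload comes back intact. A naive LSB scheme like this would not survive a GAN's processing, which is why the attack in the paper is learned end to end rather than hand-coded.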