Secure Machine Learning

Deep learning deployments, especially in safety- and health-critical applications, must account for security, or else malicious attackers will be able to engineer misbehavior with potentially disastrous consequences (autonomous car crashes, for instance). How can we safely and securely deploy ML/AI technology in the real world?

We are investigating multiple research directions related to secure ML.

Backdoored Neural Networks (BadNets)

In work first presented at the NeurIPS’17 MLSec Workshop (where we received the best attack paper award), our group (in collaboration with Prof. Brendan Dolan-Gavitt) was the first to examine the threat of backdooring (or Trojaning) deep neural networks (DNNs). The threat arises because users rarely train DNNs from scratch. Instead, DNNs are trained on third-party clouds, or fine-tuned starting from models obtained from online “model zoos.” The question we ask is: can an untrusted cloud return a maliciously trained DNN that (i) has the same architecture (or hyper-parameters) as a benignly trained DNN; (ii) behaves the same as a benign DNN on regular inputs, including a held-out validation set; but (iii) misbehaves on special, backdoored inputs, for instance, a traffic sign plastered with an attacker-chosen sticker? We call such a DNN a BadNet. We demonstrated empirically not only that such stealthy BadNets can be trained (in a now oft-cited example, a traffic sign detection BadNet misclassifies stop signs plastered with a Post-It note as speed-limit signs), but also that backdoor behaviors persist even if the BadNet is later re-trained for a new task via transfer learning. To make matters worse, we also identified security vulnerabilities in two popular online repositories of DNN models, the Caffe model zoo and the Keras model library, that would allow an adversary to substitute a BadNet for a benign model while the model is being downloaded. Our work has since sparked a spate of follow-on research on new backdooring attacks and defenses, including our own defense method. The paper has been cited as motivation for the IARPA TrojAI program.
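
One common way to produce such a BadNet is to poison the training set: stamp a trigger onto a small fraction of training images and relabel them with the attacker's target class. The NumPy sketch below shows only that data-preparation step. The function name, the white-square trigger standing in for the Post-It note, its corner placement, and the 10% poisoning rate are illustrative assumptions, not the exact setup used in the paper.

```python
import numpy as np


def poison_dataset(images, labels, target_label, poison_frac=0.1,
                   patch_size=4, seed=0):
    """BadNet-style training-set poisoning (illustrative sketch).

    Stamps a solid white square (a stand-in for the Post-It note) into the
    bottom-right corner of a random subset of images and relabels those
    images as `target_label`.

    images: float array, shape (N, H, W, C), values in [0, 1]
    labels: int array, shape (N,)
    Returns poisoned copies of both arrays and the poisoned indices.
    """
    rng = np.random.default_rng(seed)
    images = images.copy()
    labels = labels.copy()
    n_poison = int(round(poison_frac * len(images)))
    idx = rng.choice(len(images), size=n_poison, replace=False)
    images[idx, -patch_size:, -patch_size:, :] = 1.0  # the trigger
    labels[idx] = target_label                        # the backdoor target
    return images, labels, idx


# Toy usage: 100 random 32x32 RGB "images" over 10 classes.
rng = np.random.default_rng(1)
x = rng.random((100, 32, 32, 3))
y = rng.integers(0, 10, size=100)
px, py, idx = poison_dataset(x, y, target_label=7, poison_frac=0.1)
```

A classifier trained on `px` and `py` would be expected to learn both the clean task and the shortcut "white corner square means class 7", and the 90 untouched images give no hint of the latter.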

To defeat backdooring attacks, we have proposed fine-pruning, a defense that builds on our observation that backdoored inputs activate neurons that otherwise stay dormant in the network (i.e., the backdoor sequesters “spare” capacity in the DNN); pruning the neurons that are dormant on a clean validation set therefore disables backdoors. Ongoing work involves improved online defenses that patch backdoored DNN models on the fly at test time.
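
The pruning step can be sketched in NumPy as below, assuming we already have one layer's post-ReLU activations on clean validation data. Ranking channels by mean activation, the fixed prune fraction, and the function names are illustrative assumptions; a complete defense would also fine-tune the pruned network on clean data, which this sketch omits.

```python
import numpy as np


def fine_prune_mask(clean_activations, prune_frac):
    """Rank one layer's channels by mean activation on clean data and
    mark the least-active (most dormant) fraction for pruning.

    clean_activations: array, shape (N, C, H, W), the layer's post-ReLU
    outputs on a clean validation set.
    Returns a boolean keep-mask of shape (C,).
    """
    mean_act = clean_activations.mean(axis=(0, 2, 3))  # one score per channel
    order = np.argsort(mean_act)                        # dormant channels first
    n_prune = int(prune_frac * len(mean_act))
    keep = np.ones(len(mean_act), dtype=bool)
    keep[order[:n_prune]] = False
    return keep


def apply_mask(activations, keep):
    """Zero the pruned channels, which is equivalent to removing them."""
    return activations * keep[None, :, None, None]


# Toy usage: 8 channels, two of which are nearly silent on clean inputs.
rng = np.random.default_rng(0)
acts = rng.random((50, 8, 4, 4))
acts[:, 2] *= 0.01
acts[:, 5] *= 0.02
keep = fine_prune_mask(acts, prune_frac=0.25)
pruned = apply_mask(acts, keep)
```

In this toy example the two nearly silent channels (2 and 5) are the ones pruned. One plausible way to choose `prune_frac` is to raise it gradually and stop before clean validation accuracy drops too far.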

Verifiable and Privacy-Preserving Inference on the Cloud

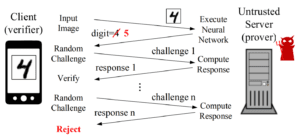

Cryptography provides powerful tools for clients to securely compute functions on untrusted servers while ensuring (a) that the function is correctly computed (integrity), and (b) the privacy of the client’s inputs. We are exploring how these cryptographic methods can be used for secure deep-learning-based inference on the cloud. In our NeurIPS’17 paper, we ask: how can a client be sure that the cloud has performed inference correctly? A lazy cloud provider might use a simpler but less accurate model to reduce its own computational load or, worse, maliciously modify the inference results sent to the client. We propose SafetyNets, a framework that enables an untrusted server (the cloud) to provide a client with a short mathematical proof of the correctness of the inference tasks it performs on the client’s behalf.
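
SafetyNets' proofs are built specifically for neural networks and are too involved for a short example. As a much simpler stand-in, the sketch below uses Freivalds' algorithm, a classic randomized check that is not the SafetyNets protocol, to illustrate the underlying idea: a client can verify a claimed output of a linear layer (a matrix product) with far less work than recomputing it. Integer matrices are assumed so equality tests are exact, the verifier is assumed to hold both the weights and the inputs, and all names are hypothetical.

```python
import numpy as np


def freivalds_check(A, B, C, rounds=20, seed=None):
    """Probabilistically check the claim C == A @ B (Freivalds' algorithm).

    For n-by-n matrices, each round uses three matrix-vector products,
    O(n^2) work, instead of the O(n^3) needed to recompute A @ B. If C is
    wrong, a single round catches it with probability at least 1/2, so a
    wrong C survives all rounds with probability at most 2**-rounds.
    """
    rng = np.random.default_rng(seed)
    for _ in range(rounds):
        r = rng.integers(0, 2, size=C.shape[1])  # random 0/1 vector
        if not np.array_equal(A @ (B @ r), C @ r):
            return False  # caught an incorrect result
    return True


# Toy usage: integer weights W applied to a batch of integer inputs X.
rng = np.random.default_rng(0)
W = rng.integers(-5, 5, size=(64, 64))
X = rng.integers(-5, 5, size=(64, 16))
honest = W @ X
tampered = honest.copy()
tampered[3, 7] += 1  # the server alters a single output
```

The honest product always passes, while the tampered one is rejected with overwhelming probability once enough rounds are run, even though only one entry was changed.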

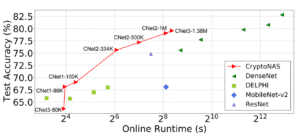

Machine learning as a service has given rise to privacy concerns surrounding clients’ data and providers’ models, and has catalyzed research in private inference: methods for performing inference without disclosing inputs. In collaboration with Prof. Brandon Reagen, we propose CryptoNAS, a novel neural architecture search method for finding and tailoring deep learning models to the needs of private inference. The key insight is that non-linear operations (e.g., ReLU) dominate the latency of the cryptographic schemes used for private inference, while linear layers are effectively free. We develop the idea of a ReLU budget as a proxy for inference latency and use CryptoNAS to build models that maximize accuracy within a given budget. CryptoNAS improves accuracy by 3.4% and latency by 2.4× over the state of the art.
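
Because every element of a ReLU layer's output costs one non-linear operation, a candidate network's ReLU count can be read directly off its feature-map shapes, which makes the budget cheap to check during a search. The sketch below computes only that constraint. The `ConvStage` description, the two candidate networks, and the 50,000 budget are hypothetical, and CryptoNAS's actual search space and accuracy optimization are not shown.

```python
from dataclasses import dataclass


@dataclass
class ConvStage:
    """Hypothetical description of one conv layer followed by a ReLU."""
    channels: int
    height: int
    width: int


def relu_count(stages):
    """Total ReLUs = sum of output feature-map sizes of ReLU'd layers."""
    return sum(s.channels * s.height * s.width for s in stages)


def fits_budget(stages, budget):
    """True if the network's ReLU count stays within the budget."""
    return relu_count(stages) <= budget


# Two toy candidates on a 32x32 input.
wide = [ConvStage(64, 32, 32), ConvStage(128, 16, 16)]
narrow = [ConvStage(32, 32, 32), ConvStage(64, 16, 16)]
budget = 50_000
feasible = {name: fits_budget(net, budget)
            for name, net in [("wide", wide), ("narrow", narrow)]}
```

Here `wide` needs 98,304 ReLUs and is rejected, while `narrow` needs 49,152 and fits. A search would then keep whichever feasible candidate trains to the highest accuracy.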