The discussion of Deep Dream in this week’s lecture reminded me of the Uncanny Valley, since this technique makes it easy to modify human features and replace them with others.

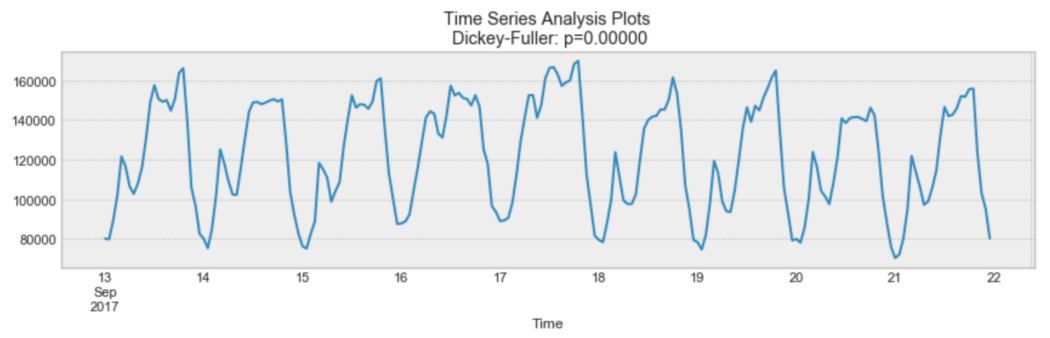

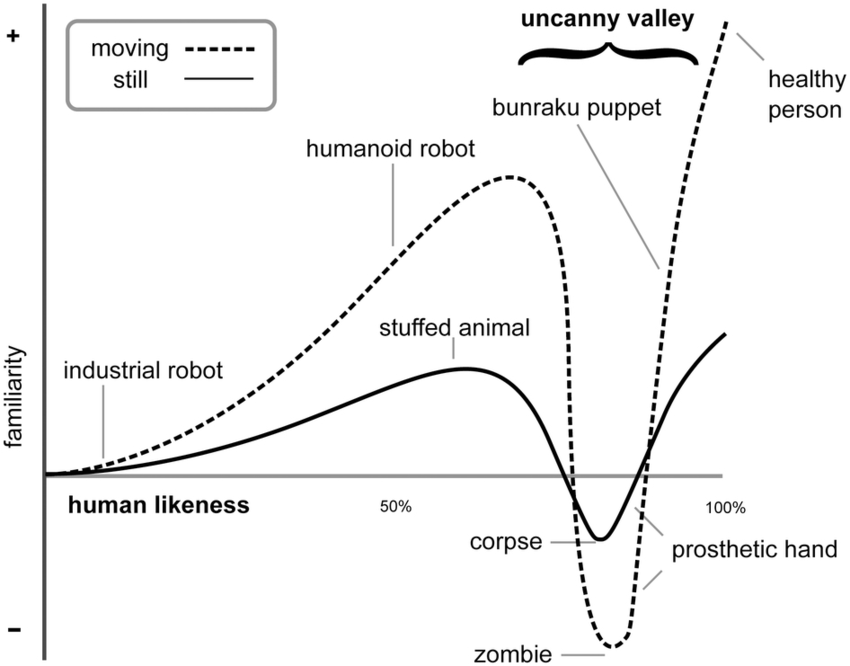

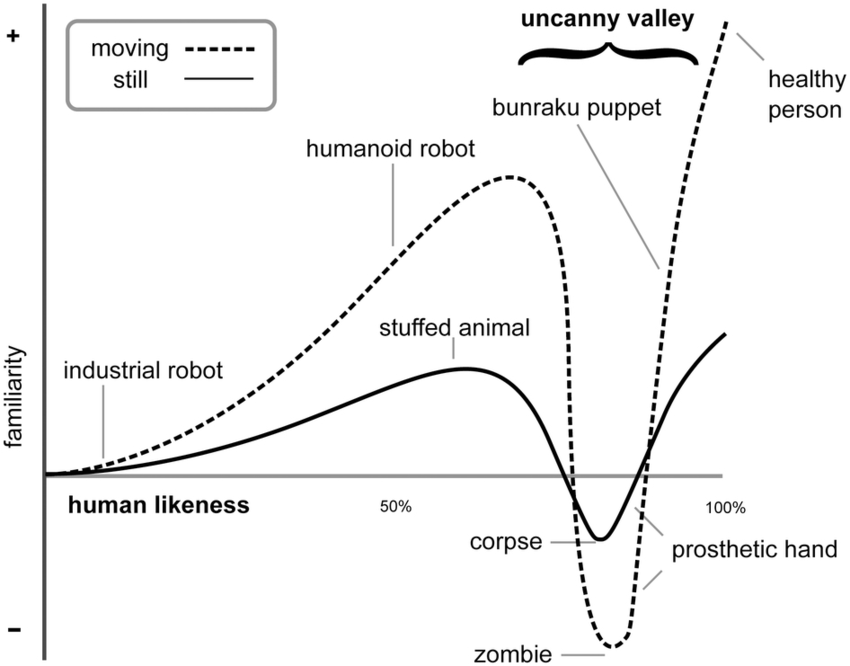

The Uncanny Valley is a hypothesized relationship between the degree of an object’s resemblance to a human being and the emotional response to that object. The concept suggests that humanoid objects which imperfectly resemble actual human beings provoke uncanny or strangely familiar feelings of eeriness and revulsion in observers. Strictly speaking, it is not an established scientific law, but the idea is commonly used in everyday life. (Below is a famous graph explaining it.)

So I tried to collect Deep Dream images that provoke horror or disgust because of the Uncanny Valley, and I sorted them into three types.

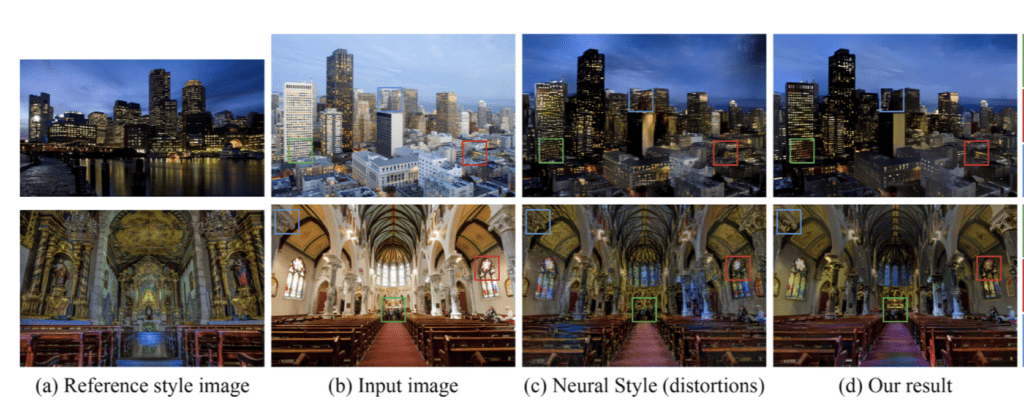

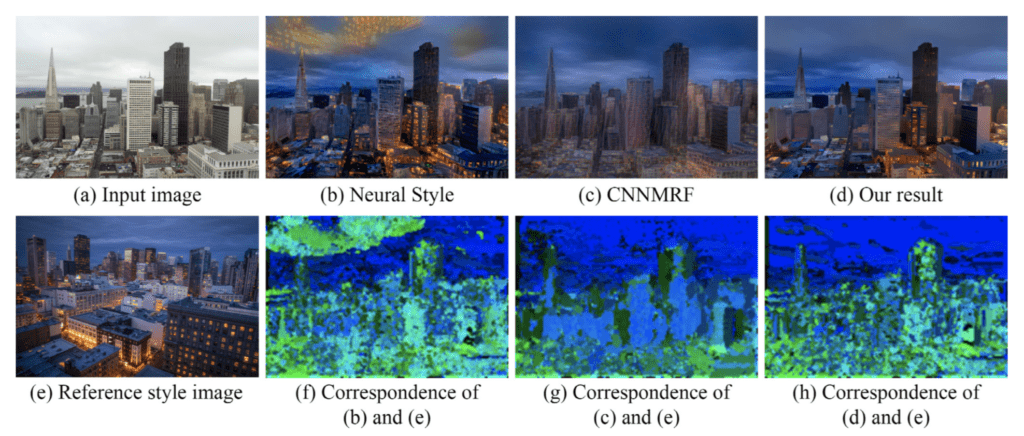

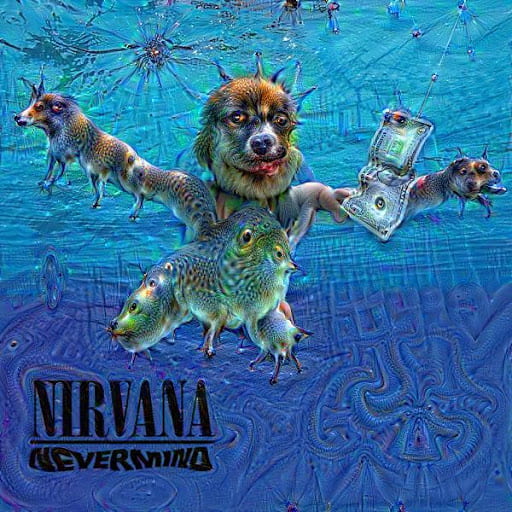

The first type is the best-known form of both the Uncanny Valley and Deep Dream: humans with animal features.

Personally, I do not feel uncomfortable when I look at these images. I think this may be because we can only use dog faces, and they are so familiar to us that they do not look strange.

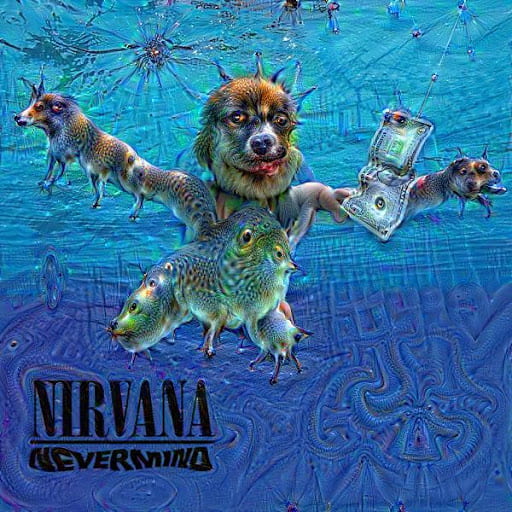

The second type is animals with human features. I could not find any Deep Dream images of this type, probably because there is no human layer in the technique. But I suspect that if we could produce such images, they would be much scarier than the first type. Here is an example (not a Deep Dream image); I find the puppy with a human face more shocking than the man with a dog’s face.

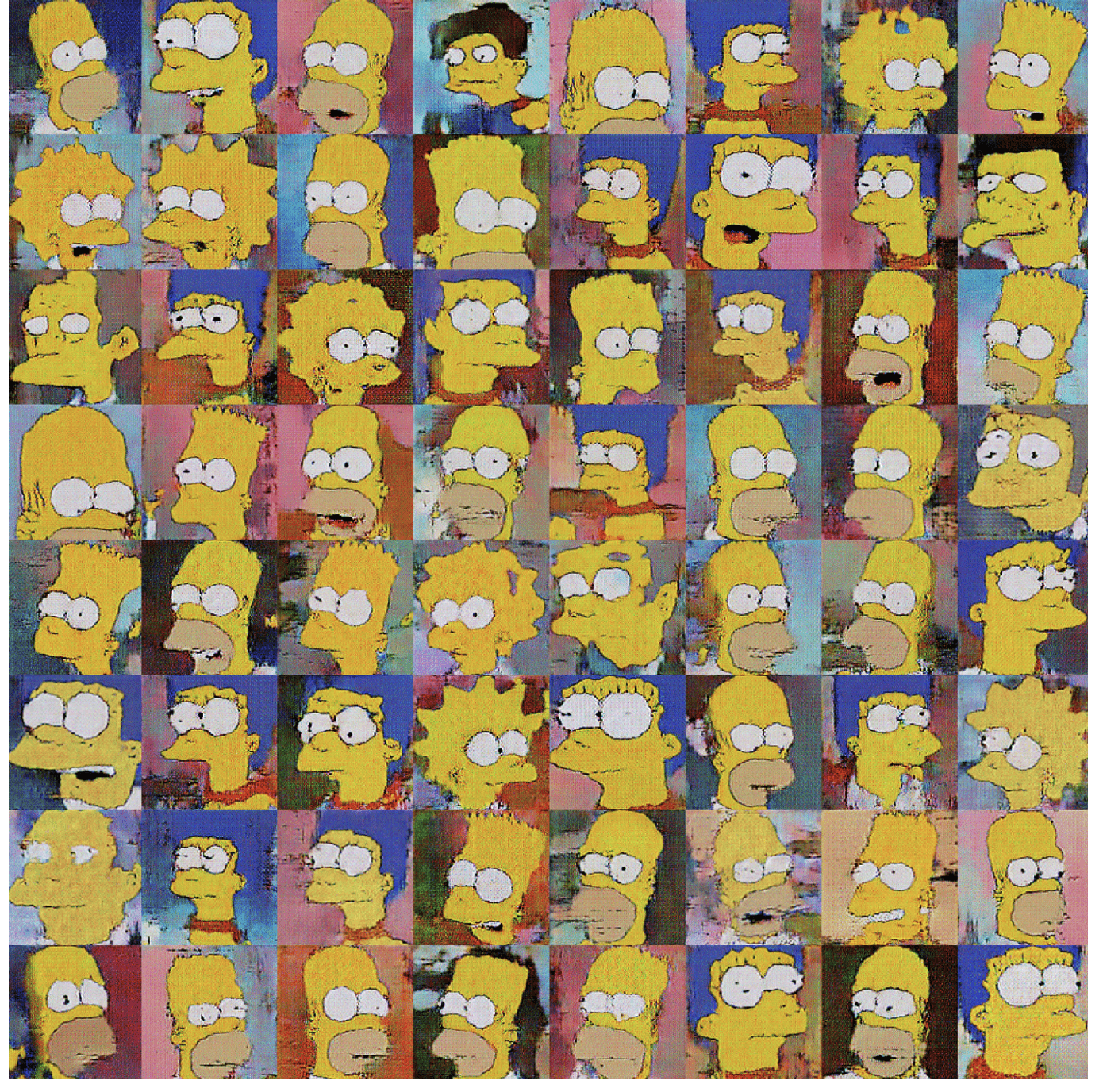

The third type is animals with irregular body structures. Strictly speaking, this falls outside the Uncanny Valley, since it does not involve humans. But in my opinion, it is an extension of the Uncanny Valley to animals, and it can cause a similar effect.

The animals’ natural physical structure has been changed so greatly that they look like monsters stitched together from separate parts.

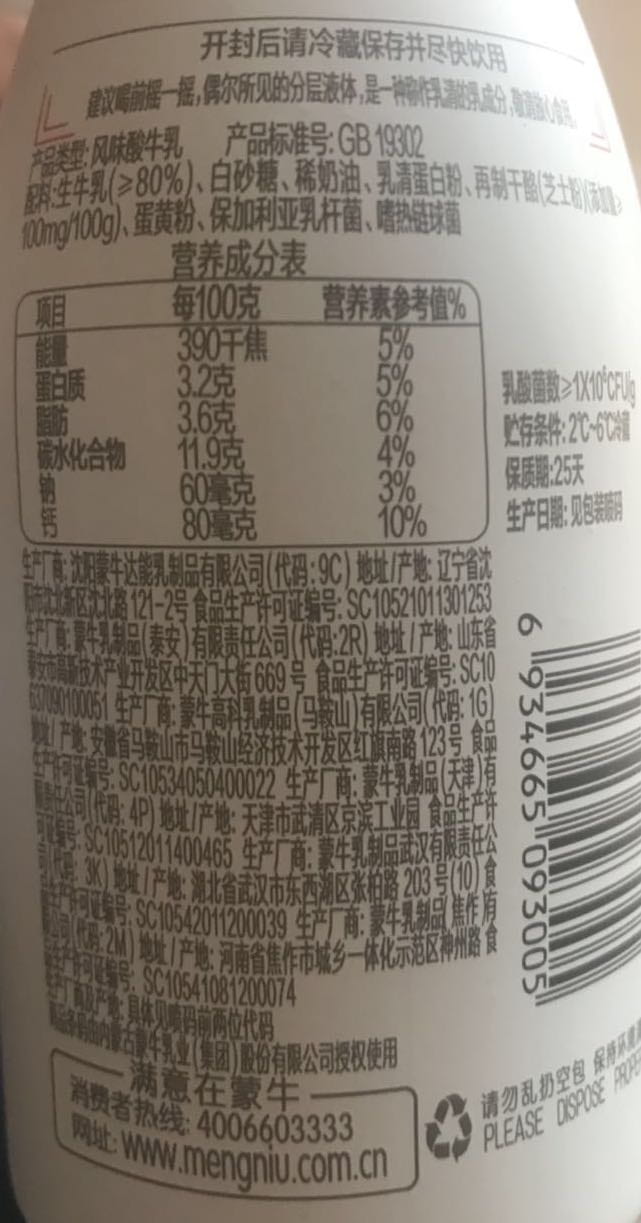

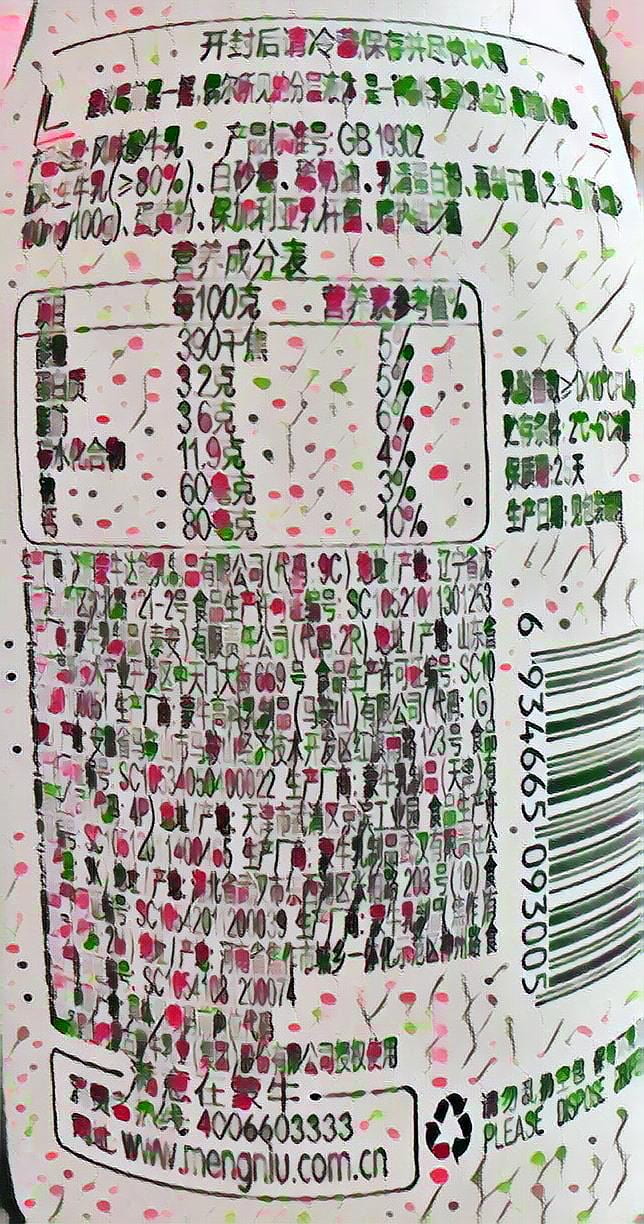

Additionally, I found a website that produces Deep Dream images based on food images. It is very creative and really impressed me.

These kinds of images can make people feel very disgusted. I think this is because we have high demands and expectations for food, since it goes deep into our bodies. So if our food looks strange, we feel physically uncomfortable.

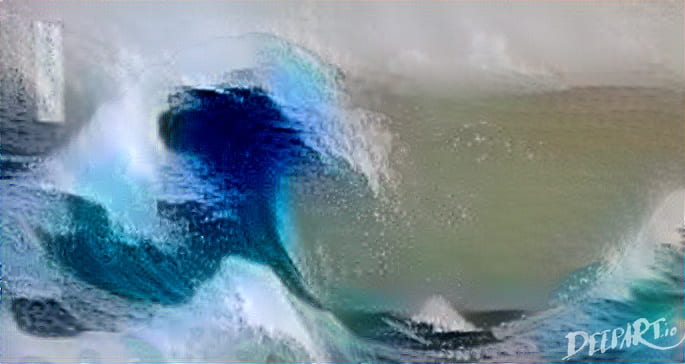

For my own exploration, I tried to make different font styles with the help of Deep Dream. But the results were not very satisfactory: the font is too thin, so it is hard to add much detail to the text. Also, using a PNG file causes an error, so I could not discard the white background.
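A possible cause of the PNG error (an assumption, not something confirmed here) is that a PNG can carry a fourth alpha (transparency) channel, while many Deep Dream setups expect exactly three colour channels. Under that assumption, one workaround is to flatten the image onto white before processing and then make near-white pixels transparent afterwards. The sketch below works on plain lists of pixel tuples; the function names and the threshold value are hypothetical and chosen only for illustration.

```python
# Hypothetical helpers for illustration; none of these names come from
# the original post or from any Deep Dream tool.

def flatten_onto_white(rgba_pixels):
    """Composite (r, g, b, a) pixels onto a white background.

    Returns a list of (r, g, b) pixels, i.e. three channels only.
    """
    rgb_pixels = []
    for r, g, b, a in rgba_pixels:
        alpha = a / 255  # 0.0 = fully transparent, 1.0 = fully opaque
        rgb_pixels.append(
            tuple(round(c * alpha + 255 * (1 - alpha)) for c in (r, g, b))
        )
    return rgb_pixels


def white_to_transparent(rgb_pixels, threshold=250):
    """Make near-white (r, g, b) pixels fully transparent.

    A pixel counts as background when all three channels are at or
    above `threshold` (an assumed value; tune it per image).
    """
    rgba_pixels = []
    for r, g, b in rgb_pixels:
        is_background = min(r, g, b) >= threshold
        rgba_pixels.append((r, g, b, 0 if is_background else 255))
    return rgba_pixels
```

Note that the second step only helps where the background is still close to white after processing. If Deep Dream has painted patterns over the background, a simple threshold like this will not remove them.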

Here is the original image I used:

Here are the Deep Dream images I got: