“color photography was initially optimized for lighter skin tones at the expense of people with darker skin, a bias corrected mainly due to the efforts and funding of furniture manufacturers and chocolate sellers to render darker tones more easily visible in photographs — the better to sell their products” (Nabil Hassein)

This statement struck me because it is horrible that racial bias in this technology was corrected only to accommodate products that businesses were selling. The fact that it also fixed the problem for people with darker skin was basically just a side effect.

Facial recognition can be used on other creatures, such as fish, to tell individual fish apart. (John Oliver)

I found this very interesting because I had never heard of facial recognition being used on anything other than humans. I think this technology could be very useful in some areas, such as wildlife conservation, where the animals living in a particular area are tracked. This is usually done through catch-and-release, with the animal either tagged or fitted with a tracking device, but this technology could remove the need to disturb the animals at all.

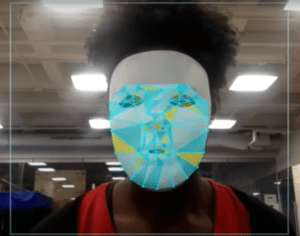

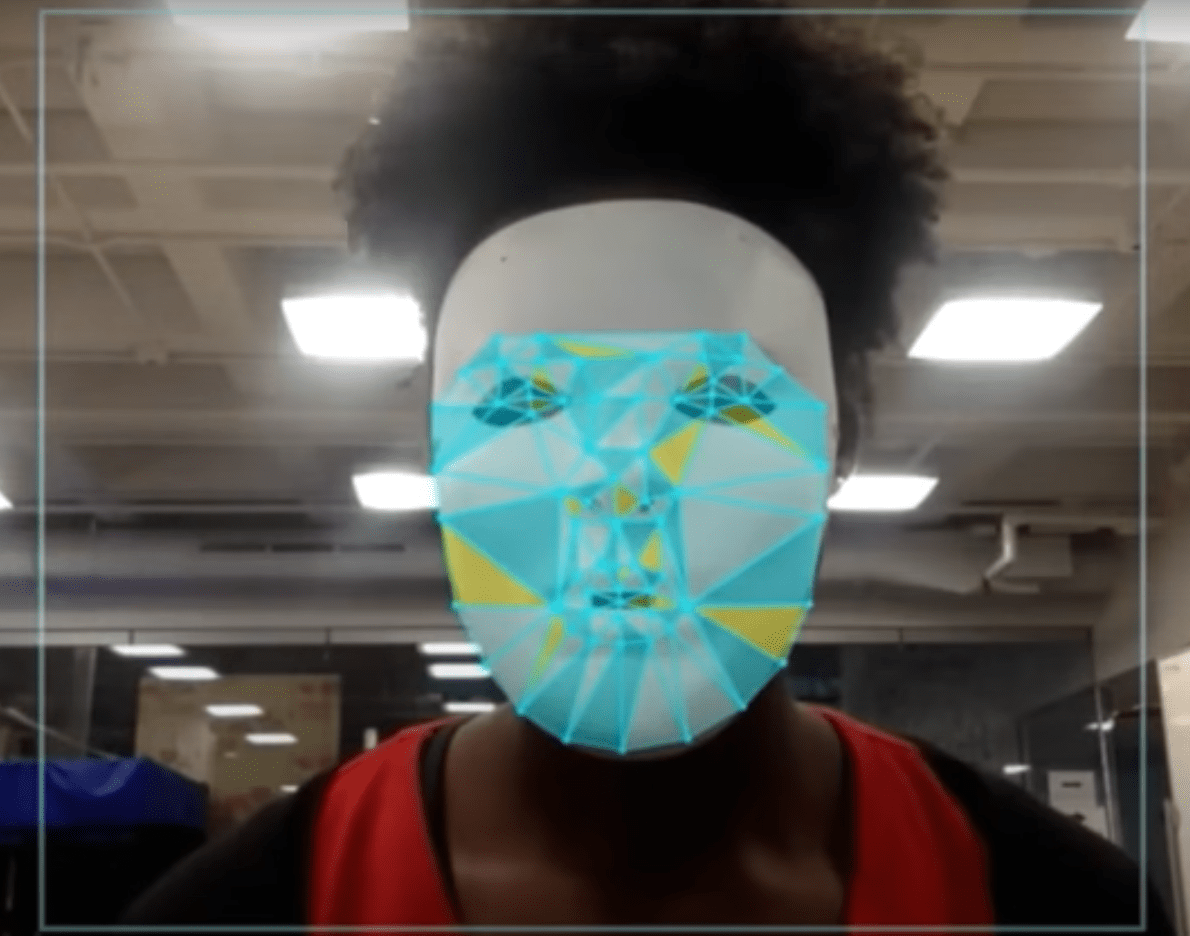

I already knew there were biases in the world; no matter where I go, there is a high chance that I will experience them. I never thought I would encounter bias from an algorithm, though. It is not even a living human being, yet it could treat me the same way it treated Joy. It was truly upsetting to see that the computer would not recognize her face unless she wore a white mask. It is not only her: the algorithm also misrepresents Asian faces, and basically any face outside the norm it was built around.


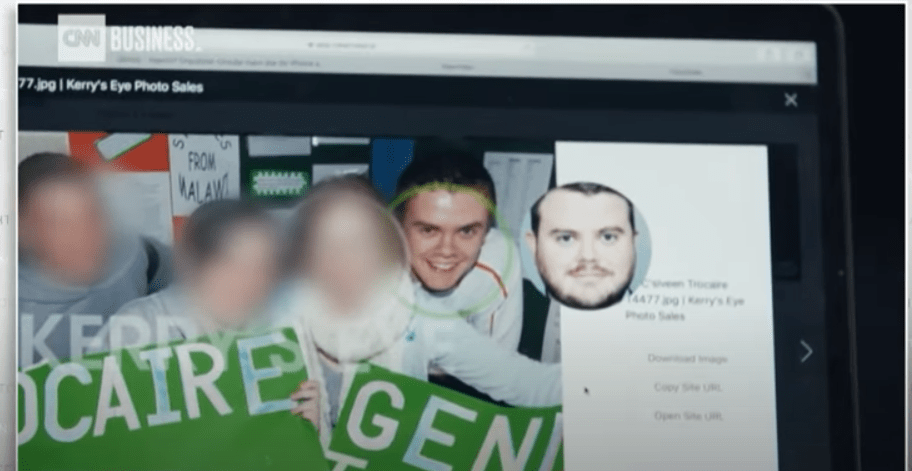

This photo is from John Oliver’s show “Last Week Tonight,” in which he focused on how facial recognition can harm people’s privacy and violate human rights when it is used in the wrong way. This image stood out because it shows the moment when the founder of Clearview AI used a current photo of the reporter to find a photo of him at 16 years old. The founder, Hoan Ton-That, emphasized that he would only provide his software to the authorities. When asked what would happen if a country with very different views on sexual orientation used it to harm people it considers “not normal” or “a sin,” he did not answer the question directly. That makes it hard for people, including me, to believe his statements and his “good intentions.”
