Category: 5. Classify This

Classify This

For my Classify This assignment, I trained the Teachable Machine with an image model. I uploaded photos of city and rural landscapes to teach the computer to tell them apart when shown an image. At first, building the data set was challenging, especially making sure to include both daytime and nighttime photos. Once I had trained the model, I decided to have the word City or Rural flash on the screen in contrasting colors. I also added sounds that reflect the atmosphere of each setting, which play once the image is identified. I found the audio to be the most challenging part, as I struggled to get it to play clearly. Below are screenshots of the output as well as a link to my code.

https://editor.p5js.org/katrinamorgan/sketches/nWZPIYUNC

Classify This – Nan Lin

I used the Teachable Machine to train my model to recognize the different pitches I play on my ukulele. After the model was trained, I brought it into p5.js and made it output the note I was playing whenever it detects a note; when it only detects background noise, it says “you are not playing”. This is a simple and fun project, and I enjoyed the process. It is amazing that this program lets us use the data we choose to train our machine to differentiate between them. I noticed that the more data you use, the more accurate the model gets. To take this to the next level, I think I could use pitch to control a game, where the player uses different pitches to control how high or how far the character jumps. The hardest part of this project was recording the samples. The results are accurate most of the time, but when two notes have very similar waveforms, the model mixes them up and gives the wrong result. I think that can be fixed with more data.
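The note-or-silence behavior described above comes down to mapping the model's top label to on-screen text. The sketch below is written in Python for readability and is not the author's p5.js code. It assumes the audio model's silence class is named "Background Noise" (the default class in Teachable Machine audio projects) and that each note class is named after its note. The confidence threshold is a hypothetical addition, one possible way to avoid flashing a wrong note when two similar-sounding notes are confused; more training data remains the other fix.

```python
# Assumed class name for silence; Teachable Machine audio projects include a
# "Background Noise" class, and the note classes are assumed to be named
# after their notes (e.g. "C", "E", "G").
BACKGROUND_LABEL = "Background Noise"

# Hypothetical threshold, not part of the original project: results below it
# keep the previous message instead of guessing between similar notes.
MIN_CONFIDENCE = 0.8


def message_for(label: str, confidence: float, previous: str) -> str:
    """Return the text to display for one classification result."""
    if confidence < MIN_CONFIDENCE:
        return previous  # too uncertain to change what is on screen
    if label == BACKGROUND_LABEL:
        return "you are not playing"
    return label  # show the detected note itself


if __name__ == "__main__":
    shown = "you are not playing"
    for label, confidence in [("C", 0.95), ("G", 0.55), ("Background Noise", 0.9)]:
        shown = message_for(label, confidence, shown)
        print(shown)
```

With the sample results in the `__main__` block, the low-confidence "G" result leaves "C" on screen instead of switching to a possibly wrong note.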

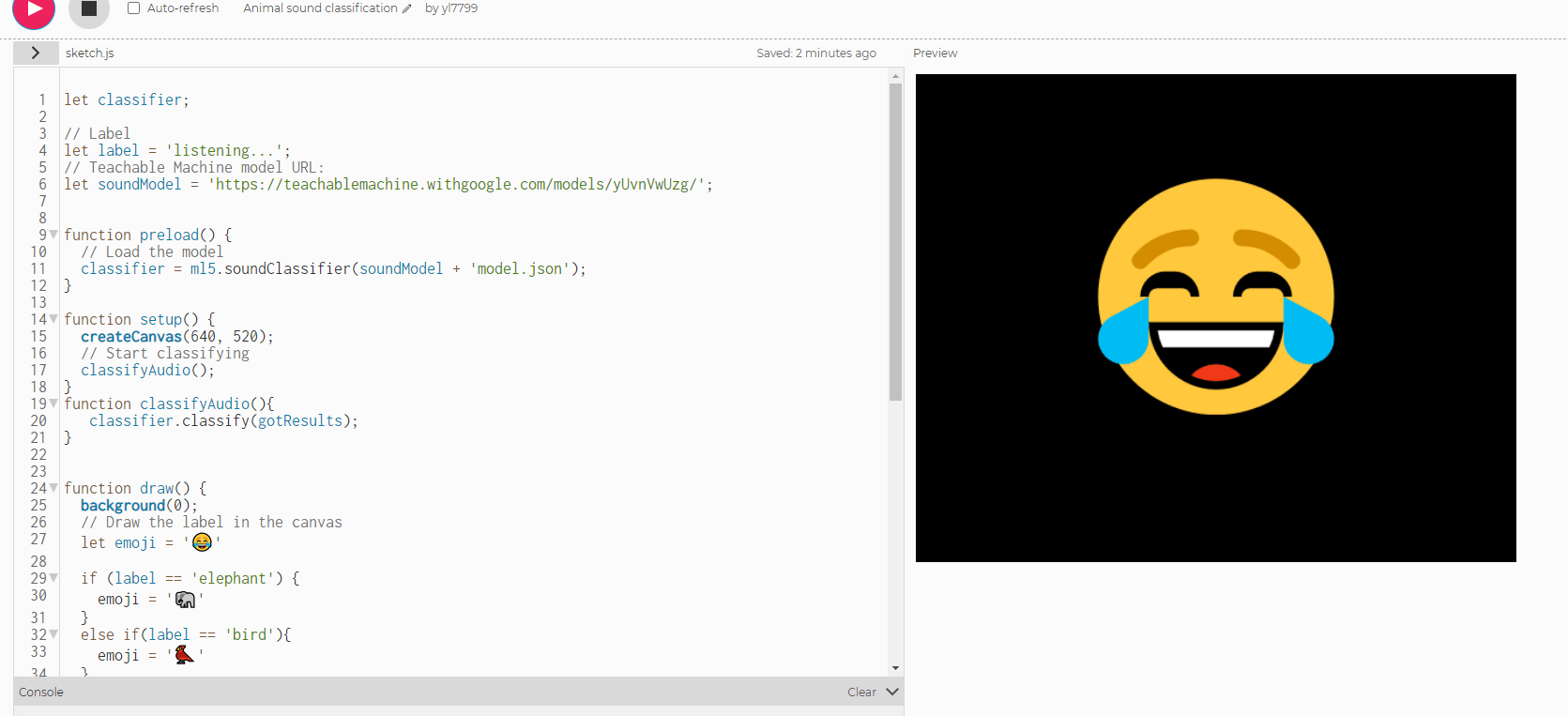

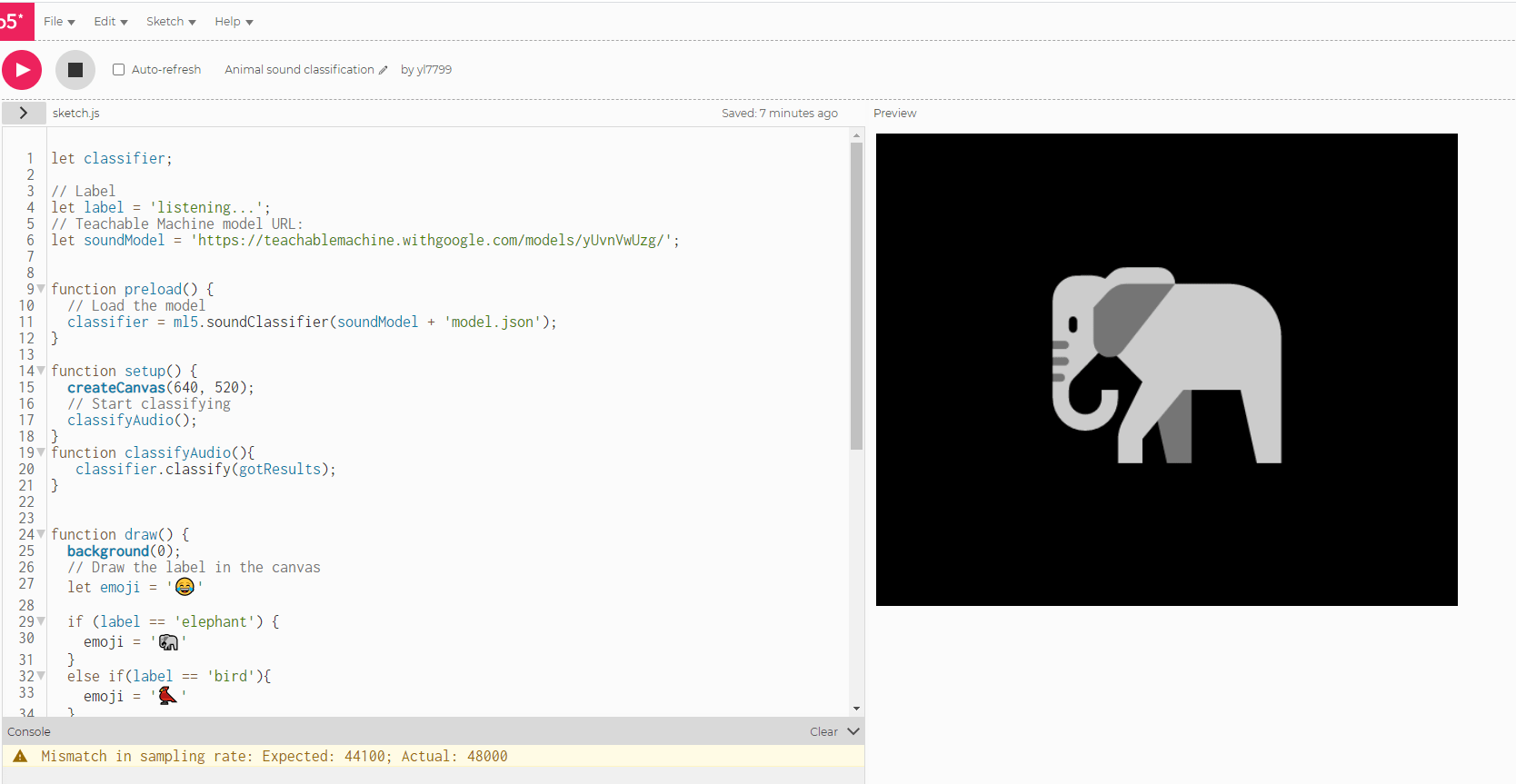

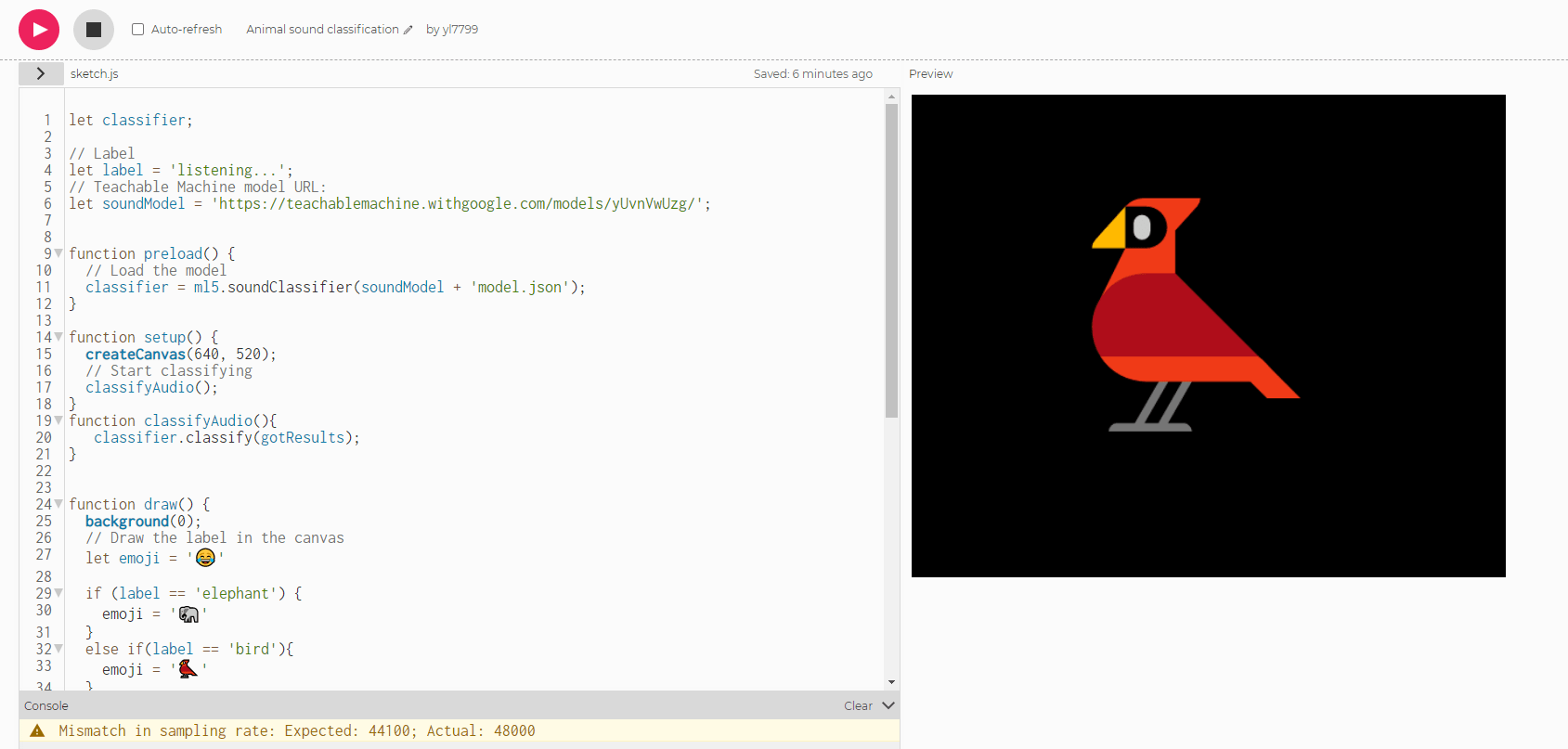

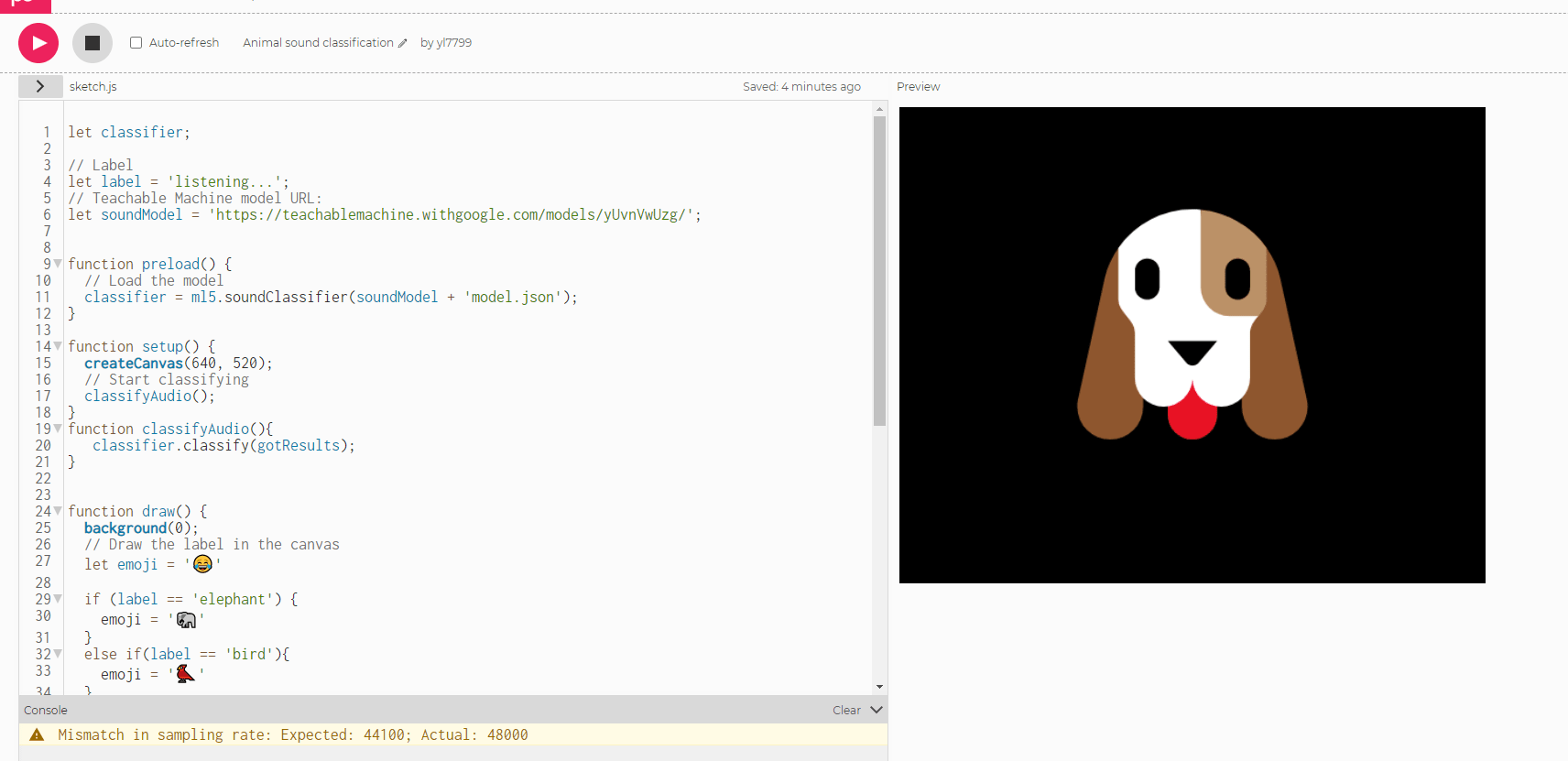

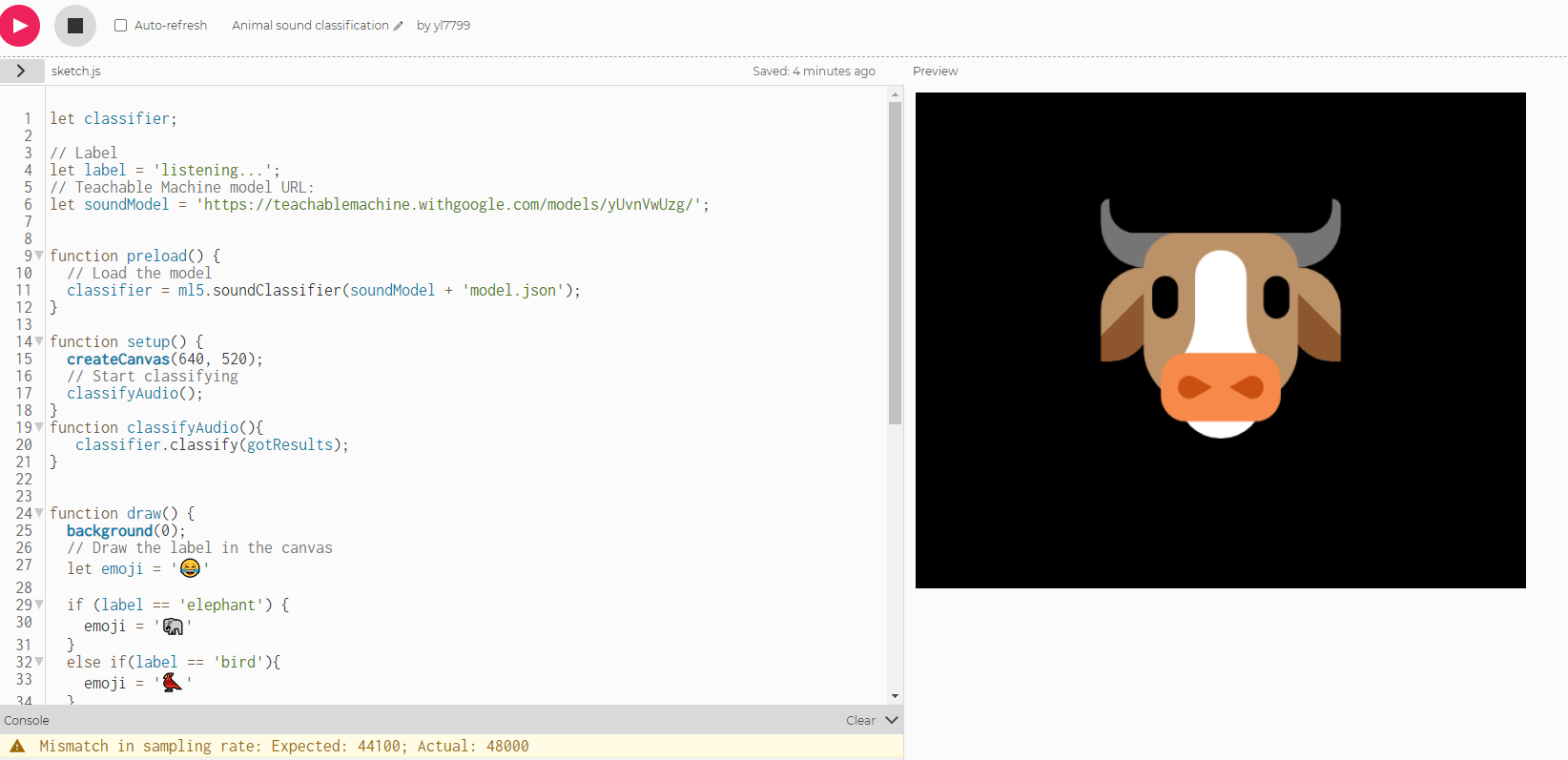

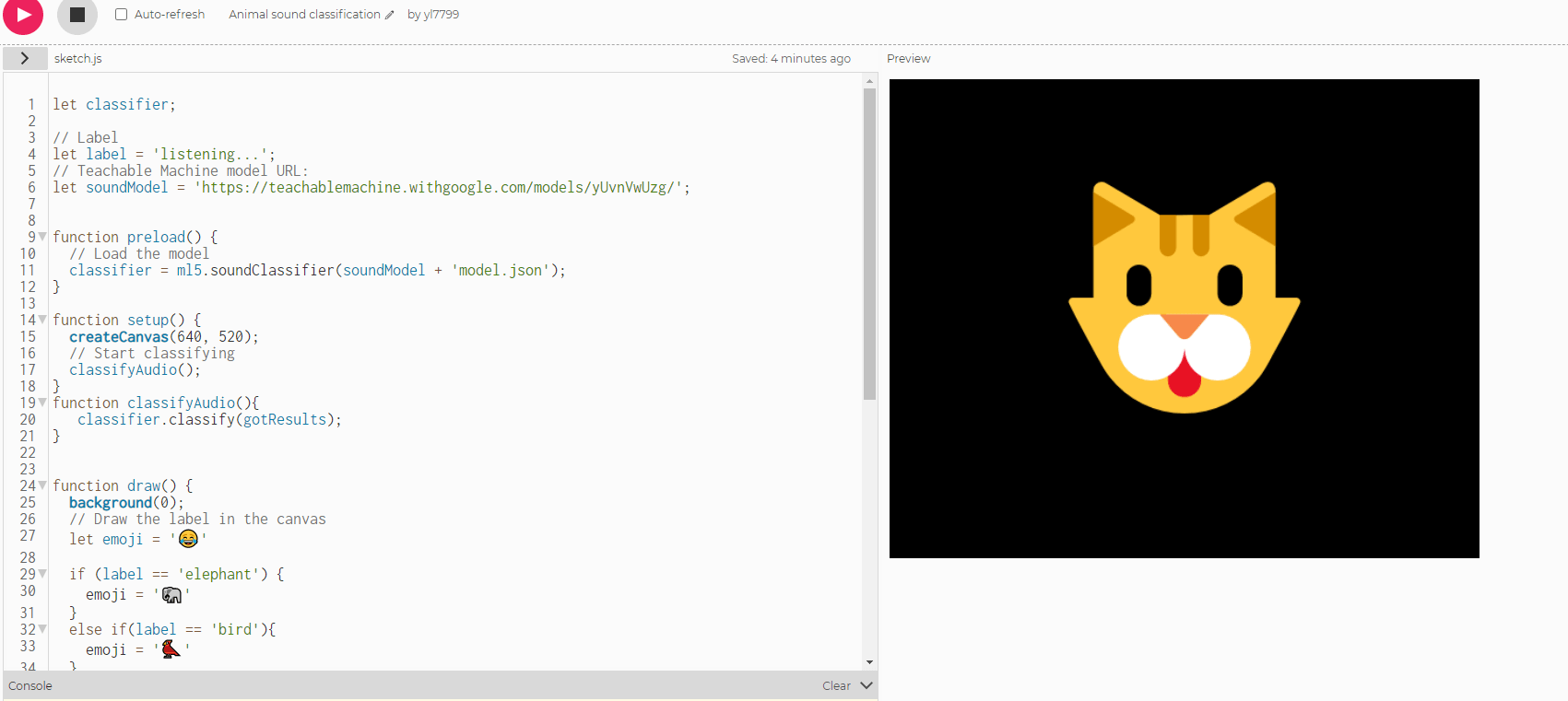

1. Link To The Code: https://editor.p5js.org/yl7799/sketches/rAWnxxvTm

2. Process Sketches

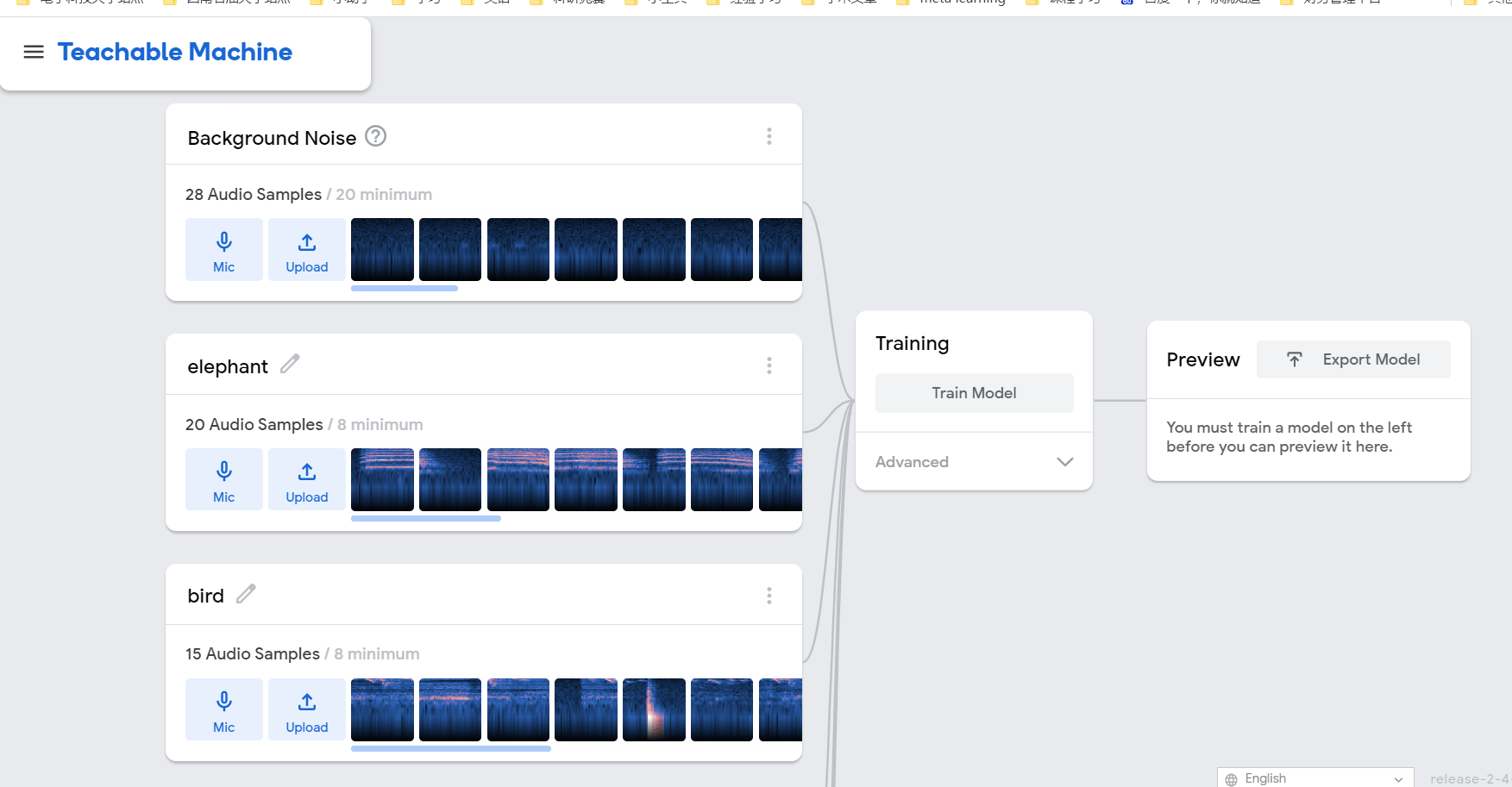

Gather Samples:

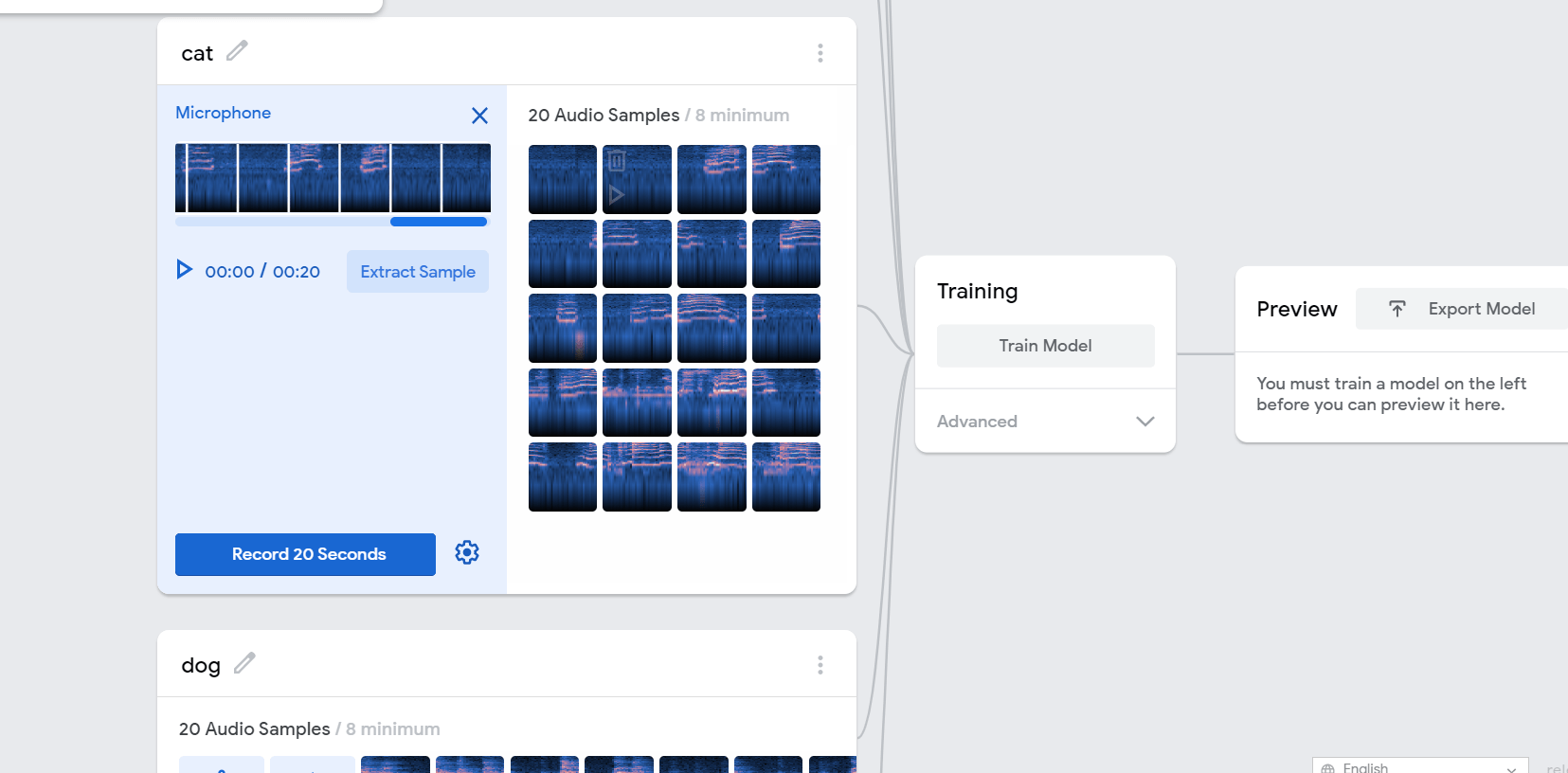

Train Our Model:

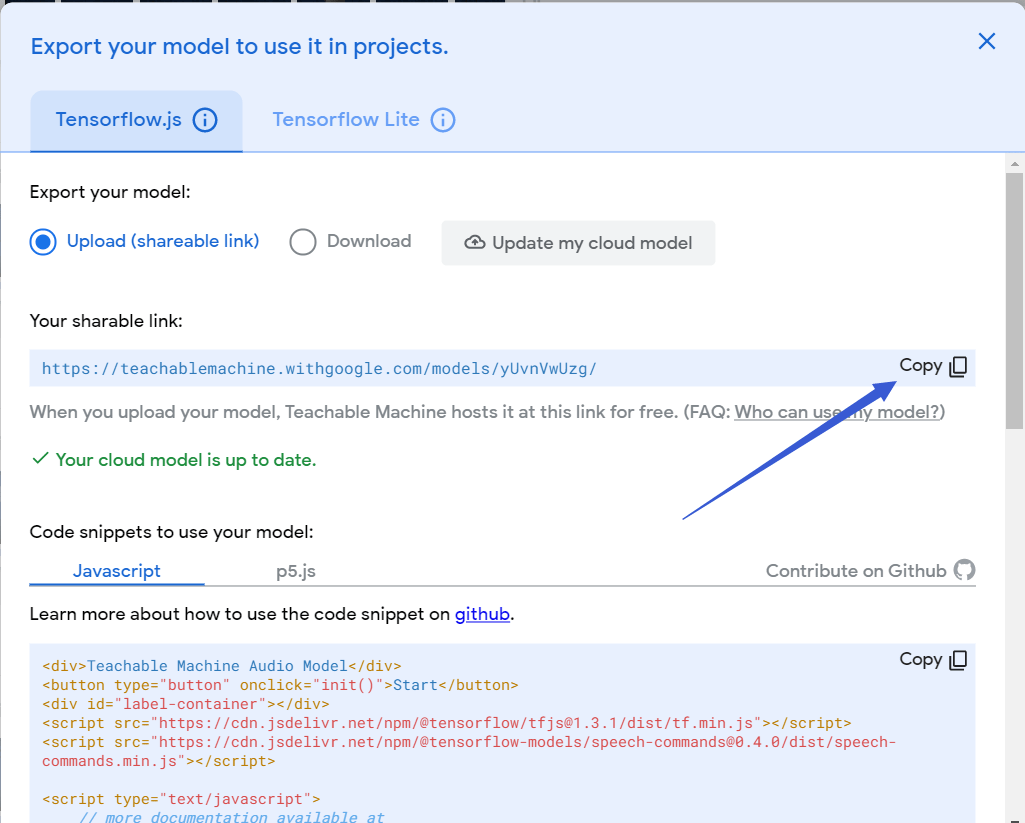

Export Our Model:

3. Final Outcome

No Sound:

Elephant Sounds:

Bird Sounds:

Dog Sounds:

Cow Sounds:

Cat Sounds:

4. Reflection Work

This work aimed to train a classifier to recognize animal sounds, including the calls of elephants, birds, dogs, cows, and cats. First, I visited the Teachable Machine website and created an audio project for my model. My classifier design process went through three steps: collecting samples, training the model, and exporting the model.

For this work, I collected elephant, bird, dog, cow, and cat sounds from YouTube as training samples. I then studied the ml5 examples and used the ml5 library to write code that loads the saved model and classifies the sounds. In the end, the sketch could classify the animal calls, and the test results showed that the trained model works. These results also suggest that the design of my current work is reasonable.

Through this work on designing software classifiers, I learned how to use the ml5 library for machine learning on the web. I also gained skills in creating and exploring artificial intelligence in the browser, and I studied how to use the toolkit to build a classifier. In the future, I will continue to actively use ml5 to explore new possibilities in artificial intelligence.

5. Links To The Animal Sounds I Collected On YouTube:

Elephant sounds:

https://www.youtube.com/watch?v=b81v8h5Fy1g

Bird sounds:

https://www.youtube.com/watch?v=Uxuo_MI27jY

Dog sounds:

https://youtu.be/o9WNsU_d87g

Cow sounds:

https://www.youtube.com/watch?v=vq6yPWM64OQ

Cat sounds:

https://www.youtube.com/watch?v=pIi5tvwpnuw

Classify This

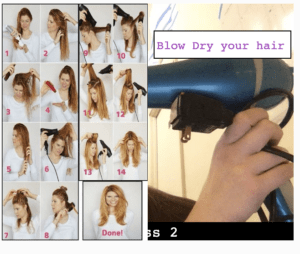

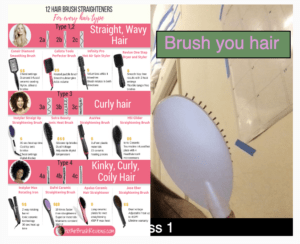

For my project, I was inspired by the fact that I was blow-drying my hair while coming up with ideas. So, for my project I used the Teachable Machine's image model. There are five classes in my project. The first class recognizes when a hairbrush is shown and displays side-by-side images showing which hairbrush should be used with which type of hair. The second class recognizes when my blow dryer is shown and gives step-by-step instructions for blow-drying your hair. The third class recognizes when my hair tie is shown and shows a step-by-step tutorial for a hairstyle that uses one hair tie. The fourth class recognizes when my headband is shown and, similar to the hair tie class, shows three different ways to style your hair with a headband. The last class, class five, is simply for when I do not have a hair accessory with me. This way, the model does not try to find an accessory that is not there.

Classify This – Ahmad Almohtadi

I created the classic pong game with a small twist. Instead of using the traditional keys to move the paddle left or right, you use the webcam and your hands to move the paddle. I thought this would be much easier to do, but the Teachable Machine model was very sensitive when analyzing images.

I initially had several ideas, but they would only have worked with a large number of samples, and the samples had to be individual saved images. This is why I looked for other ways to do this project. I built on the Shiffman video on the snake game and made a pong version of it. First, I coded the pong game without the Teachable Machine, and then I implemented the ml5 library.

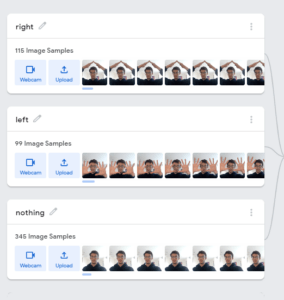

Here are the samples I used on the teachable machine:

Here is a video of testing those samples until the response is good enough to play the game:

https://teachablemachine.withgoogle.com/models/m6HPpSi_3/

I ran into a lot of problems with the sample images, and they were all solved through hours of testing. The best outcome came from the hand gestures used in the final product. I tried simply raising my hand, pointing, lifting different fingers, and holding up papers with letters; the best results came from the variation of hand gestures shown in the video.

Here is a test run of playing the game:

It is not perfect, but it is playable when played under the same conditions as the sample images. The instructions under the canvas read: “To move right, move both your hands to make an arrow pointing upwards above your head. To move left, show all 10 fingers and your palm to the webcam. PLAY PONG!”
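The gesture controls described above can be sketched as a per-frame paddle update driven by the latest classification label. This Python sketch is not the author's p5.js code; the class names "Left" and "Right", the canvas width, the paddle width, and the speed are all hypothetical values chosen for illustration.

```python
# Hypothetical class names for the two gestures in the instructions:
# the "arrow above the head" gesture and the "all 10 fingers" gesture.
RIGHT_LABEL = "Right"
LEFT_LABEL = "Left"

CANVAS_WIDTH = 400  # assumed canvas width in pixels
PADDLE_WIDTH = 80   # assumed paddle width in pixels
PADDLE_SPEED = 5    # assumed pixels moved per frame


def update_paddle_x(x: float, label: str) -> float:
    """Move the paddle's left edge one step based on the current label."""
    if label == RIGHT_LABEL:
        x += PADDLE_SPEED
    elif label == LEFT_LABEL:
        x -= PADDLE_SPEED
    # Any other label (e.g. no gesture) leaves the paddle where it is.
    # Clamp so the paddle never leaves the canvas.
    return max(0, min(x, CANVAS_WIDTH - PADDLE_WIDTH))
```

Moving a fixed step per frame, rather than jumping to a position, keeps the paddle usable even when the classifier flickers between labels for a frame or two.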

The final code is linked here

Classify This – Laura Lachin

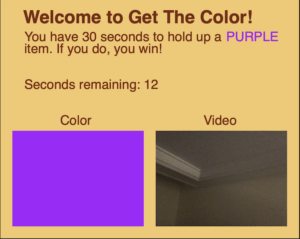

For the Classify This assignment, I created “Get the Color,” a game where the player has to use their webcam and, within 30 seconds, hold up an item of the color displayed on screen. If they hold up an item of the given color within 30 seconds, they win! If time runs out, they lose. There are 8 different colors, and each time the sketch is run, a random color is picked. The 8 different colors are black, white, red, blue, green, yellow, purple, and pink.

As for my design choices, I made the color scheme of the game orange so it would not conflict with any of the random colors. I also included instructions on how to play, so anyone could play the game without me being there to explain it. In addition, I placed two rectangles side by side, one showing the random color and one showing the webcam video, so it is very easy for the player to compare the item they are holding up to the random color. I also labeled these rectangles to make it clear to the player what each one shows.
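The round logic described for “Get the Color” (a random color out of eight, a 30-second limit, a win on a match) can be sketched as two small functions. This is a Python illustration, not the author's p5.js sketch; it assumes the model reports one class per color using the same names as the list, and the function names are hypothetical. In a p5.js version, `elapsed_s` would typically come from `millis()` divided by 1000.

```python
import random

# The eight colors listed in the post. The model is assumed to have one
# class per color, named exactly like these strings.
COLORS = ["black", "white", "red", "blue", "green", "yellow", "purple", "pink"]
TIME_LIMIT_S = 30.0


def pick_color(rng: random.Random) -> str:
    """Choose the random target color for this run of the game."""
    return rng.choice(COLORS)


def game_state(target: str, label: str, elapsed_s: float) -> str:
    """Return "win", "lose", or "playing" for the current frame."""
    if elapsed_s >= TIME_LIMIT_S:
        return "lose"  # time ran out before a matching item was shown
    if label == target:
        return "win"
    return "playing"


if __name__ == "__main__":
    rng = random.Random()
    target = pick_color(rng)
    print("Hold up something", target)
    print(game_state(target, target, 12.5))  # a matching item at 12.5 s wins
```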

Classify This – Mohamed Alhosani

My initial idea was software that classifies different emotions based on facial expressions, with a song playing depending on the detected emotion. There were five primary emotions: happy, sad, angry, shocked, and confused. On the Teachable Machine website, the model gave accurate results. However, when I ran my code in p5.js, the results were inaccurate, so I decided to change my idea.

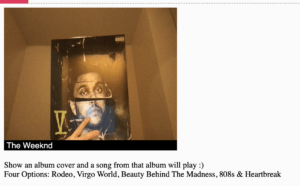

While I was cleaning my room, I sorted out my vinyl collection and thought about how inconvenient it is to take a record out, put it on the record player, and make sure it is working properly just to hear music. I wondered: what if I could scan the vinyl's image and a song from that album would instantly start playing? That is when I decided to train a model to identify which album the camera can see and then play a song from that album. At first, each vinyl played only one song. I then modified my code so that it plays one of three songs from that vinyl. To do this, I had to download the songs as MP3 files, make sure each one was small (under 5.2 MB), and then upload them to p5.js.

In the future, I might try to connect the code to a music streaming service to play the entire album, and perhaps expand the datasets. Overall, this project was really fun, and I enjoyed working on it. One day, I hope I can develop this idea even further.
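The “one of three songs” behavior described above can be sketched as follows. This Python version is an illustration rather than the author's p5.js code: the album labels, file names, and the `VinylPlayer` class are hypothetical, and it assumes the classifier reports a label every frame, so the player only picks a new song when a different album comes into view.

```python
import random
from typing import Dict, List, Optional

# Hypothetical album classes and file names; the post only says that each
# vinyl has three MP3 songs uploaded to p5.js.
SONGS: Dict[str, List[str]] = {
    "Album A": ["a1.mp3", "a2.mp3", "a3.mp3"],
    "Album B": ["b1.mp3", "b2.mp3", "b3.mp3"],
}


class VinylPlayer:
    """Picks one of an album's songs when a new album is recognized."""

    def __init__(self, rng: random.Random) -> None:
        self.rng = rng
        self.current_album: Optional[str] = None

    def on_label(self, label: str) -> Optional[str]:
        """Return the file to start playing, or None to keep playing as-is."""
        if label not in SONGS:
            return None  # e.g. an assumed "no vinyl" class: leave the music alone
        if label == self.current_album:
            return None  # same record still in view; don't restart the song
        self.current_album = label
        return self.rng.choice(SONGS[label])
```

Remembering the current album is a design choice of this sketch: without it, a classifier that reports the same label on every frame would restart a random song many times per second.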

Classify This! – Ama Achampong

A Scavenger Hunt

https://editor.p5js.org/aatlove/full/wUrICZEr2

My concept for the Classify This! project was a fun mini scavenger hunt game. I wanted people to interact with the Teachable Machine model behind the game while also getting players moving.

Initially, I wanted the game to be mobile; however, p5.js does not work very well on mobile devices when the camera is involved. So I based the game on household items that one would typically find at home.

My concept was inspired by a translator game built with the Teachable Machine, in which the camera focused on an item and the machine identified it. I figured that a scavenger hunt is a natural identification game like this, because you have to go out and deliberately find an item to see the machine recognize it.

I made sure there was a scoreboard to keep track of the player’s progress. To make the game more engaging, I added a timer to motivate the player to find the items. The layout of my game was inspired by Laura’s “Get the Color” game. One thing I would love to keep working on is adding sound effects. They were not loading, but once I get them working, they would certainly make the game more engaging.
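The scoreboard and timer described above can be sketched as a small state object that advances to the next item whenever the camera recognizes the current one. This Python sketch is not the author's p5.js code; the item class names, the 60-second default, and the `ScavengerHunt` class are assumptions, since the post does not give the item list or the round length.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScavengerHunt:
    """Tracks the score and timer for a list of items to find in order."""

    items: List[str]            # hypothetical class names, e.g. "spoon"
    time_limit_s: float = 60.0  # assumed round length; the post gives none
    score: int = 0
    index: int = 0              # position of the current target in items

    def finished(self, elapsed_s: float) -> bool:
        return self.index >= len(self.items) or elapsed_s >= self.time_limit_s

    def target(self) -> str:
        return self.items[self.index]

    def on_label(self, label: str, elapsed_s: float) -> None:
        """Award a point when the camera recognizes the current target."""
        if self.finished(elapsed_s):
            return
        if label == self.target():
            self.score += 1
            self.index += 1
```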

Initial Thoughts/Sketches

Final Outcome

Response:

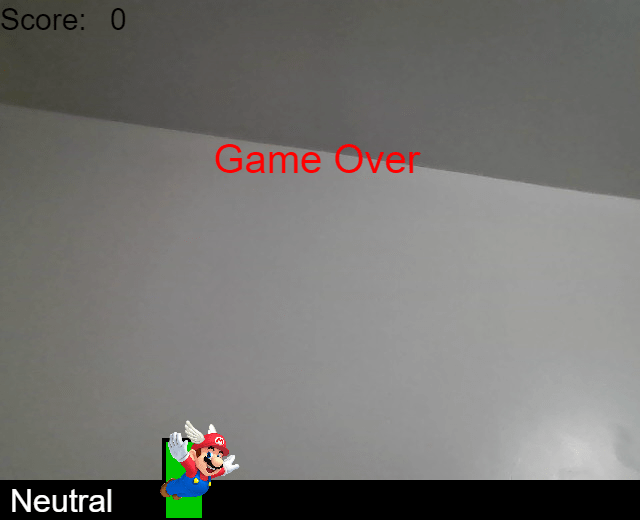

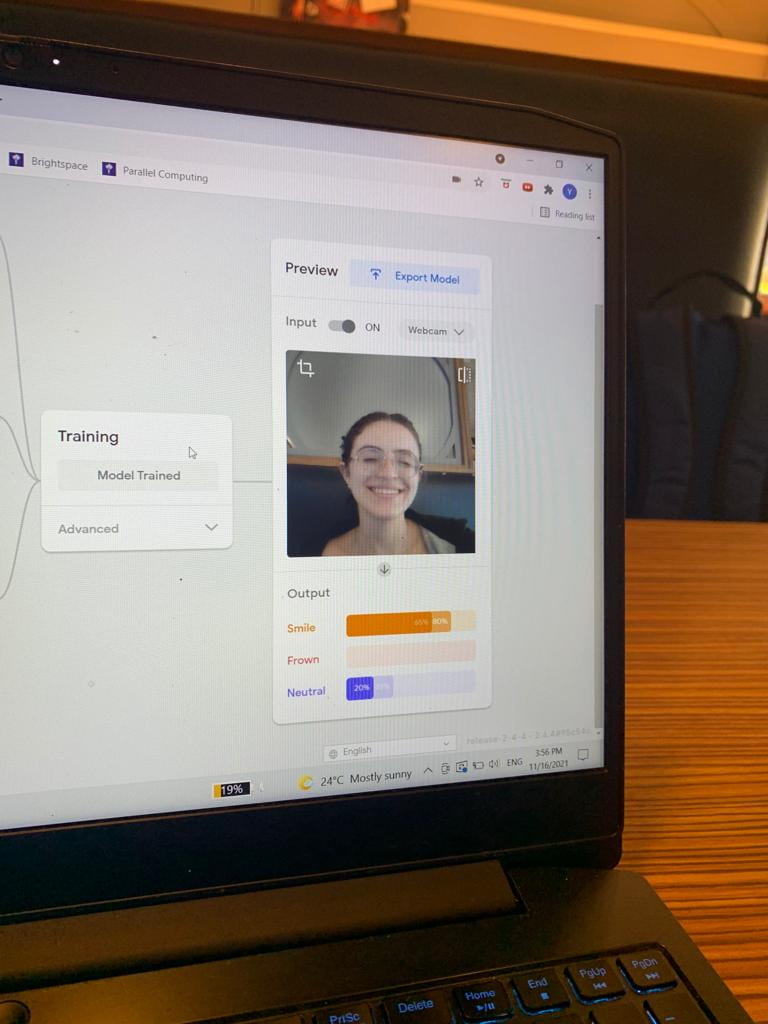

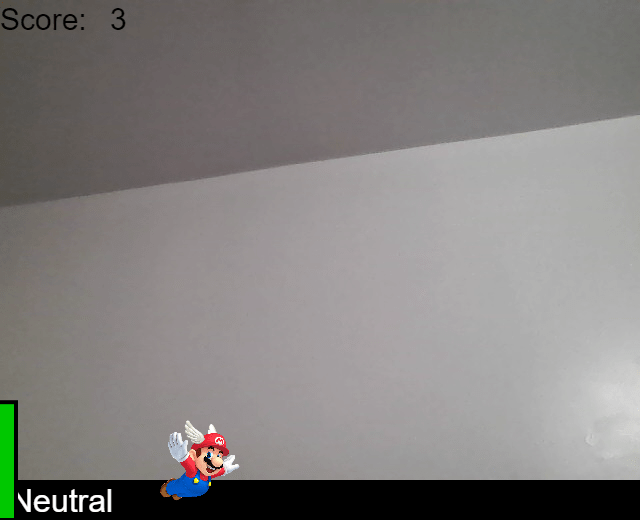

It is said that facial expressions play a role in our mood; forcing a smile is believed to actually improve one’s mood. I was very interested in the parallel between facial expressions and games in their effect on our moods. I chose to explore this by creating a simplified Super Mario game that can be controlled with facial expressions. I trained the Teachable Machine to recognize smiling and a neutral facial expression, recording samples at three distinct times of day, in different locations, and with different appearances. I had planned to include a frowning class as well, but the machine struggled to differentiate it from the neutral class.

Using techniques we learned about in previous classes, I used an image of the character Mario in the sketch in order to better emulate the game. When the user smiles, Mario flies higher, avoiding the obstacles. Each obstacle that gets successfully avoided increases the player’s score by 1.

When the user stops smiling, Mario descends downwards. If Mario bumps into an obstacle, the game ends and a message that says “Game Over” appears.
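The control scheme described in this post (smile to rise, otherwise descend, one point per avoided obstacle, “Game Over” on a collision) can be sketched as a per-frame update. This Python sketch is not the author's p5.js code: the class name "Smiling", the speeds, the canvas bounds, and the rectangular obstacle model are assumptions, and Mario is simplified to a single point.

```python
from dataclasses import dataclass
from typing import List

SMILE_LABEL = "Smiling"  # hypothetical class name for the smiling class
LIFT = 4.0               # assumed upward speed while smiling (pixels/frame)
FALL = 2.0               # assumed downward speed otherwise (pixels/frame)
FLOOR_Y = 380.0          # assumed lowest position; y grows downward as in p5.js


@dataclass
class Obstacle:
    x: float      # left edge; the caller moves obstacles left each frame
    width: float
    top: float    # vertical extent of the obstacle
    bottom: float
    passed: bool = False


@dataclass
class Game:
    mario_x: float = 50.0
    mario_y: float = 200.0
    score: int = 0
    over: bool = False

    def step(self, label: str, obstacles: List[Obstacle]) -> None:
        """Advance one frame using the latest facial-expression label."""
        if self.over:
            return
        # Smiling lifts Mario; any other expression lets him descend.
        if label == SMILE_LABEL:
            self.mario_y = max(0.0, self.mario_y - LIFT)
        else:
            self.mario_y = min(FLOOR_Y, self.mario_y + FALL)
        for ob in obstacles:
            hit_x = ob.x <= self.mario_x <= ob.x + ob.width
            hit_y = ob.top <= self.mario_y <= ob.bottom
            if hit_x and hit_y:
                self.over = True  # the caller then shows "Game Over"
                return
            if not ob.passed and ob.x + ob.width < self.mario_x:
                ob.passed = True
                self.score += 1  # one point per obstacle avoided
```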