My final project uses machine learning to create a posture corrector. It monitors your posture during work sessions or other extended periods of standing or sitting. It should be set up at the user's side. Depending on whether the person using it is slouching, the sketch interacts with the user and the computer in different ways. Time also plays a role in the sketch's outputs.

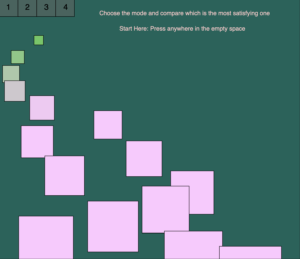

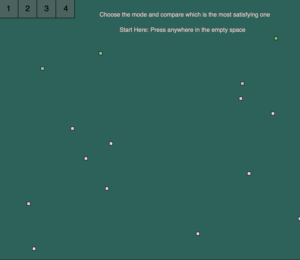

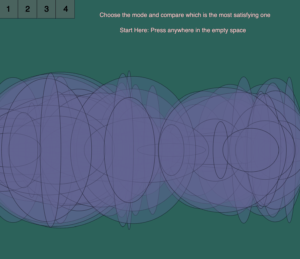

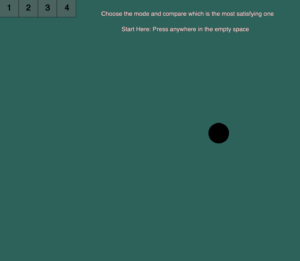

While correct posture is detected, a game runs on the screen. Every 10 consecutive seconds of good posture increases the user's high score by 1, and the ball is thrown into the hoop. If slouching or bad posture is detected, the game disappears and the high score resets to zero.
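The scoring rule can be sketched as a small timer that is updated every time the classifier reports a result. This is an illustration under assumptions, not the code from the actual p5.js sketch. The class `PostureScore`, its `update` method, and the `"good"`/`"slouch"` labels are hypothetical names standing in for whatever the trained model actually returns.

```typescript
// Hypothetical helper illustrating the "10 seconds of good posture = 1 point" rule.
// The labels "good" and "slouch" are assumptions, not the model's real class names.
type PostureLabel = "good" | "slouch";

class PostureScore {
  private score = 0;
  // Start time (ms) of the current 10-second window, or null after slouching.
  private windowStart: number | null = null;

  constructor(private readonly intervalMs = 10_000) {}

  // Call once per classification result. Returns true at the moment a point
  // is earned, which is when the ball-into-hoop animation would play.
  update(label: PostureLabel, nowMs: number): boolean {
    if (label === "slouch") {
      // Bad posture: the game is hidden and the score resets to zero.
      this.score = 0;
      this.windowStart = null;
      return false;
    }
    if (this.windowStart === null) {
      // First good-posture reading after a reset starts a new window.
      this.windowStart = nowMs;
      return false;
    }
    if (nowMs - this.windowStart >= this.intervalMs) {
      this.score += 1;
      // Continue counting from the end of the completed window.
      this.windowStart += this.intervalMs;
      return true;
    }
    return false;
  }

  get current(): number {
    return this.score;
  }
}
```

In a p5.js sketch, `nowMs` could come from p5's `millis()`, and the return value of `update` could trigger the shot animation. Keeping the timing logic separate from drawing makes it easy to check without a webcam.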

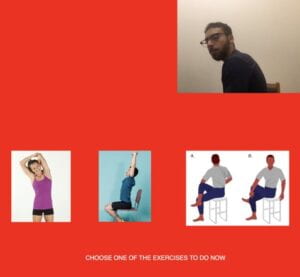

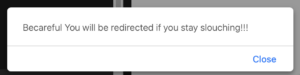

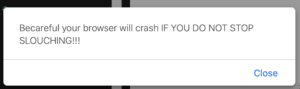

After a certain amount of time, an alert appears on the screen showing three stretches to do. If the user stays slouched for a certain amount of time, a new tab opens with an image that takes over the screen. If correct posture is still not detected after a long time, tabs keep opening repeatedly until the browser window crashes. The sketch can also detect when the user stretches, and it encourages them to hold the stretch longer.
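One way to picture this escalation is as a ladder driven by a count of consecutive slouching detections. The sketch below is hypothetical. The names `escalationFor`, `SlouchCounter`, and `Thresholds` are made up for illustration, and the threshold values are deliberately left as parameters because the original only says "a certain amount of time." It also assumes that a correct-posture detection resets the count, which the original does not state explicitly.

```typescript
// Hypothetical escalation ladder for the slouching behaviors described above.
// Threshold values are NOT taken from the original sketch; supply your own.
type Escalation = "none" | "stretchAlert" | "openTab" | "repeatTabs";

interface Thresholds {
  stretchAlert: number; // detections before the three-stretch alert appears
  openTab: number;      // detections before a tab with the takeover image opens
  repeatTabs: number;   // detections after which tabs keep opening
}

function escalationFor(slouchDetections: number, t: Thresholds): Escalation {
  // Check the most severe level first so the highest level reached wins.
  if (slouchDetections >= t.repeatTabs) return "repeatTabs";
  if (slouchDetections >= t.openTab) return "openTab";
  if (slouchDetections >= t.stretchAlert) return "stretchAlert";
  return "none";
}

class SlouchCounter {
  private count = 0;

  constructor(private readonly thresholds: Thresholds) {}

  // Call once per classifier result; returns the current escalation level.
  record(isSlouching: boolean): Escalation {
    // Assumption: any correct-posture detection resets the count to zero.
    this.count = isSlouching ? this.count + 1 : 0;
    return escalationFor(this.count, this.thresholds);
  }
}
```

In the browser, the `"openTab"` and `"repeatTabs"` levels would be where the sketch calls the standard DOM `window.open`. Returning a level instead of opening tabs directly keeps the thresholds testable outside the browser.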

Process sketches

Images:

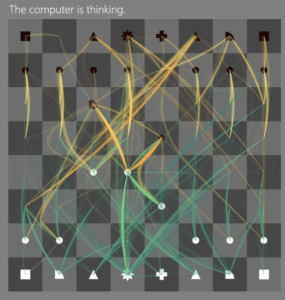

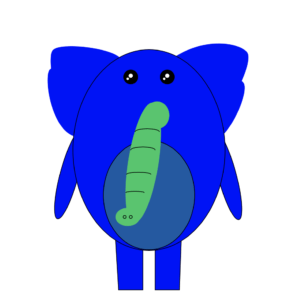

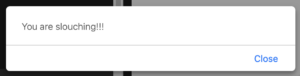

Output after 50 detections of slouching:

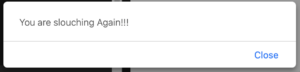

Output after 200 detections of slouching:

Output after 600 detections of slouching:

Output after 4500 detections of slouching:

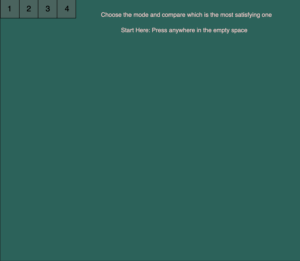

Sketch:

https://editor.p5js.org/zme209/sketches/GELPE9NpR

Voice recordings in case you need to test it:

Joy Buolamwini discusses algorithmic bias in her TED Talk. Facial recognition software generally works by being given a “training set” that tells the machine what is a face and what is not, which gives it the ability to detect faces. However, training sets are not always inclusive of all people. I especially liked how she framed this issue by emphasizing that more inclusive training sets can and must be made.