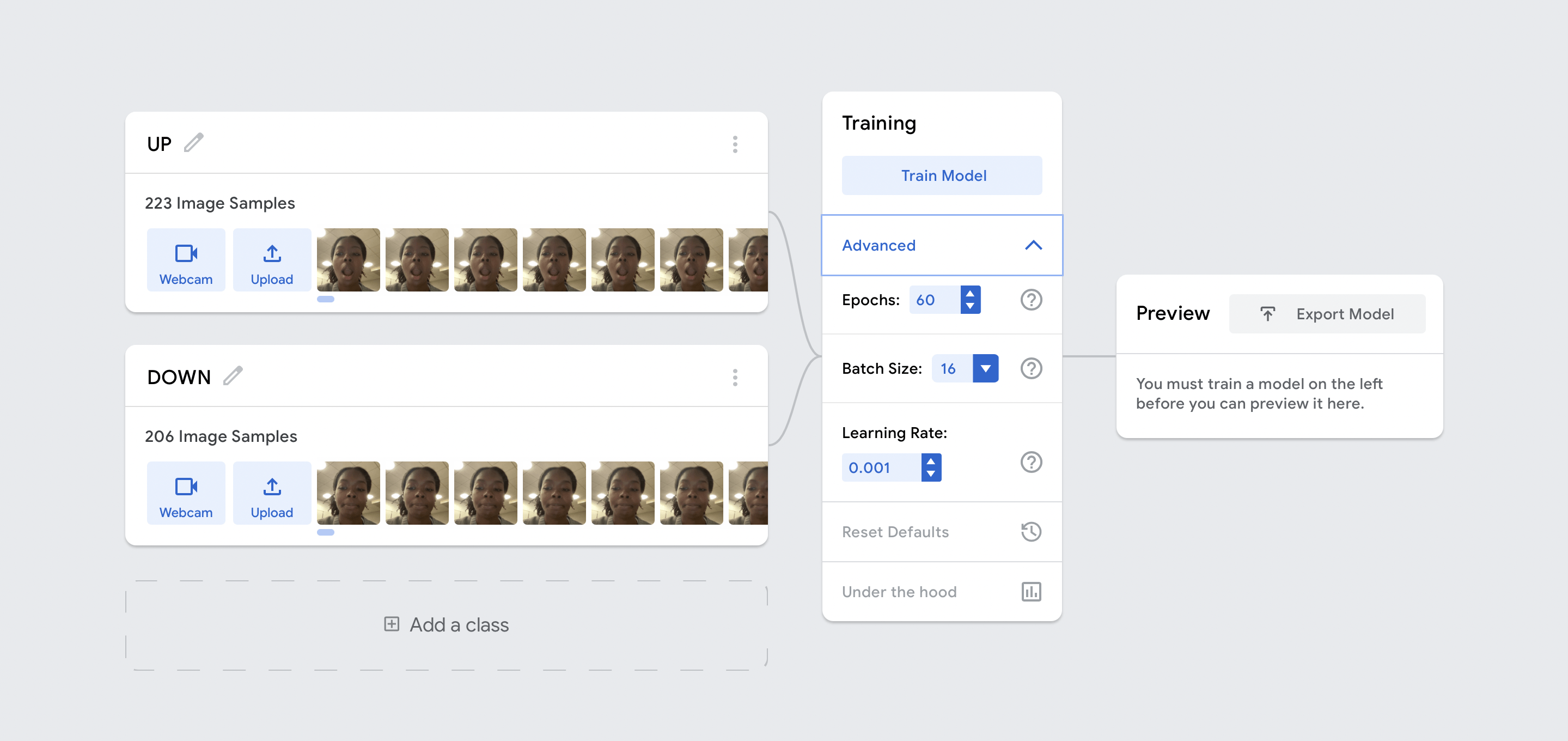

My “Classify This” project is a simple game in which the user controls a yellow dot, moving it up and down on the canvas by opening or closing their mouth. The aim is to keep the dot within the bounds of the canvas. I created an image model using Google’s Teachable Machine. For the model, I defined two classes of images: “UP” and “DOWN”. I then added about 200 images: my mouth open to various degrees for the “UP” class, and my mouth closed for the “DOWN” class. I also sourced stock images from Google of people making the same expressions to add some diversity to my data set. When training, I kept the default batch size and learning rate but increased the number of epochs from 50 to 60, meaning each sample was passed through the model 60 times. I found that this small tweak improved my model’s accuracy while keeping training time manageable.
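The control scheme above boils down to two rules: each predicted label nudges the dot one way, and the game ends when the dot leaves the canvas. The sketch below shows that logic in isolation. It assumes the classifier hands back one of the two class names, “UP” or “DOWN”, every frame; the names `Dot`, `stepDot`, `isOutOfBounds`, and the `SPEED` value are hypothetical and not taken from the project’s actual code.

```typescript
// Assumed: the model's two class names, as defined in Teachable Machine.
type Label = "UP" | "DOWN";

// Hypothetical dot state.
interface Dot {
  y: number;      // vertical centre in canvas pixels (0 = top edge)
  radius: number; // dot radius in pixels
}

const SPEED = 4; // assumed pixels moved per frame

// Move the dot one frame according to the latest prediction.
// Canvas y grows downward, so "UP" (mouth open) subtracts from y.
function stepDot(dot: Dot, label: Label): Dot {
  const dy = label === "UP" ? -SPEED : SPEED;
  return { ...dot, y: dot.y + dy };
}

// The game ends once any part of the dot crosses the top or bottom edge.
function isOutOfBounds(dot: Dot, canvasHeight: number): boolean {
  return dot.y - dot.radius < 0 || dot.y + dot.radius > canvasHeight;
}

// Usage: simulate three frames with a fixed sequence of predictions.
let dot: Dot = { y: 200, radius: 10 };
const predictions: Label[] = ["UP", "UP", "DOWN"];
for (const label of predictions) {
  dot = stepDot(dot, label);
}
console.log(dot.y); // 196
```

Treating “DOWN” (mouth closed) as a steady downward drift plays a role similar to gravity, so the player has to keep opening their mouth to stay aloft.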

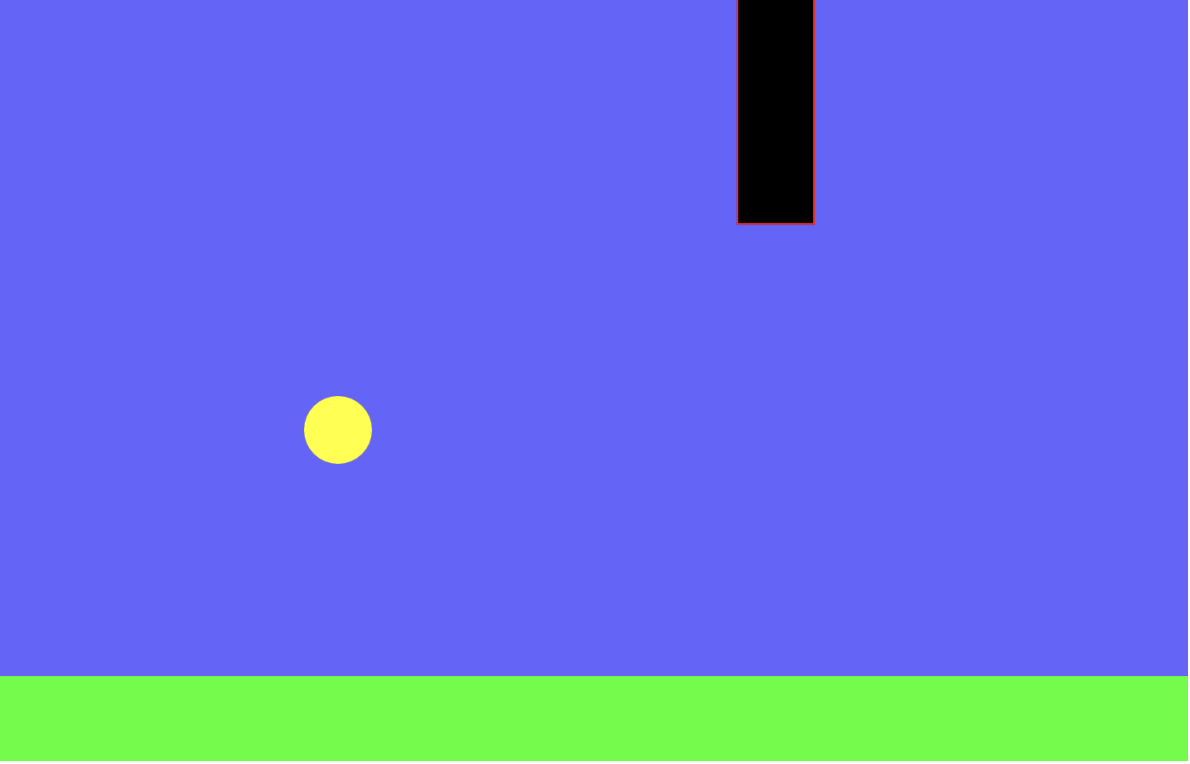

The program is reminiscent of the popular mobile indie game Flappy Bird, in which the user taps the screen to keep a bird aloft while it dodges obstacles that approach from the right side of the screen, both from above and from below. I wanted to create something similar, and I found it more enjoyable to control the dot with facial expressions.

One challenge I faced while creating this program was sizing the obstacles. The height of each incoming obstacle is supposed to vary, which makes the game harder because it ends as soon as the yellow dot touches a rectangle. However, instead of each new rectangle getting its own random height, the height kept changing rapidly while the rectangle was on screen.
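The original code is not shown, so the cause here is an assumption: a height that flickers while the obstacle is on screen is typically the result of generating the random value inside the per-frame draw loop, so a new height is picked every frame. A minimal sketch of one fix is to choose the height once, when the obstacle is created, and store it on the obstacle. All names and constants below (`Obstacle`, `spawnObstacle`, `updateObstacles`, the canvas size and height range) are hypothetical.

```typescript
// Hypothetical obstacle: the height is stored once at creation time.
interface Obstacle {
  x: number;      // left edge in canvas pixels
  width: number;
  height: number; // fixed for the obstacle's whole lifetime
}

const CANVAS_WIDTH = 400; // assumed canvas width
const MIN_HEIGHT = 50;    // assumed obstacle height range
const MAX_HEIGHT = 250;
const SCROLL_SPEED = 3;   // assumed pixels per frame

// Pick a random height ONCE, when the obstacle enters from the right.
function spawnObstacle(rng: () => number = Math.random): Obstacle {
  const height = MIN_HEIGHT + rng() * (MAX_HEIGHT - MIN_HEIGHT);
  return { x: CANVAS_WIDTH, width: 40, height };
}

// Called every frame: only x changes, and height is never re-randomized.
function updateObstacles(obstacles: Obstacle[]): Obstacle[] {
  return obstacles
    .map((o) => ({ ...o, x: o.x - SCROLL_SPEED }))
    .filter((o) => o.x + o.width > 0); // drop obstacles that have scrolled off
}

// Usage: the height stays the same across 60 frames of scrolling.
let obstacles: Obstacle[] = [spawnObstacle()];
const initialHeight = obstacles[0].height;
for (let frame = 0; frame < 60; frame++) {
  obstacles = updateObstacles(obstacles);
}
console.log(obstacles[0].height === initialHeight); // true
```

Passing the random source in as `rng` is a design choice that makes the spawn logic easy to test with a fixed value; in the game itself it would simply default to `Math.random`.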