Project Description

- Project Title: Unravel the Message

- Project Subtitle: A Visual and Sonic Exploration of Communication Dynamics

- Project Webpage Link: Unravel the Message.

- Creator: Qiya Huang

- Short Description: This project explores how messages can undergo transformation as they pass from one person to another. Through a combination of visual and auditory elements, I aim to create an engaging and thought-provoking experience. Feel free to browse the next three canvases.

- Abstract: Dive into the complex dynamics of how information evolves in “Unravel the Message,” a carefully programmed digital project. Inspired by the intriguing “Megaphone Game,” the project blends visual and auditory elements to demonstrate the metamorphosis of communication. Chaotic pixels triggered by mouth movements visually depict the distortions inherent in the transmission of information. Meanwhile, speech-to-text technology gives the audio a tangible dimension, providing a concrete representation of how information is transformed. Join the exploration in three canvases that give communication a visual form.

- Demos

Process: Design and Composition

Initial Concept:

The design process began with the question of how to visually represent the transformation of messages. Inspired by the Megaphone Game, I wanted mouth movements or speech to trigger changes in how objects move in the sketch, as a symbolic representation of the distortions in communication.

Control of Speech Sketch:

The initial control mechanism involved a red ball to experiment with user interaction.

Initial Concept

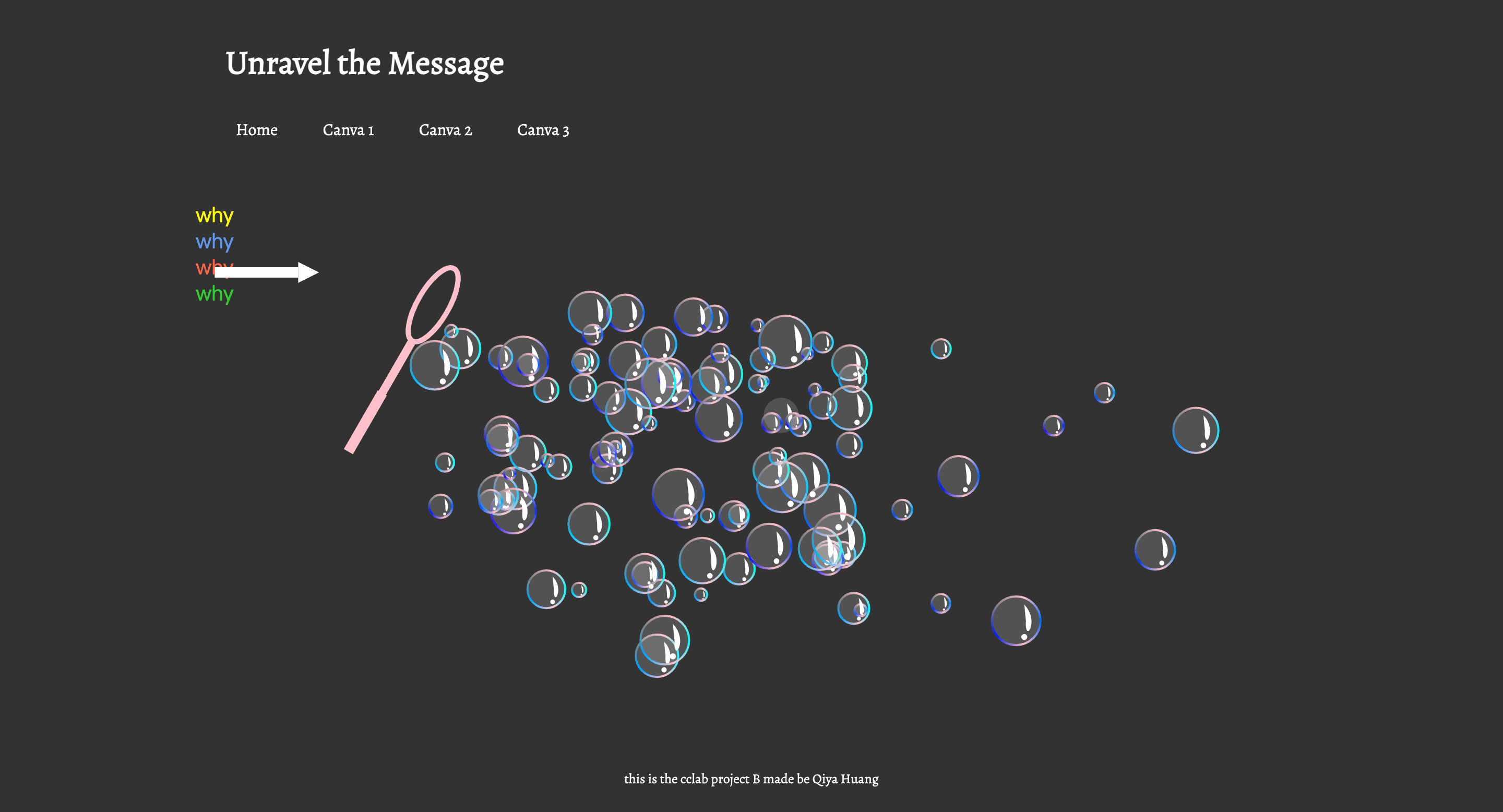

In the second iteration, to improve the aesthetics, I added the bubble setting. At the same time, I expanded the control mechanism beyond color to include directions and a “blow” feature to align with the bubble setting. This decision aimed to enhance both the visual appeal and user engagement, allowing users to have a more dynamic influence on the evolving visual elements.

Second Iteration

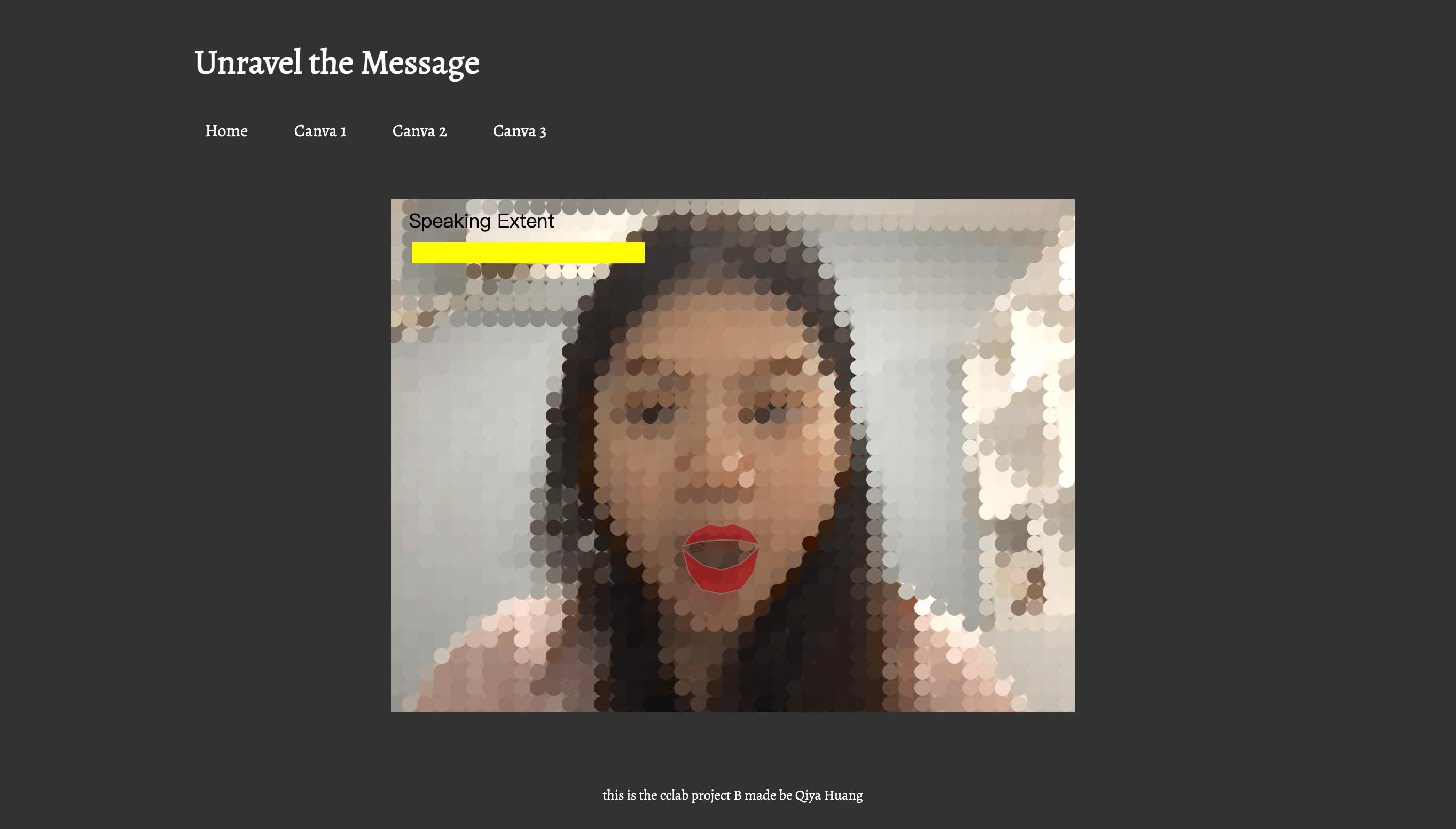

Showcase Mouth Detection and Pixel Chaos:

To show the impact of mouth movements on the canvas, I implemented mouth detection and designed the pixels to change size with variations in mouth width and height. This part aims to visually represent the distortions inherent in communication.

Mouth movements and pixel chaos

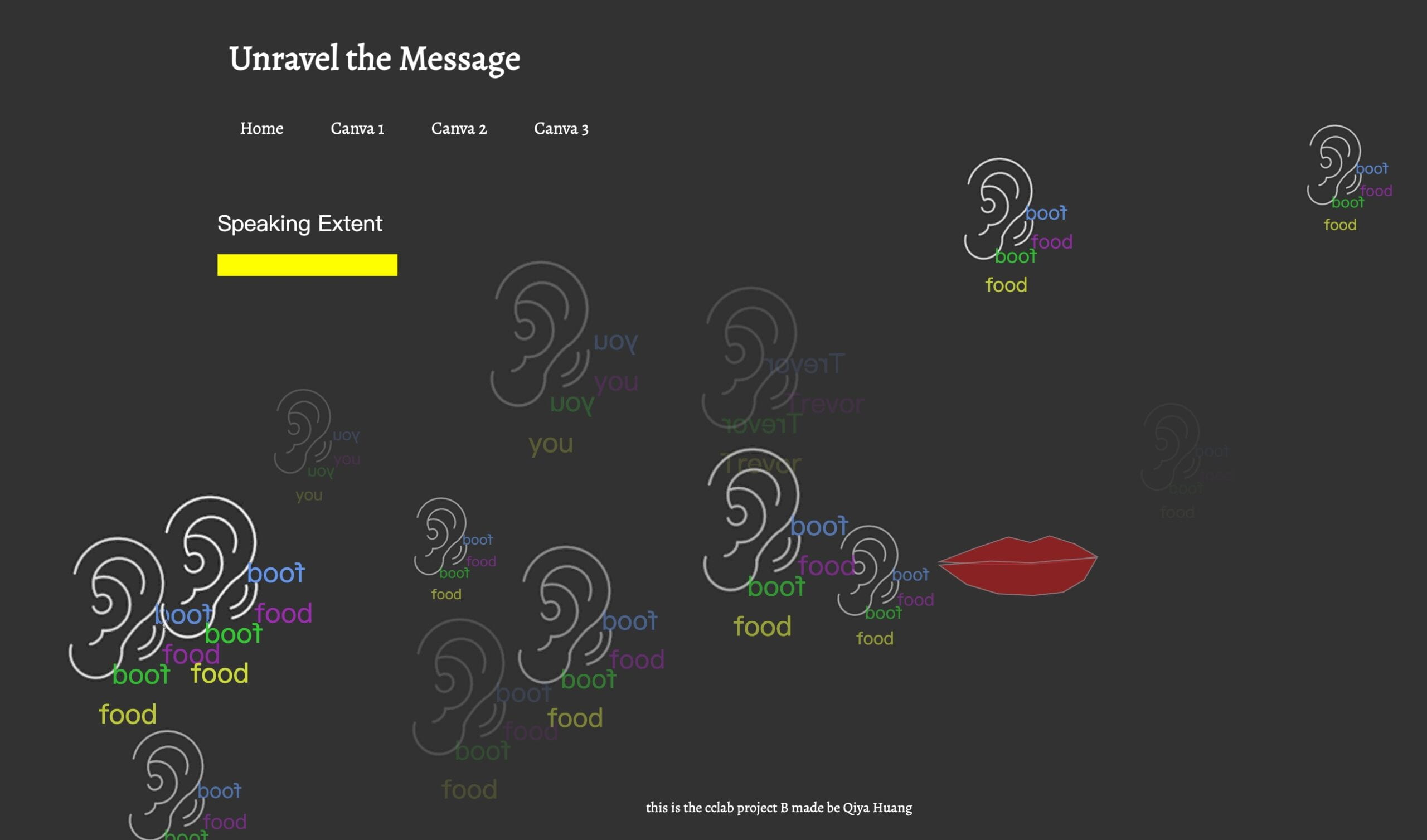

Integration of Mouth Movements and Speech:

To further integrate the impact of mouth movements and speech on the canvas, the third sketch combined ideas from the first two sketches. The most recent word detected was initially displayed in a fixed position at the top of the canvas, but feedback from Professor Marcela suggested exploring dynamic text placement for a more immersive experience. Therefore, I moved the most recent word closer to each ear image to show a deeper connection to the message being spread.

What Is Heard: Combination of Speech Recognition and Facial Recognition

Final Composition

The final composition involved refining the design based on feedback and testing. In addition, the website was structured to optimize sketch positioning, ensuring a seamless and intuitive user experience. The combination of chaotic pixels, dynamic text placement, and the expanded control mechanism contributed to a visually engaging and thought-provoking exploration of message transformation.

Process: Technical

Speech to Text

- Utilized the p5 library for speech-to-text functionality.

- Created a function, parseResult(), called on each word detection.

- Displayed the current result using text() and logged it to the console.

- Extracted the most recent word for further interaction.

```javascript
function parseResult() {
  // myRec.resultString is the current recording result
  text(myRec.resultString, 25, 25);
  console.log(myRec.resultString);

  // grab the most recent word (the word on the right side of the string)
  let wordArray = myRec.resultString.split(" ");
  mostRecentWord = wordArray[wordArray.length - 1];
}
```

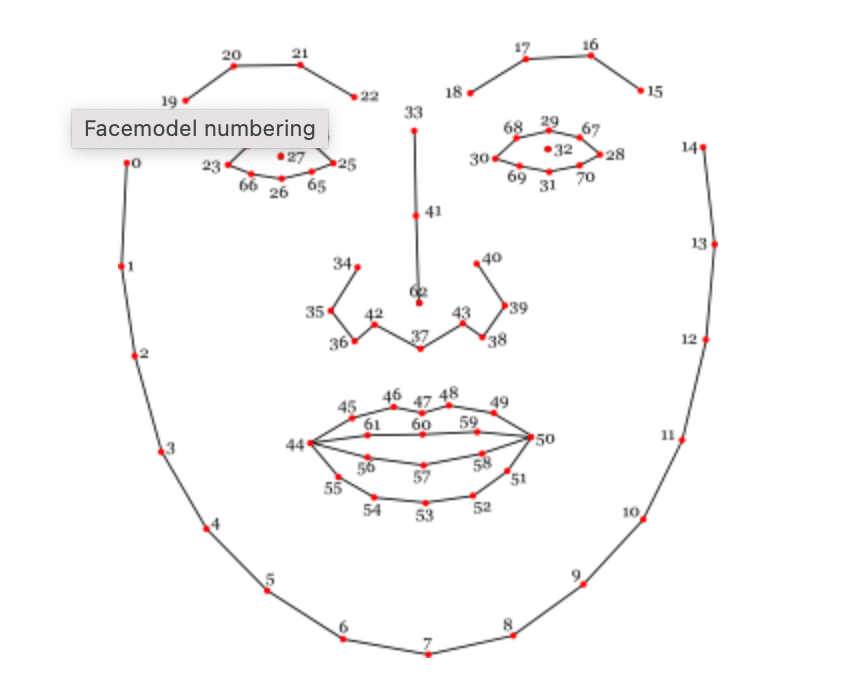
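One edge case in the word extraction is worth illustrating: splitting on a single space returns an empty string as the last element when the transcript ends with a space. The following is a minimal sketch of a more forgiving variant in plain JavaScript. The helper name `lastWord` is hypothetical and is not part of the project code or of the speech library.

```javascript
// Hypothetical helper (not from the project source): return the last
// whitespace-separated word of a transcript string.
function lastWord(resultString) {
  // Treat null/undefined as an empty transcript, then drop leading and
  // trailing whitespace so a trailing space does not yield "".
  const cleaned = String(resultString ?? "").trim();
  // Split on runs of whitespace rather than on a single space.
  const words = cleaned.split(/\s+/);
  // For an empty transcript this is [""] and the result is "".
  return words[words.length - 1];
}

console.log(lastWord("pass the message "));   // prints "message"
console.log("pass the message ".split(" ").pop() === ""); // prints true
```

Inside parseResult(), such a helper could stand in for the split(" ") lookup, assuming the rest of the sketch only needs the final word.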

Mouth Tracking

- Built on the mouth-tracking source code provided by the professor.

- Studied references to determine how to find the position of the mouth accurately.

Face model Numbering

- Here is how I use the face model numbering to display the mouth on the sketch:

```javascript
class Mouth {
  constructor(positions) {
    this.positions = positions;
    this.opened = false;
    this.defaultClosingValue = 8;
    this.mouthWidth = 0;
    this.mouthHeight = 0;
    this.distance = 0;
  }

  display() {
    stroke("rgba(141,117,122,0.75)");
    fill("rgba(164,28,28,0.75)");

    //upper lip
    beginShape();
    for (let i = 44; i <= 50; i++) {
      vertex(this.positions[i][0], this.positions[i][1] - 1);
    }
    vertex(this.positions[59][0], this.positions[59][1] + 1);
    vertex(this.positions[60][0], this.positions[60][1] + 1);
    vertex(this.positions[61][0], this.positions[61][1] + 1);
    endShape(CLOSE);

    //bottom lip
    fill("rgba(164,28,28,0.75)");
    beginShape();
    vertex(this.positions[44][0], this.positions[44][1]);
    vertex(this.positions[56][0], this.positions[56][1]);
    vertex(this.positions[57][0], this.positions[57][1]);
    vertex(this.positions[58][0], this.positions[58][1]);
    for (let i = 50; i <= 55; i++) {
      vertex(this.positions[i][0], this.positions[i][1] + 2);
    }
    endShape(CLOSE);
  }
}
```
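The class stores mouthWidth, mouthHeight, and opened, but the excerpt does not show how they are computed. One plausible way is sketched below in plain JavaScript. The function name `measureMouth` is hypothetical, and the landmark choices are assumptions inferred from display(): points 44 and 50 are where the lip outlines start and end (taken here as the mouth corners), and points 60 and 57 sit in the middle of the inner upper and inner lower lip runs. Comparing the height against the value 8 is likewise only a guess at how defaultClosingValue might be used.

```javascript
// Hypothetical helper (not from the project source).
// `positions` is an array of [x, y] pairs, one per tracked face landmark.
function measureMouth(positions, closingValue = 8) {
  // Euclidean distance between two [x, y] points.
  const dist = (a, b) => Math.hypot(a[0] - b[0], a[1] - b[1]);

  // Assumption: landmarks 44 and 50 are the left and right mouth corners.
  const mouthWidth = dist(positions[44], positions[50]);
  // Assumption: landmarks 60 and 57 are the centres of the inner lips.
  const mouthHeight = dist(positions[60], positions[57]);

  // Assumption: the mouth counts as open once the inner-lip gap
  // exceeds the closing threshold.
  return { mouthWidth, mouthHeight, opened: mouthHeight > closingValue };
}

// Usage with synthetic landmark data (71 points, all at the origin
// except the four the helper reads).
const demoPositions = Array.from({ length: 71 }, () => [0, 0]);
demoPositions[44] = [100, 200];
demoPositions[50] = [160, 200];
demoPositions[60] = [130, 195];
demoPositions[57] = [130, 210];
console.log(measureMouth(demoPositions));
// prints { mouthWidth: 60, mouthHeight: 15, opened: true }
```

The width and height returned here are the kind of values that a function such as pixelsChaos(mouthWidth, mouthHeight) could consume.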

Pixel Chaos

Adjusted pixel size based on mouth size, with help from Professor Marcela on optimization.

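```javascript
function pixelsChaos(mouthWidth, mouthHeight) {
  //gridSize = 20;
  // approximate mouth area from its width and height
  let mouthSize = mouthWidth * mouthHeight;
  //gridSize = int(map(mouthSize, 500, 1000, gridSize, 19));
  // map a mouth area of 500..1000 onto a grid size of 20..10 (bigger mouth, smaller cells)
  gridSize = int(map(mouthSize, 500, 1000, 20, 10));
  // keep the grid size within 10..20 when the area falls outside 500..1000
  gridSize = int(constrain(gridSize, 10, 20));
  //console.log(mouthSize, gridSize); //200-4000
}
```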
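To make the mapping concrete, here is a small worked version in plain JavaScript that runs without p5. It is a sketch only: `mapRange`, `clamp`, and `gridSizeForMouth` are hypothetical names, with `mapRange` written to mirror the linear formula behind p5's map() and `Math.trunc` standing in for int().

```javascript
// Linear re-mapping of `value` from [inMin, inMax] to [outMin, outMax].
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + (outMax - outMin) * ((value - inMin) / (inMax - inMin));
}

// Restrict `value` to the range [low, high].
function clamp(value, low, high) {
  return Math.min(Math.max(value, low), high);
}

// Hypothetical pure version of pixelsChaos(): returns the grid size
// instead of writing to a global variable.
function gridSizeForMouth(mouthWidth, mouthHeight) {
  const mouthSize = mouthWidth * mouthHeight;
  // Area 500 maps to grid size 20, area 1000 maps to grid size 10.
  const mapped = Math.trunc(mapRange(mouthSize, 500, 1000, 20, 10));
  // Areas outside 500..1000 would overshoot, so clamp to 10..20.
  return clamp(mapped, 10, 20);
}

console.log(gridSizeForMouth(25, 30)); // area 750, prints 15
console.log(gridSizeForMouth(10, 10)); // area 100, prints 20
console.log(gridSizeForMouth(50, 40)); // area 2000, prints 10
```

Because the output range runs from 20 down to 10, a wider-open mouth produces smaller grid cells, and any area below 500 or above 1000 is held at the nearest limit.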

Reflection and Future Development

Evolution from Proposal to Current Version:

The project has evolved considerably from the initial proposal, transitioning from conceptual ideas to a tangible exploration of message transformation. The Megaphone Game inspiration shaped the project’s interactive and dynamic nature. However, adjustments were necessary due to technological limitations and the need to align with the actual outcomes.

Strengths:

- Sketches 1 and 3 effectively convey the desired message transformation.

- Successful incorporation of speech-to-text and mouth tracking technologies.

- Integration of chaotic pixels visually represents communication distortions.

Future Developments:

- Continue refining and expanding Sketch 2 to achieve a more impactful visual representation.

- Consider the suggestion to incorporate elements beyond the visual and auditory. Adding more elements could enrich the expression of the message transformation concept.

- Experiment with different visual effects or techniques to enhance the overall visual experience. As the guest critics mentioned, there is potential for enhancing the CSS style of the webpage.

Overall Reflection:

This project has successfully explored the dynamics of message transformation, utilizing both visual and auditory elements. While certain aspects performed well, others still need adjustment and improvement. The feedback received from peers, instructors, and guest critics has been instrumental in refining my project, and future development will focus on further enhancing the user experience and expanding the expressive elements of the concept.

Credits and References

- Suggestions for improvements from Professor Marcela and classmates.

- Speech recognition: https://editor.p5js.org/re7l/sketches/Zj-Uya9Fd

- Face recognition: https://editor.p5js.org/kylemcdonald/sketches/BJOcyD9hm

- Mouth tracking references: https://www.auduno.com/clmtrackr/docs/reference.html

- Mini Project 6: Particles