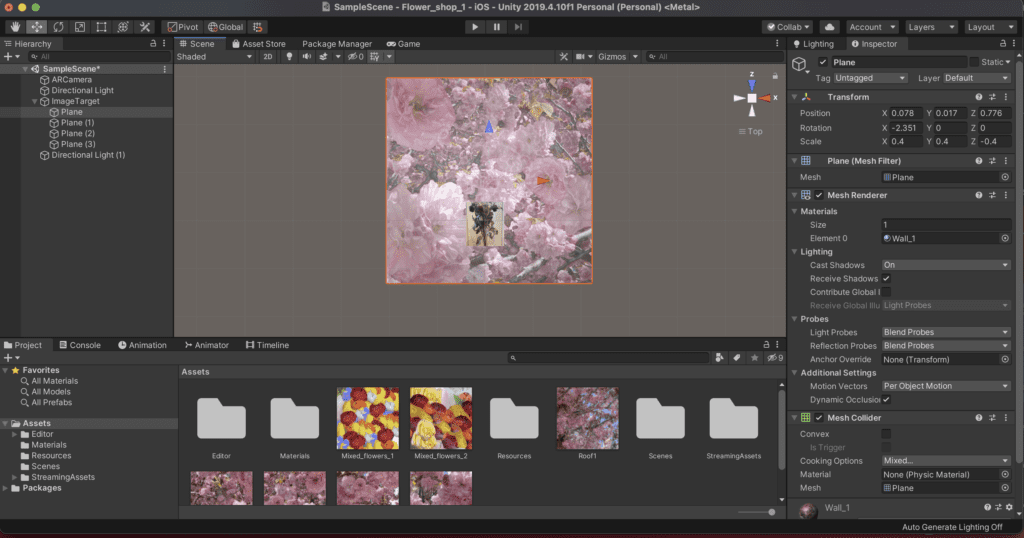

This week we were tasked with manipulating the color values of pixels in a sketch. I chose to work with a live video feed and an overlay.

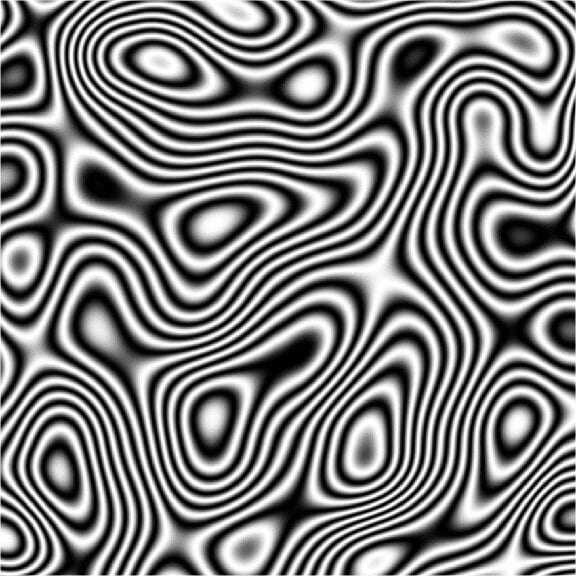

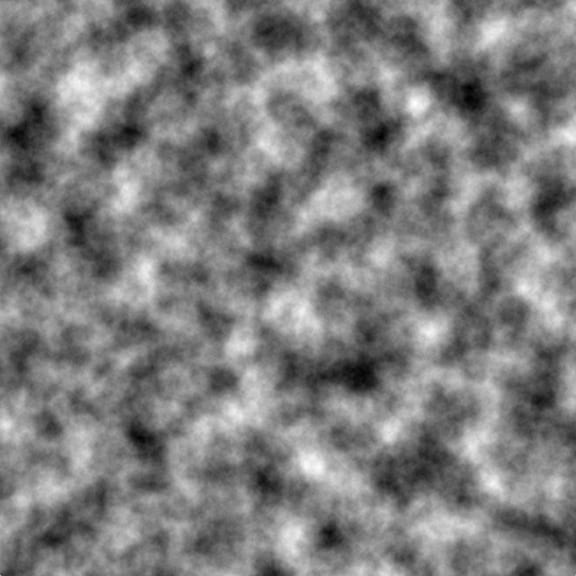

To achieve this, I made two sketches: first, the spiral background, and second, the inverted video feed with the banding.

For the video feed, I decided to invert only the red channel; the choice was purely aesthetic. To make the banding effect, I picked single-pixel-wide lines along the x- or y-axis and applied a different color manipulation to them (inverting blue rather than red). I stored the band positions in arrays so that I can move the bands quickly later.
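
The red inversion and the array-driven bands described above can be sketched in plain Java, outside Processing. This is a minimal illustration under assumptions, not the sketch's actual code: the class `BandInvert`, the method `apply`, and the parameters `bandCols` and `bandRows` are hypothetical names. It also differs from the posted sketch in one respect: each band pixel is read from its own location, whereas the posted loop writes the currently visited pixel's color into the band positions.

```java
import java.util.Arrays;

// Hypothetical helper (not part of the original sketch): inverts red everywhere,
// and inverts blue instead on the listed band columns and rows.
class BandInvert {

    static int[] apply(int[] src, int w, int h, int[] bandCols, int[] bandRows) {
        // Mark band positions once so the inner loop is a cheap lookup.
        boolean[] colIsBand = new boolean[w];
        boolean[] rowIsBand = new boolean[h];
        for (int c : bandCols) if (c >= 0 && c < w) colIsBand[c] = true;
        for (int r : bandRows) if (r >= 0 && r < h) rowIsBand[r] = true;

        int[] out = new int[src.length];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int p = src[x + y * w];
                int r = (p >> 16) & 0xFF;
                int g = (p >> 8) & 0xFF;
                int b = p & 0xFF;
                if (colIsBand[x] || rowIsBand[y]) {
                    b = 255 - b;   // band pixel: invert blue
                } else {
                    r = 255 - r;   // everywhere else: invert red
                }
                out[x + y * w] = 0xFF000000 | (r << 16) | (g << 8) | b;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        int[] frame = new int[3 * 2];          // tiny 3x2 stand-in for a video frame
        Arrays.fill(frame, 0xFF102030);
        int[] result = apply(frame, 3, 2, new int[] {1}, new int[] {});
        for (int p : result) System.out.println(Integer.toHexString(p));
    }
}
```

Because the band positions live in arrays, moving or animating a band only means changing a number in `bandCols` or `bandRows`; the pixel loop itself stays the same.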

PROBLEMS:

- Centering the video feed – Whenever I tried to translate, the video feed crashed.

- Layering the graphic below the video feed – To get a taste of what I was going for, I left the graphic on top but turned down its opacity.
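
One possible way to approach both problems is to do the positioning and the layering directly in the pixel array. The post does not say what caused the crash, so this is only a sketch under assumptions: `translate()` moves drawing calls such as `ellipse()` but does not shift writes into `pixels[]`, so the offset has to be added to the pixel index by hand, with a bounds check. The class `CenteredComposite` and its methods are hypothetical names, written in plain Java.

```java
// Hypothetical helper (not part of the original sketch): blends a smaller video
// frame over whatever is already in the window's pixel array, centered.
class CenteredComposite {

    // Mix one 0..255 channel: alpha 255 keeps only src, alpha 0 keeps only dst.
    static int blendChannel(int src, int dst, int alpha) {
        return (src * alpha + dst * (255 - alpha)) / 255;
    }

    static void composite(int[] video, int vw, int vh,
                          int[] window, int ww, int wh, int alpha) {
        int offX = (ww - vw) / 2;   // horizontal offset that centers the frame
        int offY = (wh - vh) / 2;   // vertical offset that centers the frame
        for (int y = 0; y < vh; y++) {
            int wy = y + offY;
            if (wy < 0 || wy >= wh) continue;       // skip rows outside the window
            for (int x = 0; x < vw; x++) {
                int wx = x + offX;
                if (wx < 0 || wx >= ww) continue;   // skip columns outside the window
                int s = video[x + y * vw];
                int d = window[wx + wy * ww];
                int r = blendChannel((s >> 16) & 0xFF, (d >> 16) & 0xFF, alpha);
                int g = blendChannel((s >> 8) & 0xFF, (d >> 8) & 0xFF, alpha);
                int b = blendChannel(s & 0xFF, d & 0xFF, alpha);
                window[wx + wy * ww] = 0xFF000000 | (r << 16) | (g << 8) | b;
            }
        }
    }

    public static void main(String[] args) {
        // With the sizes used in this post (880x720 window, 640x480 capture):
        System.out.println((880 - 640) / 2 + ", " + (720 - 480) / 2);
    }
}
```

In a Processing sketch, the same idea would mean drawing the spiral first, calling `loadPixels()`, compositing the video into `pixels[]` with an alpha below 255, and then calling `updatePixels()`, so the graphic shows through from underneath the video.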

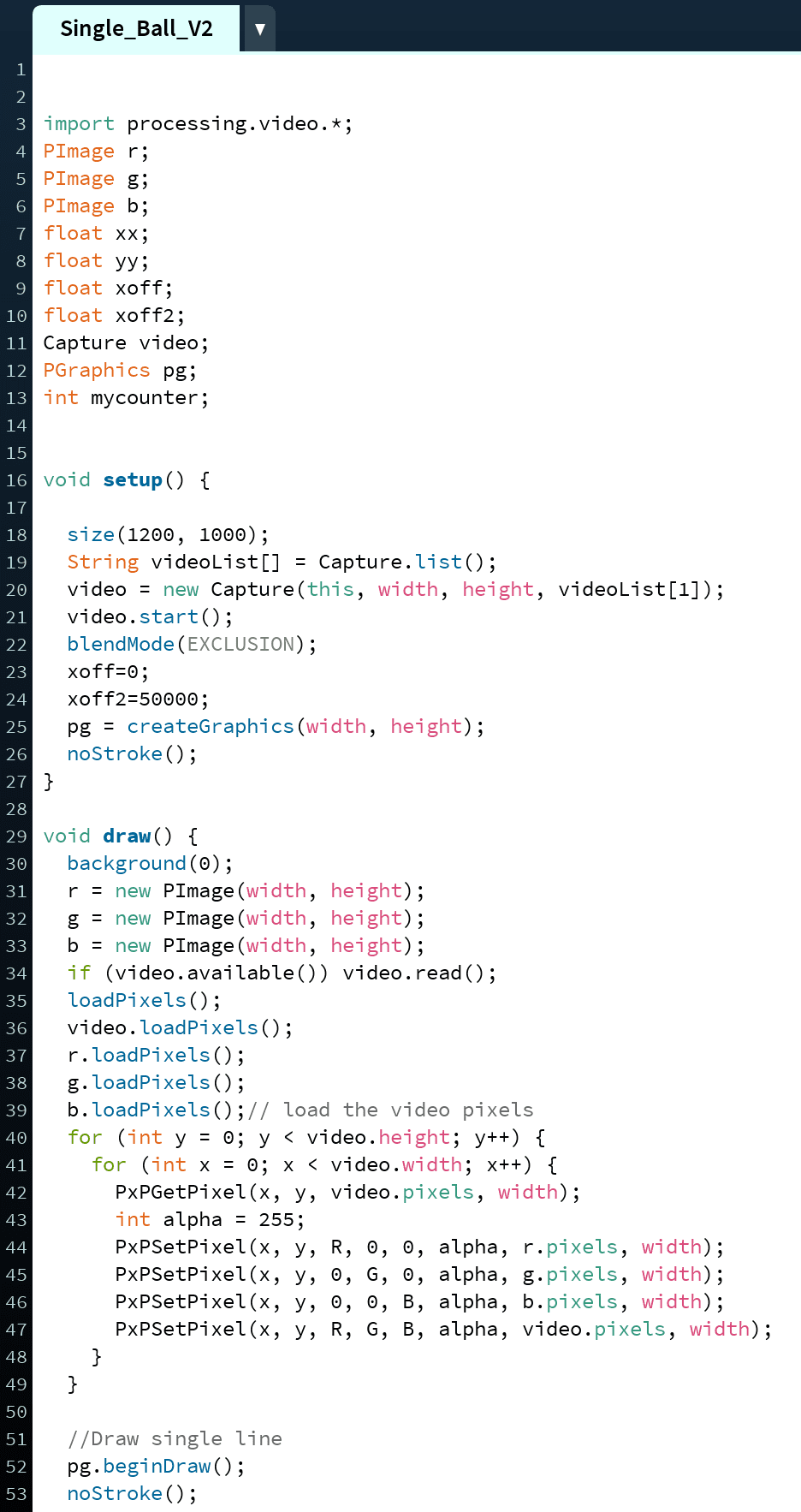

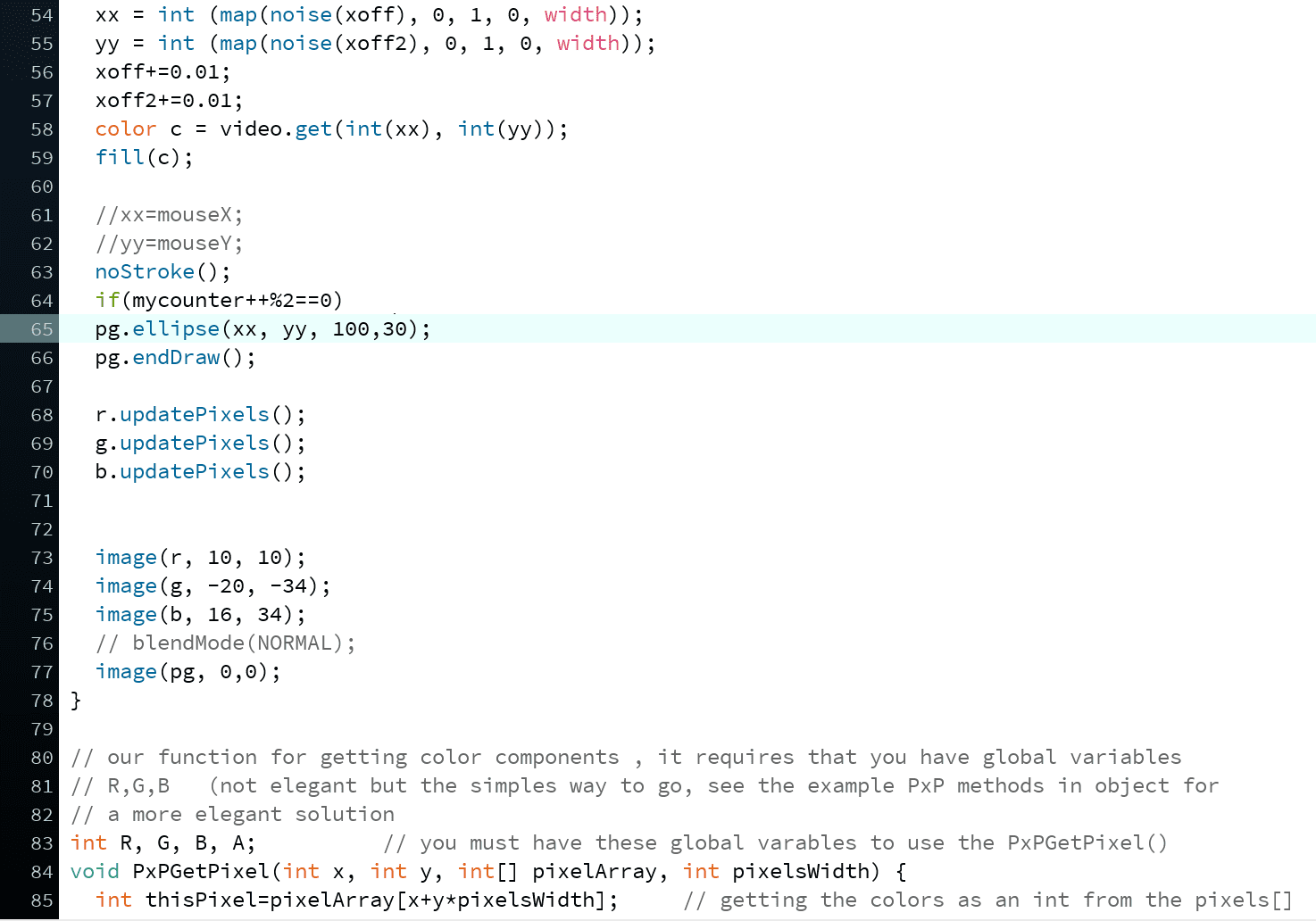

Code:

// The world pixel by pixel 2021

// Daniel Rozin

// uses PxP methods at the bottom

import processing.video.*;

Capture ourVideo; // variable to hold the video

int diaMin = 10;

int diaMax = 1000;

int diaStep = 10;

float a, b, move;

void setup() {

size(880, 720);

frameRate(120);

ourVideo = new Capture(this, 640, 480); // open the default camera at 640x480 (smaller than the 880x720 window)

ourVideo.start(); // start the video

noFill();

stroke(55,3,34,40);

strokeWeight(diaStep/4);

}

void draw() {

if (ourVideo.available()) ourVideo.read(); // get a fresh frame of video as often as we can

background (25, 215, 55);

ourVideo.loadPixels(); // load the pixels array of the video

loadPixels(); // load the pixels array of the window

int [] l1 = {20, 60, 110, 140, 158, 210, 214, 340, 390, 415, 421, 591, 610, 630, 421, 455, 580, 631, 560};

int [] l2 = {23, 34, 111, 145, 153, 167, 180, 440, 443, 460, 230, 340, 212};

for (int x = 0; x<ourVideo.width; x++) {

for (int y = 0; y<ourVideo.height; y++) {

PxPGetPixel(x, y, ourVideo.pixels, ourVideo.width);

PxPSetPixel(x, y, 255-R, G, B, 255, pixels, width);

// vertical bands: invert blue along the first 18 columns listed in l1 (the last entry is unused)

for (int i = 0; i < 18; i++) {

PxPSetPixel(l1[i], y, R, G, 255-B, 255, pixels, width);

}

// horizontal bands: invert blue along every row listed in l2

for (int j = 0; j < l2.length; j++) {

PxPSetPixel(x, l2[j], R, G, 255-B, 255, pixels, width);

}

}

}

updatePixels(); // must call updatePixels once we're done messing with pixels[]

a = sin(radians(move+10))*450;

b = cos(radians(move))*450;

translate(width/2, height/2);

for (float dia=diaMin; dia<=diaMax; dia+=diaStep) {

ellipse(-a, b, dia, dia);

ellipse(-a, -b, dia, dia);

ellipse(a, -b, dia, dia);

ellipse(a, b, dia, dia);

}

move++;

}

// our function for getting color components; it requires that you have the global variables

// R, G, B, A (not elegant, but the simplest way to go; see the example "PxP methods in object" for

// a more elegant solution)

int R, G, B, A; // you must have these global variables to use PxPGetPixel()

void PxPGetPixel(int x, int y, int[] pixelArray, int pixelsWidth) {

int thisPixel=pixelArray[x+y*pixelsWidth]; // getting the colors as an int from the pixels[]

A = (thisPixel >> 24) & 0xFF; // we need to shift and mask to get each component alone

R = (thisPixel >> 16) & 0xFF; // this is faster than calling red(), green() , blue()

G = (thisPixel >> 8) & 0xFF;

B = thisPixel & 0xFF;

}

// our function for setting color components RGB into the pixels[]; we need to define the XY of where

// to set the pixel, the RGB values we want, and the pixels[] array we want to use and its width

void PxPSetPixel(int x, int y, int r, int g, int b, int a, int[] pixelArray, int pixelsWidth) {

a =(a << 24);

r = r << 16; // we are packing all 4 components into one int

g = g << 8; // so we need to shift them to their places

color argb = a | r | g | b; // binary "or" operation combines them all into one int

pixelArray[x+y*pixelsWidth]= argb; // finally we set the int with the colors into the pixels[]

}
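
The shift-and-mask steps used by PxPGetPixel() and PxPSetPixel() can be checked in isolation with a small round trip. The following is a plain-Java sketch for illustration; the class `ArgbPacking` and its methods `pack` and `unpack` are hypothetical names, not part of the PxP methods.

```java
// Hypothetical helper (not part of the original sketch): packs four 0..255
// components into one ARGB int and pulls them back out again.
class ArgbPacking {

    static int pack(int a, int r, int g, int b) {
        // Mask each component to 8 bits, then shift it into its byte position.
        return ((a & 0xFF) << 24) | ((r & 0xFF) << 16) | ((g & 0xFF) << 8) | (b & 0xFF);
    }

    static int[] unpack(int argb) {
        // Shift the wanted byte down to the lowest position, then mask off the rest.
        return new int[] {
            (argb >> 24) & 0xFF,   // alpha
            (argb >> 16) & 0xFF,   // red
            (argb >> 8) & 0xFF,    // green
            argb & 0xFF            // blue
        };
    }

    public static void main(String[] args) {
        int packed = pack(255, 200, 100, 50);
        System.out.println(Integer.toHexString(packed));   // prints ffc86432
        int[] c = unpack(packed);
        // Inverting only the red channel, as the video feed in this post does:
        int invertedRed = pack(c[0], 255 - c[1], c[2], c[3]);
        System.out.println(Integer.toHexString(invertedRed));   // prints ff376432
    }
}
```

Masking with `& 0xFF` after the shift matters for the alpha byte in particular: `>>` is a signed shift in Java, so without the mask a fully opaque pixel would unpack to -1 instead of 255.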