Updates


Weekly Update 02/09/2024

The semester is off to a great start, with all our team members ready to pick up where we left off before the winter break. We are thrilled to have our newest member, Ayushi Shah, join our group. Our team and Ayushi are excited to embark on this journey together to develop cutting-edge and inclusive learning tools for Luv Michael employees.

To start, we tested our dynamic prototype; next, we will move on to acceptance/user testing with the users at Luv Michael. We reached out to Diane and were delighted by her enthusiasm to try out the prototype before we launch the acceptance/user testing phase.

We are also looking for AR development platforms more robust than the one we currently use (Lens Studio). All team members are exploring, researching, and learning about other AR development engines such as Unreal and Unity. We also looked into some MOOCs to learn more about these engines and find potential software to base our AR project on.

We were looking for free options, but we eventually discovered a Coursera course on AR fundamentals with Unity that is well worth the cost. Following this discovery, we came across a number of YouTube tutorials that taught us about specific AR code and implementation. Every video provided excellent knowledge and practical experience, ranging from understanding marker-based tracking to studying 3D model integration. With this additional knowledge, we can’t wait to go deeper into Unity’s features and potentially use this software for our AR game. We plan to meet next week to discuss our findings in detail and choose one platform for future work.

Weekly Update 9 – 12/12/23 & Weekly Update 8 – 12/5/23:

Over the last two weeks, we focused mainly on designing the AR experience and building an initial prototype. We plan to have a Luv Michael avatar that guides users through the storytelling process and the on-screen questions. We built a prototype with two questions on handwashing, each offering the user two options to choose from. We incorporated text, images, and audio to make the information more accessible and easier to understand. The images show equipment from the Luv Michael kitchen, so that familiarity helps people relate what they’re learning to what they do in the kitchen. As a next step, we plan to replace the images with 3D scans of the objects and equipment.

Ideally, a scenario would play out between the two questions: the user would see an object on the floor (rendered in AR) and be prompted on screen to pick it up. The second question in our prototype will appear on screen after this AR scenario, which we have not built yet.

As next steps, we plan to conduct a round of user testing with what we have so far, make changes based on the feedback, and then build all the scenarios.

Weekly Update 7: 11/28/23

It has been an eventful week for us as we worked on developing prototypes based on the observations and environmental analysis conducted with Luv Michael’s employees. Our team, Learners United, had a virtual meeting to discuss the timeline for prototype development. During the meeting, we brainstormed creative ideas for the scenarios and layout of the AR application. Our ideas ranged from simple modifications of the AR basketball game for the ServSafe curriculum to more advanced modifications with QR codes. We also discussed introducing interactive avatars through the AR application in the Luv Michael workspace to make learning more engaging and interactive. Together, we revised the timeline for reaching our goal. The following is a snapshot of our timeline:

| Tasks planned to reach the final application | Completion date |
| --- | --- |
| User flow | 11/28 |
| Scenario planning: text scripts, the interactions on each screen (storyboarding), and the physical spaces we expect to include in the interactions | 12/01 |
| Low-fidelity prototype | 12/02 |
| 3D models and animations (Luv Michael avatar): research, basic animations, and a 3D scanning tool | 12/02 |
| AR building -> swiping (Lens Studio 5.0.1 Beta): tutorials and basic interactions | 12/01 |

Weekly Update 6: 11/21/23

Usability testing summary, documented by Cathy.

Technology Usage and Preferences:

- Participants: 7 people, 5 using iPhones and 3 using Android devices.

- There’s a general issue with people being uncomfortable using their phones for certain tasks.

Website and App Interaction:

- An independent website is available for downloads.

- Users are comfortable with both sliding and tapping interactions.

- There’s good engagement with virtual objects, but some negative reactions were observed.

Content and Learning Tools:

- The hygiene chapter in AR has been completed, and review materials are available.

- There’s a suggestion for paired associations and partnerships, as well as environmental analysis.

- Boom Learning and iPads are considered useful tools.

- Certain sections previously not allowed for food are now deemed acceptable.

User Experience and Feedback:

- The necessity for more imagery and fewer words was emphasized.

- Alex, who is not very verbal and deals with emotional issues, participated in activities like playing basketball, pointing to objects, and tapping on items like exit signs and windows.

- The relevance and association of some tasks were questioned, indicating a need for clearer task-object associations.

- Both receptive and expressive language issues were noted, with a focus on simple, point-and-click interactions. Audio components were deemed feasible.

Instructions and Scoring:

- Participants are encouraged to obey and scan without worry, as there are no wrong answers.

- Ari showed positive responses to environmental scanning and activities like shooting a basketball.

- A range of 10-15 items was suggested for activities, with an emphasis on encouragement and no scoring system.

Weekly Update 4: 11/7/23 & Weekly Update 5: 11/14/23

Documented by Sreya

This week marked a crucial phase for our team as we embarked on the evaluation of augmented reality (AR) applications. Our objective was to meet with Diane to conduct practical tests and gather insights that would inform the development of our own AR application, specifically designed for educational purposes.

Collaborating with Tej, we systematically tested and devised scenarios for each application, opting to perform a task analysis during our scheduled visit to Luv Michael on Wednesday. In the preceding week, I carefully identified three lessons from chapter 1 of the ServSafe book, laying the groundwork for our AR application’s educational content. Simultaneously, Tej focused on refining the specifics of each scenario.

Our chosen approach centers around the use of the first AR app, Chalk. In this application, users scan their surroundings, either drawing or tapping, prompting the app to label the action with a numerical order. Our test scenario involves an inquiry about the availability of handwashing materials. Participants can demonstrate their knowledge through screen taps or by arranging materials in the order they would use them, from initial steps to the final ones. Flexibility is a key consideration, allowing for adjustments based on individual learning needs.

Our main goal is to assess how participants react to the application, specifically whether they find it overwhelming given the physical limits of the visual elements. We also want to measure our target audience’s engagement with this content.

Looking ahead, participants will be presented with a choice between playing an AR basketball game or using an AR bouncy ball app. This will enable us to evaluate participants’ ability to maintain a steady camera position or their preference for tapping and swiping on their phones. By tailoring questions to the ServSafe chapter, we aim to integrate educational elements seamlessly into our AR application. For example, participants will be prompted to throw the ball into the hoop or interact with the virtual ball in the app based on their understanding of when to wash their hands.

Beyond app testing, our agenda includes an environmental analysis during our Luv Michael visit. This analysis encompasses factors such as the lighting conditions in the Luv Michael kitchen, noise levels within the building, and the overall street view. The intention is to ensure our digital application aligns seamlessly with the physical environment.

Mindful of time constraints, we have streamlined our testing process to under 20 minutes, respecting the schedules of Diane and the Luv Michael staff. To facilitate a smooth collaboration, we communicated our plans to Diane in advance, seeking her guidance on the best approach and participant selection for optimal feedback.

As we approach the conclusion of our testing phase, the next steps involve synthesizing the gathered feedback by Wednesday. This information will be diligently handed over to Cathy and Siri, marking the commencement of the development phase for our AR application. Simultaneously, Tej and I will finalize the specifics of the game, ensuring a seamless transition from testing to development. Stay tuned for updates as we progress in the realm of augmented reality, working towards creating an engaging and educational experience for our users.

Weekly Update 3: 10/31/23

After receiving feedback from last week’s presentation, we decided to spend another week in our Explore phase with the following tasks:

- Look into existing AR applications that involve teaching games for people with autism and/or hygiene practices. We will try to use some of these in the observational analysis we will do at Luv Michael next week.

- Build some lesson plans on the first chapter, Personal Hygiene, to share with Diane in next week’s meeting.

- Look into 3D scanning applications that we can use to scan the existing tools in the Luv Michael kitchen for the AR exercises. As suggested by Diane, this ensures the objects employees see in the AR app are the familiar ones they see in real life, which helps them relate the exercises to their work.

After completing our research in the Explore phase, we plan to test existing AR apps with the Luv Michael employees to understand the challenges and to see what works. In the same visit, we plan to gather information on device types and other software details to figure out which requirements are met and which are not.

These are the results of our research from the Explore phase:

- Analysis on AR apps:

| AR App | Purpose | Notes | Ratings | Top 3 lessons in ServSafe | Sections |
| --- | --- | --- | --- | --- | --- |
| Ava’s Tangle | This AR app is designed to help children with autism improve their fine motor skills, hand-eye coordination, and spatial awareness. Users can interact with virtual objects in a 3D space to develop these skills. | | 1 | Your role in keeping food safe | Good hygiene, controlling time and temperature of food, preventing cross-contamination |
| Auminence | Auminence uses AR to create a virtual world that helps individuals with autism develop social and communication skills. It offers scenarios and characters for users to interact with and practice various social situations. | | 2 | When to wash hands | Using the restroom; handling raw meat, poultry, or seafood (before and after); taking out garbage; touching your hair, face, and body; touching clothes; sneezing or using a tissue; handling chemicals; clearing tables; handling money; before putting on gloves at the start of a new task; handling service animals; smoking, eating, or drinking; chewing gum and tobacco; leaving kitchen prep; touching anything else. |
| HoloTour | HoloTour by Microsoft offers virtual travel experiences. It can be used to introduce individuals with autism to new environments in a controlled and interactive way, helping with sensory regulation. | Not specifically for autism | 3 | What to wear | Hair covering, clothing, aprons, jewelry. |
| Look at Me | Look at Me is an app designed to improve the social skills of children with autism. It uses AR to create interactive games and scenarios for users to practice eye contact, emotion recognition, and facial expressions. | | | | |

Next steps for the explore phase and lesson plans:

| Method | Description | Task |
| --- | --- | --- |
| Observational research | Develop an understanding of your user’s life: what tasks they perform, why they perform these tasks, and how they perform these tasks. Focus on order of tasks and conditionals. | Make a report on Google Docs |
| Hierarchical Task Analysis (HTA) | Pick a task and create an HTA about the process | Create HTA |
| Environment Analysis | What impact will the environment have on user interaction? | |

Weekly Update 2: 10/24/23 (Competitive Analysis)

| Jigspace | Quiver | Blippar |
| --- | --- | --- |
| Strengths: | Strengths: | Strengths: |
| Weaknesses: | Weaknesses: | Weaknesses: |
| Opportunities: | Opportunities: | Opportunities: |

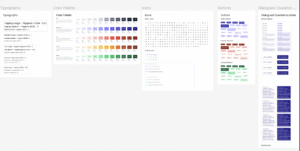

Weekly Update 1: 10/17/23 (Goals)

- Team name: After our initial meeting with the client, our team got together to brainstorm a name for our group. We searched online for different options and considered a few ideas such as “Learning Together”. Eventually, we unanimously agreed on “Learners United” as our final choice. This name represents our commitment to inclusive learning that caters to everyone’s needs and preferences.

- Schedule: Our team convenes twice a week to brainstorm, share ideas, and tackle challenges. These regular meetings have been pivotal in helping us find creative and actionable solutions. In addition to these scheduled meetings, we also meet after every in-person visit to Luv Michael to provide updates and keep each other informed.

- About the project: Learners United has partnered with Luv Michael to create a customized ServSafe curriculum that promotes safe kitchen practices for employees, who are adults with autism. The curriculum will address the unique learning needs of this neurodiverse population through interactive and engaging content.

Augmented reality (AR) applications are known to enhance well-being, social skills, and learning in individuals with autism. We plan to leverage the benefits of AR and adapt the ServSafe curriculum into situation-based games using AR. This will provide an interactive way for adult learners with autism to practice and reinforce their knowledge of safe kitchen practices.

- Team expertise & responsibilities: Our team comprises skilled students with diverse backgrounds in IT, user experience and interface research and design, digital marketing, and physical therapy. Additionally, our team members have expertise in Augmented Reality (AR) design. We plan to use Lens Studio for our AR prototype development.

Sreya leads team communication and lesson design. Cathy, Siri, and Tej are the product developers of our team.

Timeline:

| Item | Date |
| --- | --- |
| First brainstorming session of the group | 9/25/2023 |
| First virtual session with Diane | 9/29/2023 |
| Luv Michael in-person visit | 10/11/2023 |
| Luv Michael second in-person visit | 10/17/2023 |
| Finalizing the game ideas for priority lessons of the ServSafe curriculum & AR prototype/3D scan | 10/25/2023-11/08/2023 |
| Integrating game idea with AR prototype | 11/08/2023-11/22/2023 |
| Prototype testing with client | 11/22/2023-11/29/2023 |
| Iteration & refinement | 11/29/2023-12/11/2023 |
| Project final presentation | 12/12/2023 |