glitch / خلل

With an interest in glitches and malfunctions in technology, I seek to explore how “glitch” manifests in the natural world and in our memories. Thinking of my grandfather’s traumatic experiences at sea, I attempt to explore the way he feels when he looks at the view of the sea from his living room window. How does he feel when he looks out towards this inescapable feature of his surroundings?

Concept Process

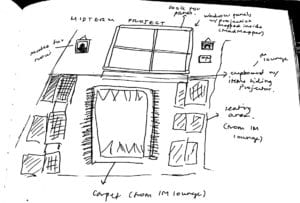

For my midterm project, I went through a long process of trial and error and worked through a few different concepts. I was interested in exploring the idea of glitch, but my first proposal was difficult to implement: it would have been hard to acquire the latex fabric I needed, and I was still thinking about glitches, and what I find interesting about them, in a very broad way. After realizing that I had to rework the idea, I decided to create live video with glitch effects. With no underlying rationale to support it, though, the project felt like it would just be a fun little effects mirror with no conceptual reasoning behind it. I must admit that I approached the project from a very one-sided point of view and stuck with one focal idea without really looking beyond it. I was afraid that I was running out of time without making significant progress, and I worried too much about whether I would be able to tackle the technical aspects. Although the technical process was difficult, I should have been more thorough during ideation, as that would have made my goals clear from the beginning. This is something I hope to do differently in my final project.

For the space, I initially wanted to have physical window panes and to map the projection so that it would appear between the four squares of the window. However, I couldn’t find a good way of executing this, and the window panes I initially tried to use (made of white acrylic) didn’t achieve the look I was going for. So instead, I overlaid a PNG image of a window pane onto the video.

Technical Process

Once I had formed this final idea and consulted Aaron about the next steps, I decided to use openFrameworks to play a regular video of the ocean and add a glitch effect to it as the interactor moved closer to the Kinect. I hoped to have a gradual effect on one video rather than an abrupt transition between two different videos. I used an add-on called ofxPostGlitch, which applies glitch effects to an FBO (frame buffer object). It worked well with the live RGB video from my Kinect, but would not apply to the video of the ocean that I was planning to use, despite all my efforts to track down issues in the code itself and in the video’s properties (format, length, size, etc.). The shader did eventually work on the video, but only when it was stuck on one frame and not playing, so it effectively treated the video as a still image. This issue was hard to tackle because the add-on felt very constraining to work with, and I didn’t know enough about how it worked to change its source code.

To attempt to solve this problem, I decided to use Processing instead. I created two different video files, one with a glitch effect and one without. I also created two different sound files, one to be played with each video. This solution worked, but I still feel like the OpenFrameworks shader would have created a much better transition between the two videos.
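A gradual transition might still be approximated in Processing by crossfading the two videos according to distance. The following is only a minimal sketch of that idea, not what the project does: `GlitchBlend`, `amount`, and `alpha` are hypothetical names, and the near and far depth values are assumed to be chosen by the author.

```java
// Hypothetical helper (not part of the project): maps the closest raw depth
// reading to a blend amount between the calm video and the glitch video.
final class GlitchBlend {
  private GlitchBlend() {}

  /**
   * Returns 0.0 when the viewer is at or beyond farDepth (calm video only),
   * 1.0 when at or inside nearDepth (glitch video only), and a linear
   * value in between.
   */
  static float amount(float closest, float nearDepth, float farDepth) {
    if (farDepth <= nearDepth) {
      throw new IllegalArgumentException("farDepth must be greater than nearDepth");
    }
    float t = (farDepth - closest) / (farDepth - nearDepth);
    return Math.max(0f, Math.min(1f, t)); // clamp to the range 0..1
  }

  /** Converts a blend amount (0..1) into an alpha value (0..255). */
  static int alpha(float amount) {
    return Math.round(255f * amount);
  }
}
```

In a sketch, both videos would need to keep playing. The calm video could be drawn first and the glitch video drawn on top of it after a call such as `tint(255, GlitchBlend.alpha(a))`, followed by `noTint()`.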

I used the Minim library so that I could try more solutions for the sound, because the two audio files kept lagging and playing over each other. I set distance thresholds to control which video and sound file play at any given distance. I also limited the region of the depth image that the Kinect considers, so that it only registers (as white pixels) whoever walks into the space and stands directly in front of the projected video, disregarding all other objects and movements.
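The threshold logic can be pictured as a small state machine with two thresholds, so that readings hovering near the boundary do not flip the video and sound back and forth. This is a hedged sketch under my own assumptions rather than the project’s actual code: `ZoneTracker`, `Zone`, and `update` are hypothetical names.

```java
// Hypothetical helper (not part of the project): tracks whether the viewer
// is in the "calm" or "glitch" zone, using two thresholds (hysteresis).
final class ZoneTracker {
  enum Zone { CALM, GLITCH }

  private final float enterGlitchBelow; // switch to GLITCH below this depth
  private final float exitGlitchAbove;  // switch back to CALM above this depth
  private Zone zone = Zone.CALM;

  ZoneTracker(float enterGlitchBelow, float exitGlitchAbove) {
    if (exitGlitchAbove < enterGlitchBelow) {
      throw new IllegalArgumentException("exit threshold must not be below enter threshold");
    }
    this.enterGlitchBelow = enterGlitchBelow;
    this.exitGlitchAbove = exitGlitchAbove;
  }

  /** Feeds one depth reading; returns true only when the zone changes. */
  boolean update(float closest) {
    Zone next = zone;
    if (zone == Zone.CALM && closest < enterGlitchBelow) {
      next = Zone.GLITCH;
    } else if (zone == Zone.GLITCH && closest > exitGlitchAbove) {
      next = Zone.CALM;
    }
    boolean changed = next != zone;
    zone = next;
    return changed;
  }

  Zone zone() {
    return zone;
  }
}
```

With `new ZoneTracker(500, 600)`, the sound files would only need to be paused or started on the frames where `update` returns true, rather than on every call to `draw()`. In a Processing sketch, a class like this would probably need to live in its own `.java` tab.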

Here’s my code (Processing):

    import ddf.minim.*;
    import processing.video.*;
    import org.openkinect.freenect.*;
    import org.openkinect.processing.*;

    Kinect kinect;
    PImage depthImg;

    // Raw depth range (0-2047) that counts as someone standing in the space
    int minDepth = 60;
    int maxDepth = 860;

    Minim minim;
    AudioPlayer sea1; // calm sea sound
    AudioPlayer sea2; // glitch sound

    float angle;

    // Videos
    Movie Video1; // calm sea
    Movie Video2; // glitched sea

    void setup() {
      //size(1280, 720);
      fullScreen();

      kinect = new Kinect(this);
      kinect.initDepth();
      angle = kinect.getTilt();

      // Videos
      Video1 = new Movie(this, "seavid.mp4");
      Video2 = new Movie(this, "glitchvid.mp4");

      // One Minim instance can load both sound files
      minim = new Minim(this);
      sea1 = minim.loadFile("calmsea.mp3", 2048);
      //sea1.loop();
      sea2 = minim.loadFile("glitchsound.mp3", 2048);

      // Print basic information about each sound file
      println("calmsea sample rate = " + sea1.sampleRate() + " Hz");
      println("calmsea duration = " + sea1.length() / 1000.0 + " seconds");
      println("glitchsound sample rate = " + sea2.sampleRate() + " Hz");
      println("glitchsound duration = " + sea2.length() / 1000.0 + " seconds");

      depthImg = new PImage(kinect.width, kinect.height);
    }

    void draw() {
      //image(kinect.getDepthImage(), 0, 0);
      int[] depth = kinect.getRawDepth();

      // Default when nothing is in range; it is above the 600 threshold,
      // so the calm video plays
      float closestPoint = 700;
      // Position of the closest pixel (currently unused)
      int closestX = 0;
      int closestY = 0;

      for (int x = 0; x < kinect.width; x++) {
        for (int y = 0; y < kinect.height; y++) {
          int offset = x + y*kinect.width;
          // Grab the raw depth
          int rawDepth = depth[offset];
          //println(mouseX);

          // Only consider pixels inside the depth range and to the right of
          // x = 162. The x < 678 bound has no effect if the depth image is
          // 640 pixels wide, as on the original Kinect.
          if (rawDepth >= minDepth && rawDepth <= maxDepth && x > 162 && x < 678) {
            depthImg.pixels[offset] = color(255);
            if (rawDepth < closestPoint) {
              closestPoint = rawDepth;
              closestX = x;
              closestY = y;
            }
          } else {
            depthImg.pixels[offset] = color(0);
          }
        }
      }

      // Update the thresholded image (it is not drawn to the screen)
      depthImg.updatePixels();
      println(closestPoint);
      //pushStyle();

      // Far away: calm video and calm sound
      if (closestPoint > 600) {
        Video1.play();
        image(Video1, 0, 0, width, height);
        sea1.play();
      }

      // Close: glitch video and glitch sound
      if (closestPoint < 500) {
        Video1.stop();
        Video2.play();
        image(Video2, 0, 0, width, height);
        sea1.pause();
        sea2.play();
        //sea2.loop();
      }

      // Moved away again: stop the glitch sound and resume the calm sound
      if (closestPoint > 600 && sea2.isPlaying()) {
        sea2.pause();
        sea1.loop();
      }
    }

    // Read each new video frame as it becomes available
    void movieEvent(Movie m) {
      m.read();
    }

    // UP/DOWN tilt the Kinect; a/s adjust minDepth; z/x adjust maxDepth
    void keyPressed() {
      if (key == CODED) {
        if (keyCode == UP) {
          angle++;
        } else if (keyCode == DOWN) {
          angle--;
        }
        angle = constrain(angle, 0, 30);
        kinect.setTilt(angle);
      } else if (key == 'a') {
        minDepth = constrain(minDepth+10, 0, maxDepth);
      } else if (key == 's') {
        minDepth = constrain(minDepth-10, 0, maxDepth);
      } else if (key == 'z') {
        maxDepth = constrain(maxDepth+10, minDepth, 2047);
      } else if (key == 'x') {
        maxDepth = constrain(maxDepth-10, minDepth, 2047);
      }
    }

User Testing

I thought it was interesting that the first user removed her shoes when she entered the space, and that her first thought was to walk straight towards the projection rather than sit down or linger. I feel that because I asked her to come and look at the project, she assumed she had to “do” something rather than just take the time to explore. This interaction might be different for people who come across the projection of the ocean without me asking them to come and take a look. For example, as I was walking across the hallway, someone stopped to look at the projection from where the first partition was, but didn’t walk towards it. Someone else just sat in the majlis for a while, without walking around the space. My second user, after walking towards the projection and finding that the sound and video changed, asked if it would be better to have some sort of signifier (like two footprints on the carpet). I feel like that would make it too explicit, and I want interactors to engage with the piece in whatever way makes sense to them. However, I did place three picture frames on the table next to the projection, so that people might be curious enough to walk towards the front.

Overall, I feel like I could have developed this idea a little more, especially the technical side of things (mostly the transition between the two videos). I realized that without a description, the video and the interaction itself don’t make sense to most viewers. I think I managed to make the space feel like someone’s living room, but beyond that, understanding the project still requires further explanation. I don’t know if that’s necessarily a bad thing, but I wanted to create something that can convey meaning through the interaction itself.