So far, I’d say Steven and I are about three-quarters of the way through. This post breaks down the hardware and software progress we’ve made!

Hardware

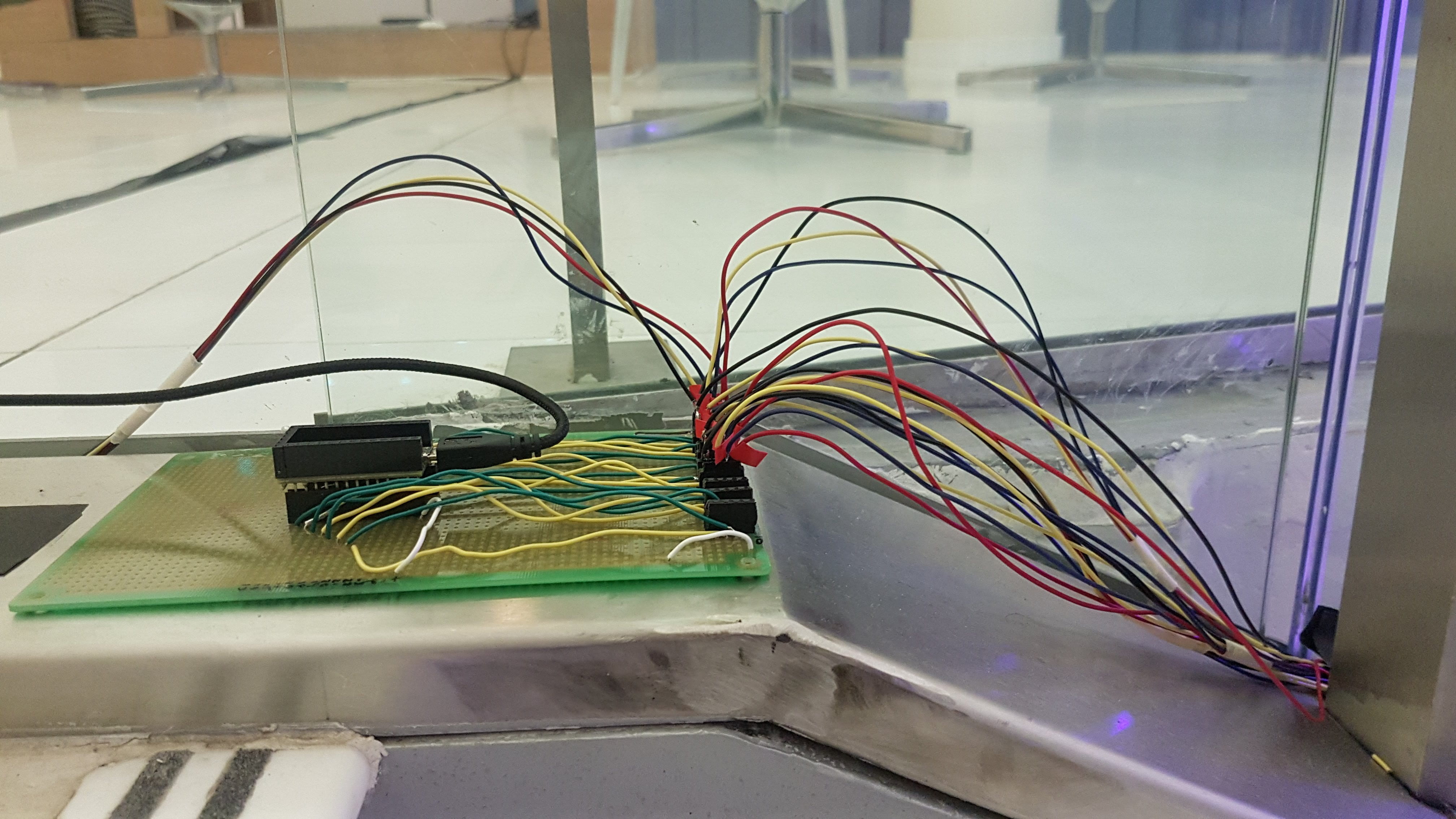

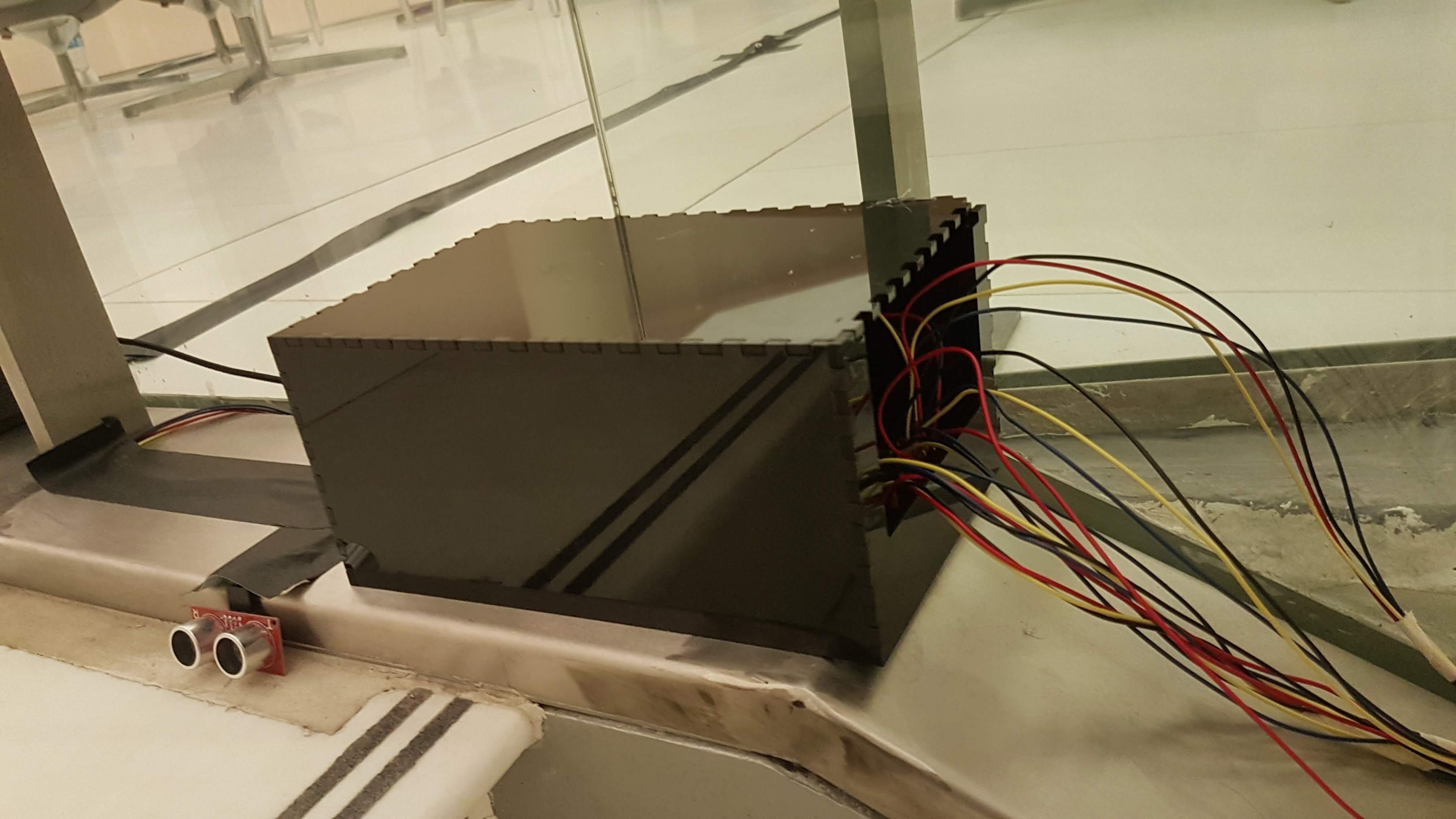

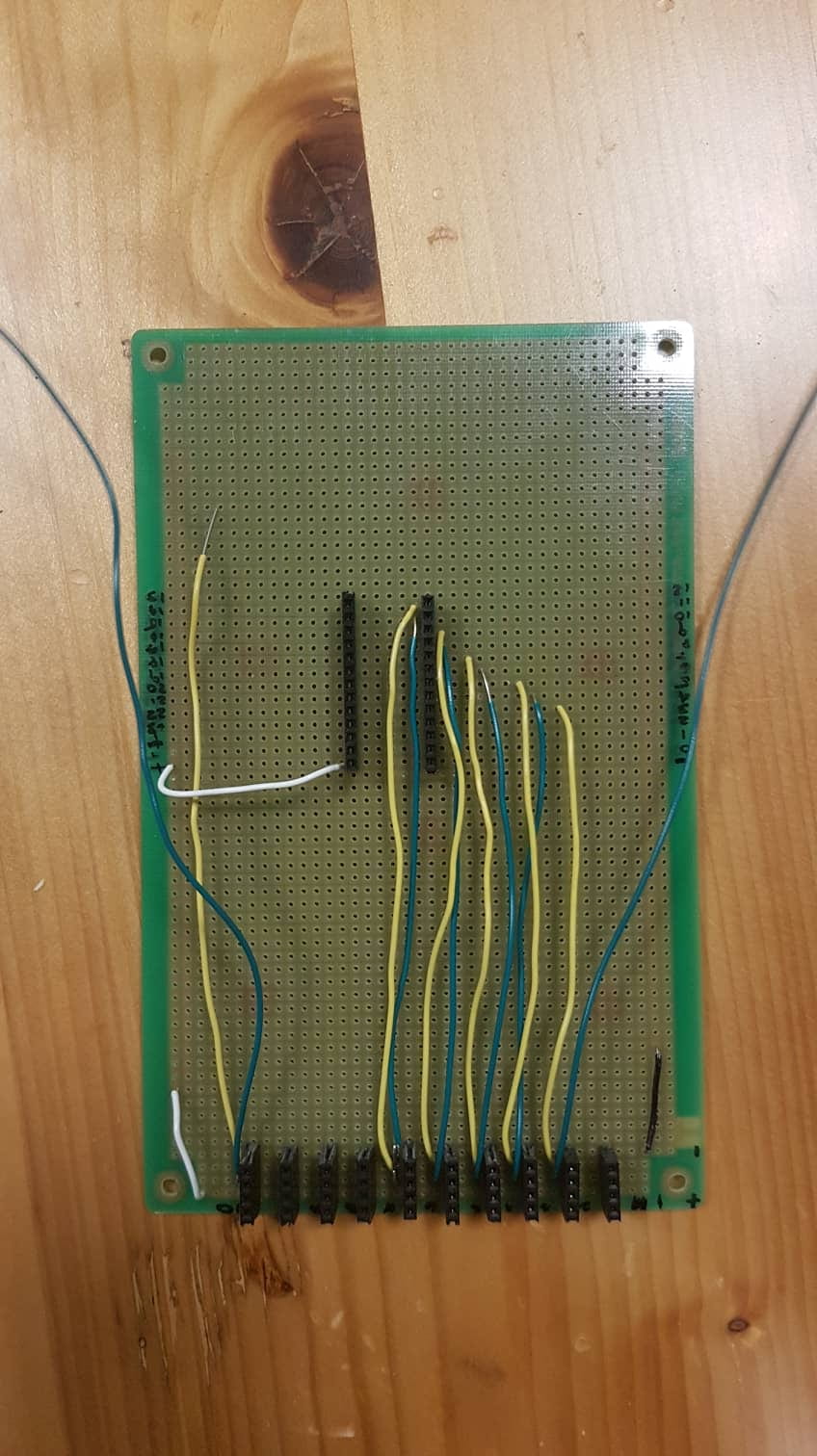

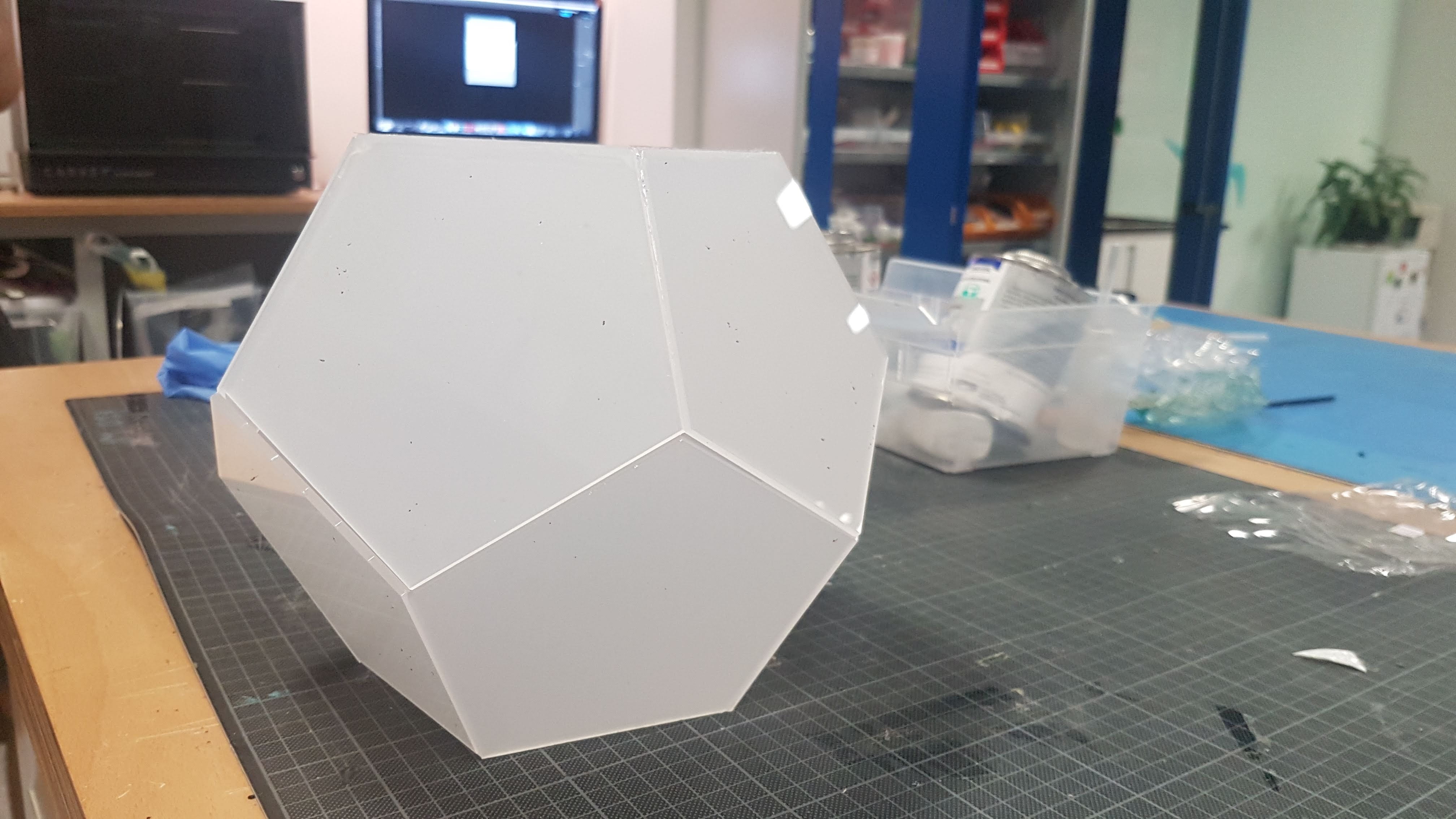

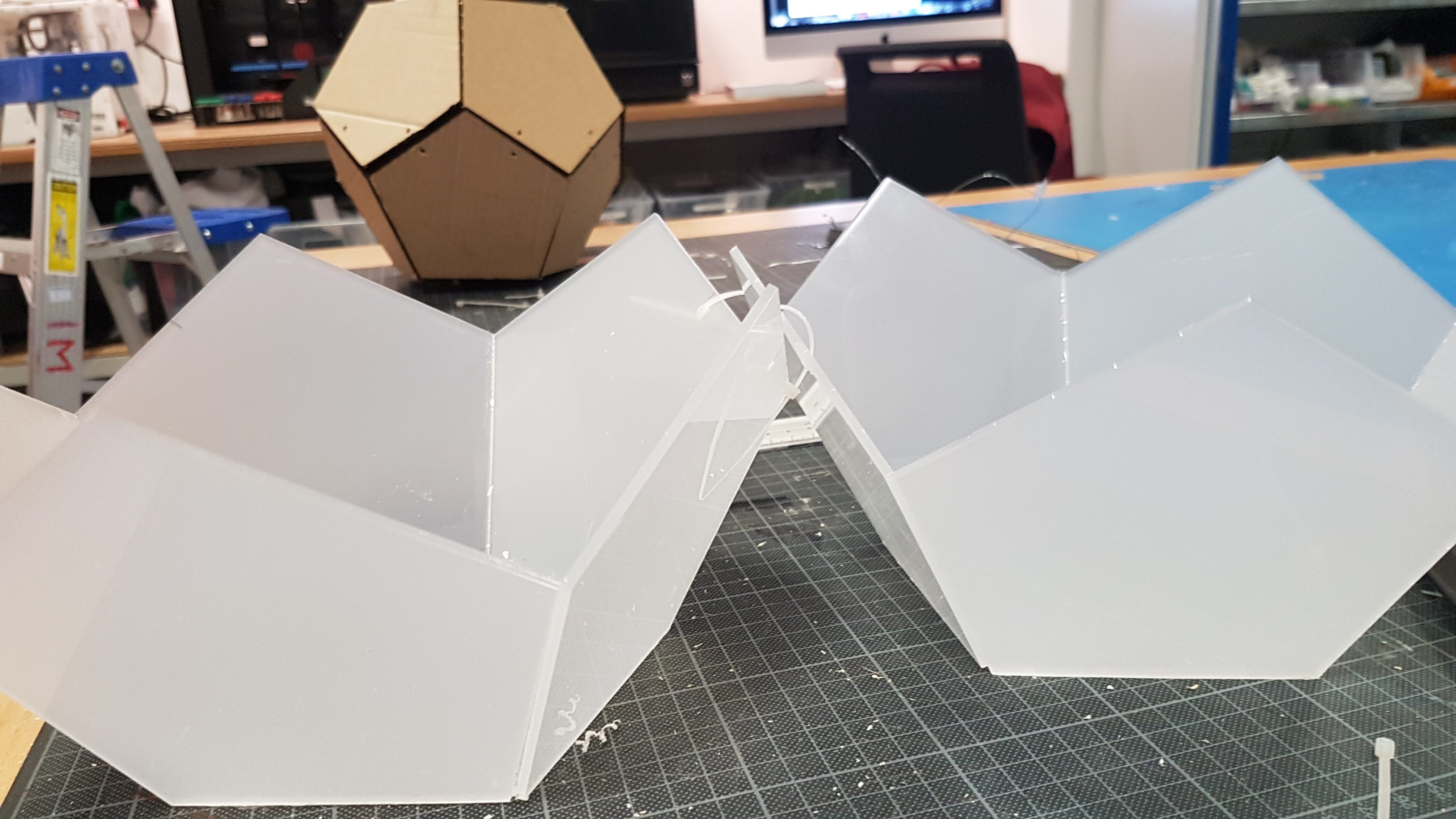

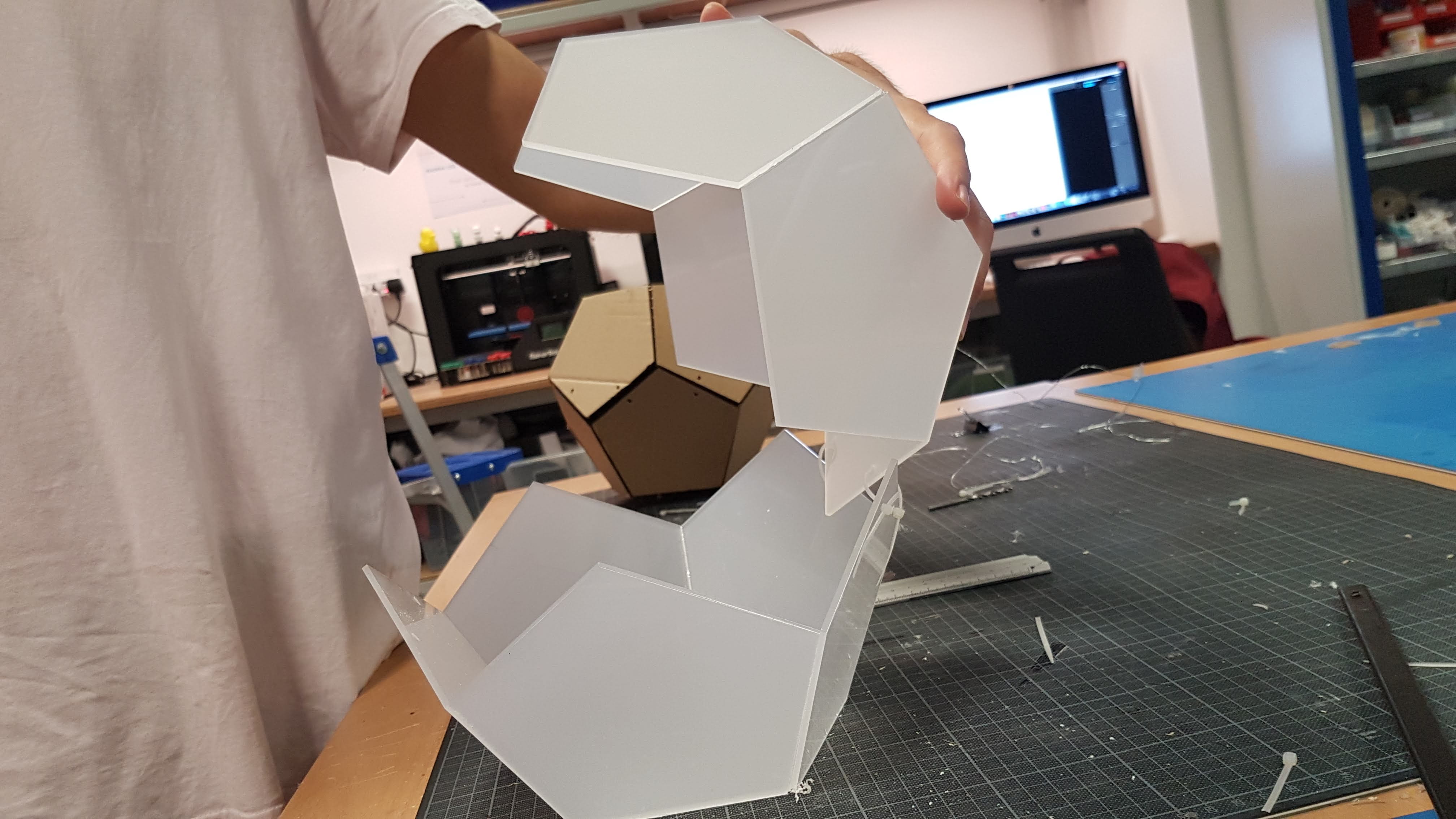

In the end, we decided to laser cut a dodecahedron out of frosted acrylic instead of buying or ordering an acrylic ball. Making our own gives us more flexibility on size, and it is easier to build it as two halves and join them before the performance than to work through the hole in an acrylic ball. After many hours of applying acrylic glue and making sure each face sat at the proper angle, we got the result shown in the photos above.
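For anyone checking the face angles while gluing, the geometry of a regular dodecahedron can be worked out from the pentagon edge length alone. The snippet below is a minimal sketch, not the procedure we followed: the function name `dodecahedron_dimensions` and the 50 mm edge length are assumptions for illustration, and the formulas are the standard ones for a regular dodecahedron.

```python
import math


def dodecahedron_dimensions(edge_mm: float) -> dict:
    """Key dimensions of a regular dodecahedron with the given edge length."""
    sqrt5 = math.sqrt(5)
    # Angle between two adjacent pentagonal faces (the dihedral angle).
    dihedral_deg = math.degrees(math.acos(-1 / sqrt5))
    # Radius of the sphere passing through all 20 vertices.
    circumradius_mm = edge_mm * math.sqrt(3) * (1 + sqrt5) / 4
    # Radius of the largest sphere that fits inside, touching every face.
    inradius_mm = edge_mm * math.sqrt((25 + 11 * sqrt5) / 10) / 2
    return {
        "dihedral_deg": dihedral_deg,
        "circumradius_mm": circumradius_mm,
        "inradius_mm": inradius_mm,
    }


if __name__ == "__main__":
    # 50 mm is a hypothetical edge length, not the size we actually cut.
    for name, value in dodecahedron_dimensions(50).items():
        print(f"{name}: {value:.2f}")
```

Adjacent faces meet at roughly 116.57 degrees whatever the size. With a 50 mm edge, the vertices sit about 70 mm from the center, and the largest sphere that fits inside has a radius of about 56 mm, which helps when judging whether the electronics will fit.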

Software

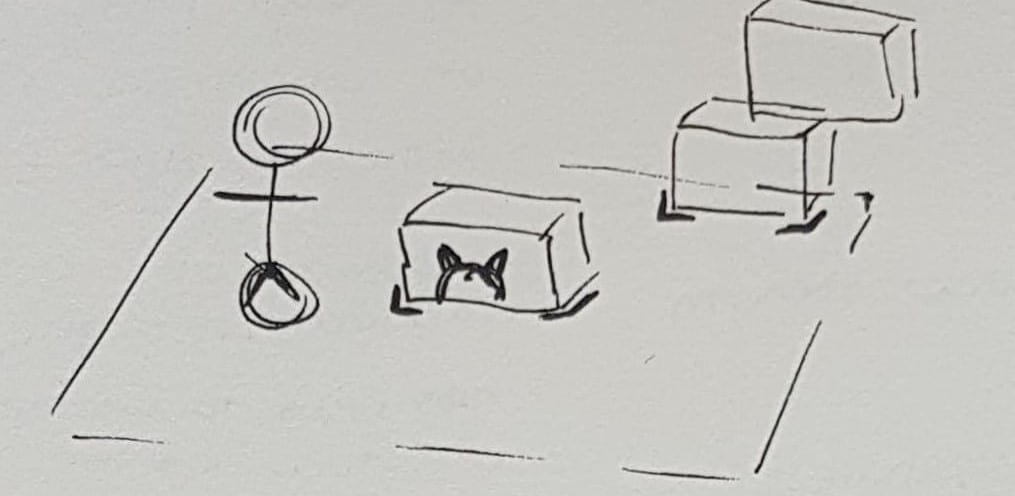

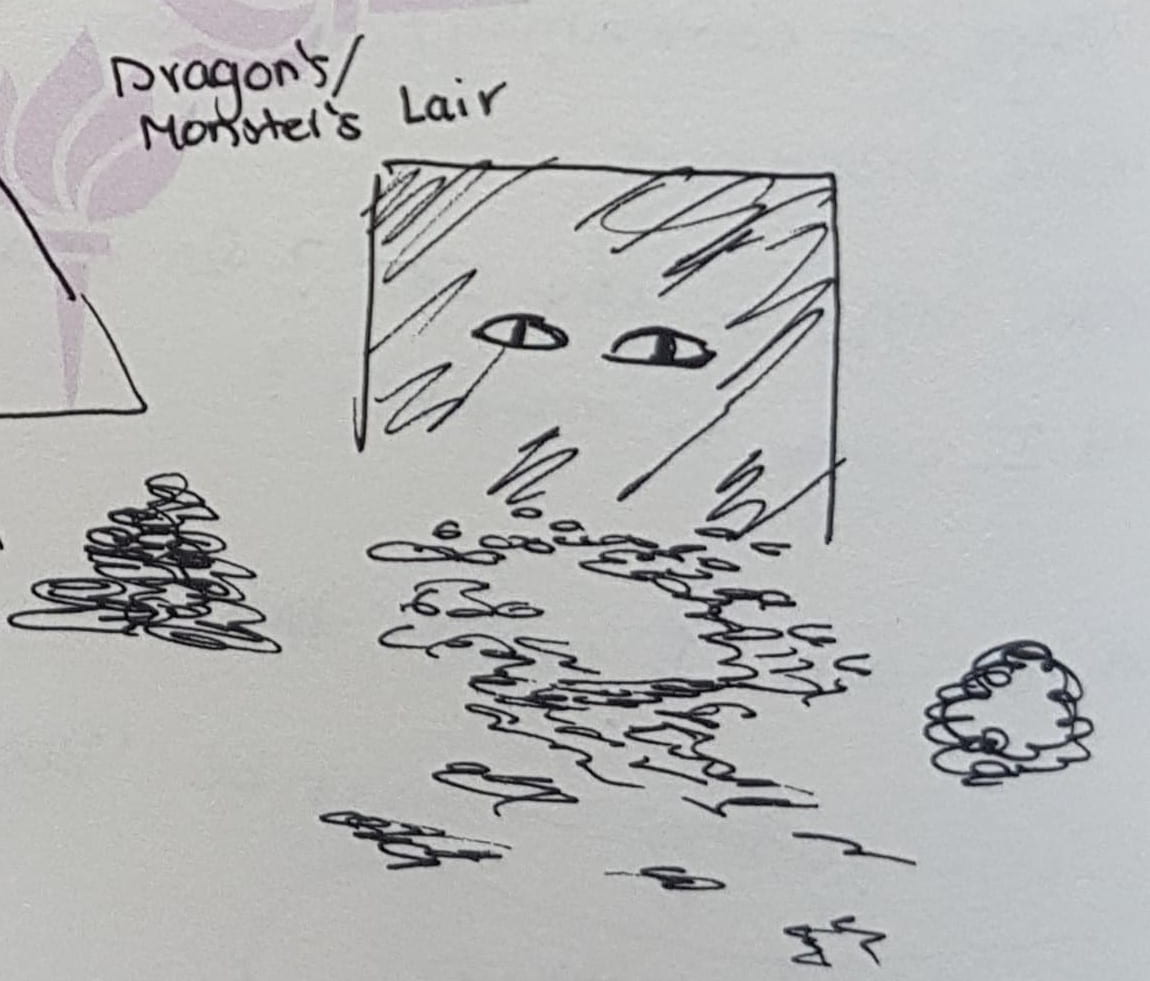

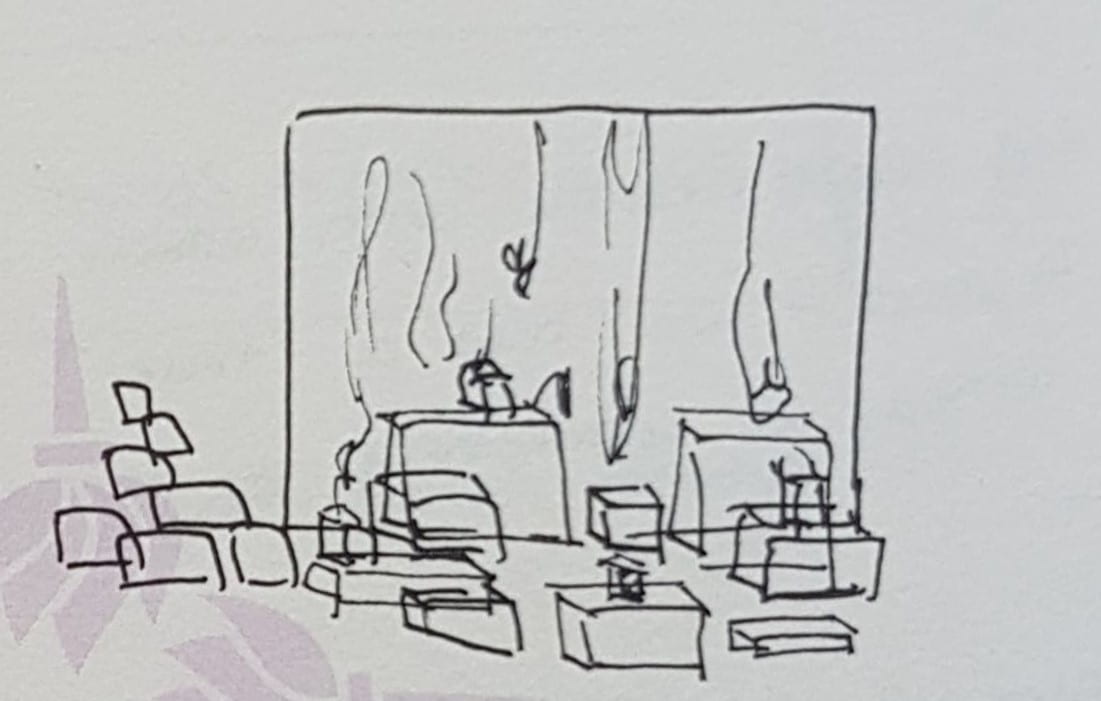

On the code and performance side, we’ve built the visuals for the first two of the three parts of the performance. This is how they look:

Part 1: Erica (our dancer) is curious about the glowing “thing” in the middle and slowly approaches and gently nudges it.

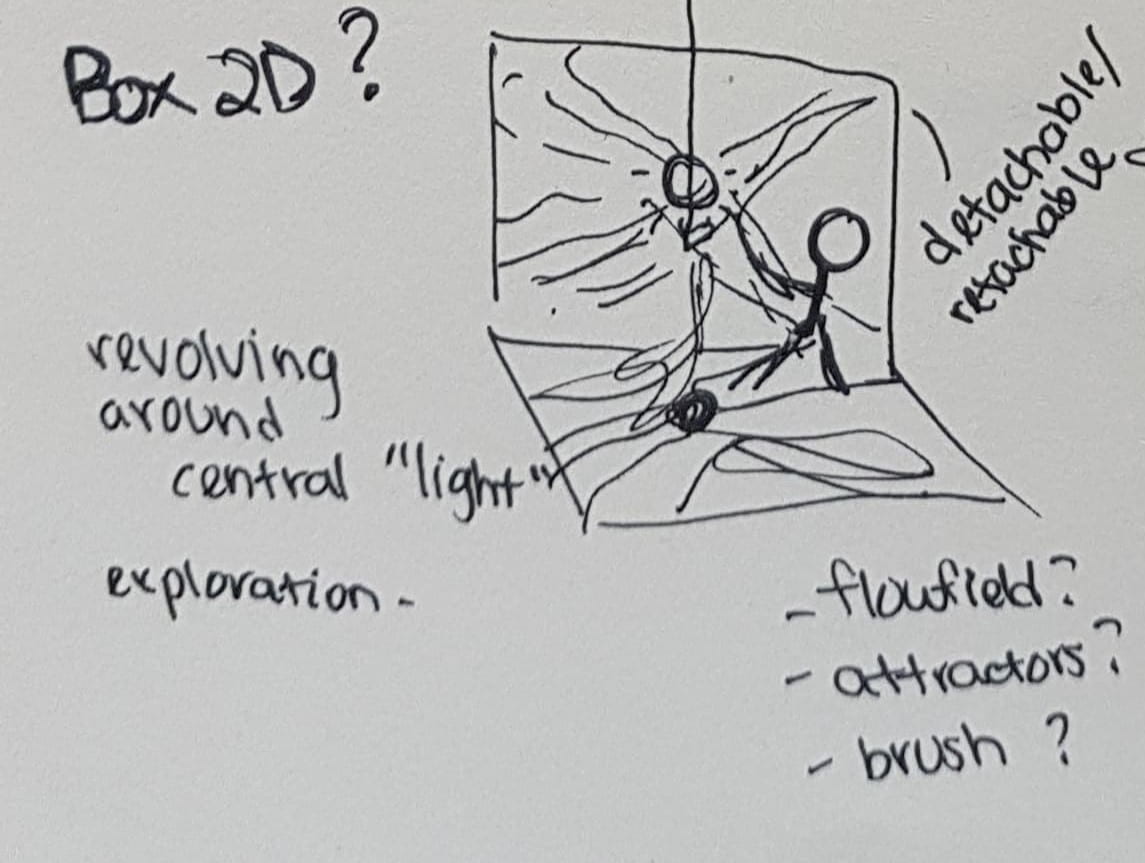

Part 2: Erica will detach the ball and start “drawing” with it.

The particles will eventually form a preset shape or text (we still need to decide which).
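One simple way to get particles to settle into a preset shape is to give each particle a target point and ease it toward that point every frame. The code below is only a sketch of that idea under stated assumptions: it uses plain easing rather than our attractor sketch, and the `Particle` class, the `make_particles` helper, the 640x480 canvas, and the square of target points are all hypothetical.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    tx: float  # target x on the preset shape
    ty: float  # target y on the preset shape

    def step(self, ease: float = 0.1) -> None:
        """Move a fixed fraction of the remaining distance toward the target."""
        self.x += (self.tx - self.x) * ease
        self.y += (self.ty - self.y) * ease

    def distance_to_target(self) -> float:
        return math.hypot(self.tx - self.x, self.ty - self.y)


def make_particles(targets, width=640, height=480, seed=0):
    """Scatter one particle at a random position for every target point."""
    rng = random.Random(seed)
    return [
        Particle(rng.uniform(0, width), rng.uniform(0, height), tx, ty)
        for tx, ty in targets
    ]


if __name__ == "__main__":
    # Hypothetical preset shape: the four corners of a square.
    square = [(200, 150), (440, 150), (440, 330), (200, 330)]
    particles = make_particles(square)
    for _ in range(100):  # 100 frames of animation
        for p in particles:
            p.step()
    print(max(p.distance_to_target() for p in particles))
```

For text or an image, the target list could come from sampling the dark pixels of a rendered bitmap, with the rest of the loop unchanged.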

Sound

Overall, we think we’re in a pretty good spot! These are the things we still need to do before rehearsals over the weekend:

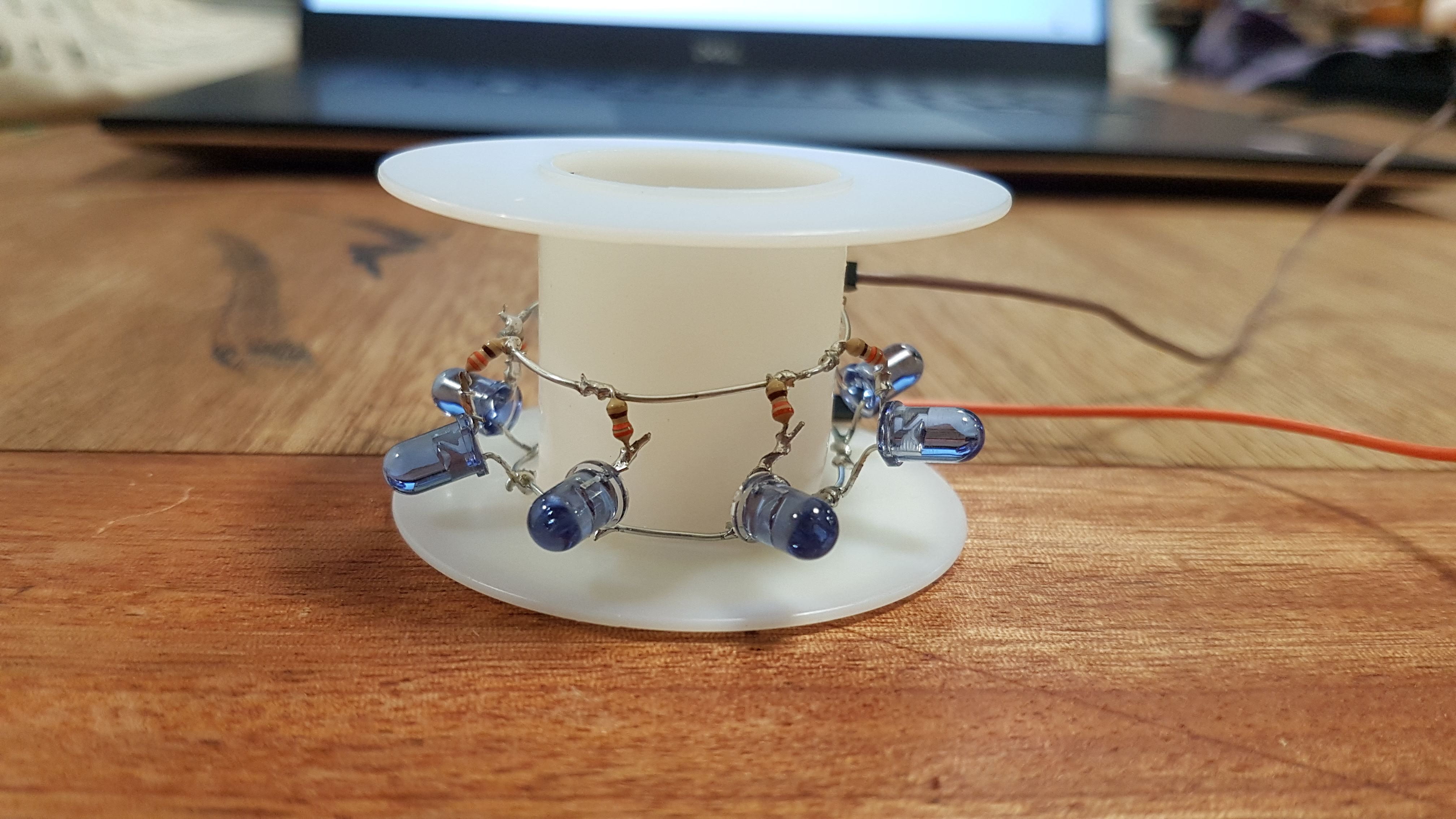

Hardware:

Check out 2 more NeoPixel rings

Set the IR LEDs and NeoPixel rings in place

Control the NeoPixels over Bluetooth LE

Software:

Get attractors & particles drawing image sketch working

Finalize 3rd part – get attractors sketch working

Put all separate parts into the same code

Test all parts with IR and ball

Test IR with camera on the floor (possibly image warp)

Have the program save previous locations in case it fails to detect an IR light

Capacitive-touch key presses
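The item about saving previous locations could look roughly like the sketch below: keep the last detected IR position and reuse it for a limited number of frames when detection fails. This is an assumption about how it might be done, not our finished code. The `LastKnownTracker` class and the `max_missed` cutoff are hypothetical names, and the detection itself is represented by a plain `(x, y)` tuple or `None`.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]


class LastKnownTracker:
    """Fall back to the last detected position when the IR light is missed."""

    def __init__(self, max_missed: int = 30) -> None:
        self.last: Optional[Point] = None
        self.missed = 0  # consecutive frames without a detection
        self.max_missed = max_missed

    def update(self, detection: Optional[Point]) -> Optional[Point]:
        if detection is not None:
            # Fresh detection: remember it and reset the miss counter.
            self.last = detection
            self.missed = 0
            return detection
        # No detection this frame: reuse the stored position for a while.
        self.missed += 1
        if self.missed > self.max_missed:
            # Lost for too long, so stop reporting a stale position.
            self.last = None
        return self.last


if __name__ == "__main__":
    tracker = LastKnownTracker(max_missed=2)
    frames = [(120.0, 80.0), None, None, None, (130.0, 85.0)]
    for frame in frames:
        print(tracker.update(frame))
```

The cutoff keeps the visuals from freezing on a stale point if the ball leaves the camera's view for good. The right number of frames would depend on the frame rate and would need tuning in rehearsal.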

Sound:

Choose specific sound times

Choose sound effects for last part

Image:

Decide on the image/text to display in Part 2